Superintelligence

Incorporating technological singularities, hard AI take-offs, game-over high scores, the technium, deus-ex-machina, deus-ex-nube, AI supremacy, nerd raptures and so forth

December 1, 2016 — October 18, 2024


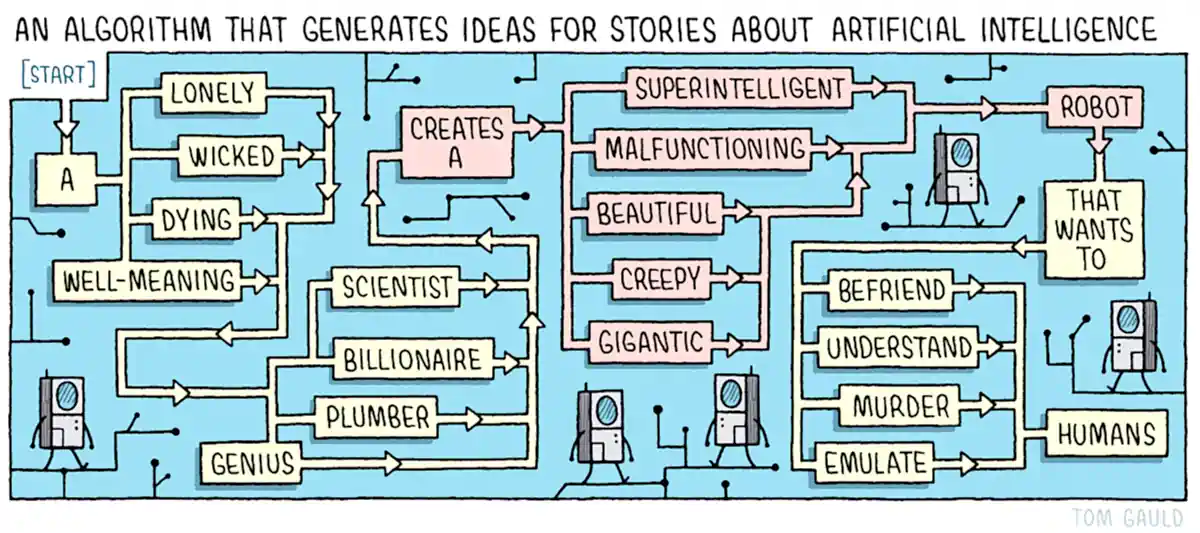

Small notes on the Rapture of the Nerds. If AI keeps on improving, will explosive intelligence eventually cut humans out of the loop and go on without us? Also, crucially, would we be pensioned in that case?

The internet has opinions about this.

One fruitful application of these ideas is producing interesting science fiction and contemporary horror. I would like there to be other fruitful applications, but so far the rest are all much more speculative.

1 Safety, risks

See AI Safety.

2 What is TESCREALism?

An article denouncing TESCREALism recently went viral in my circles. There is a lot going on there, so much that I made a TESCREALism page to keep track of it all. tl;dr: mostly it’s one of those culture war things where people argue on social media about who is on which team.

3 In historical context

Various authors have tried to put modern AI developments in continuity with historical trends towards more legible, compute-oriented societies. More filed under big history.

Ian Morris on whether deep history says we’re heading for an intelligence explosion

Wong and Bartlett (2022)

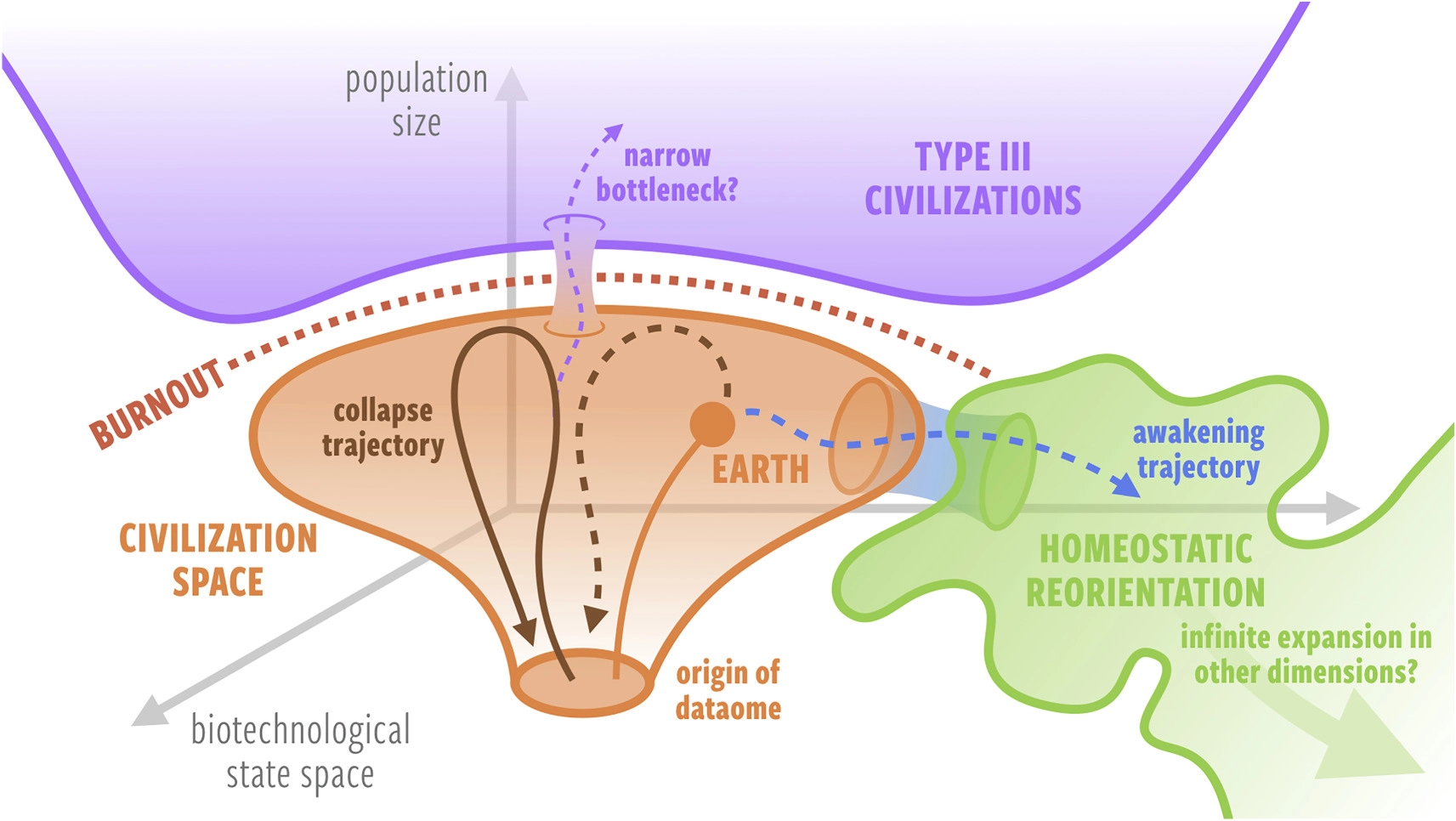

we hypothesize that once a planetary civilization transitions into a state that can be described as one virtually connected global city, it will face an ‘asymptotic burnout’, an ultimate crisis where the singularity-interval time scale becomes smaller than the time scale of innovation. If a civilization develops the capability to understand its own trajectory, it will have a window of time to affect a fundamental change to prioritize long-term homeostasis and well-being over unyielding growth—a consciously induced trajectory change or ‘homeostatic awakening’. We propose a new resolution to the Fermi paradox: civilizations either collapse from burnout or redirect themselves to prioritising homeostasis, a state where cosmic expansion is no longer a goal, making them difficult to detect remotely.

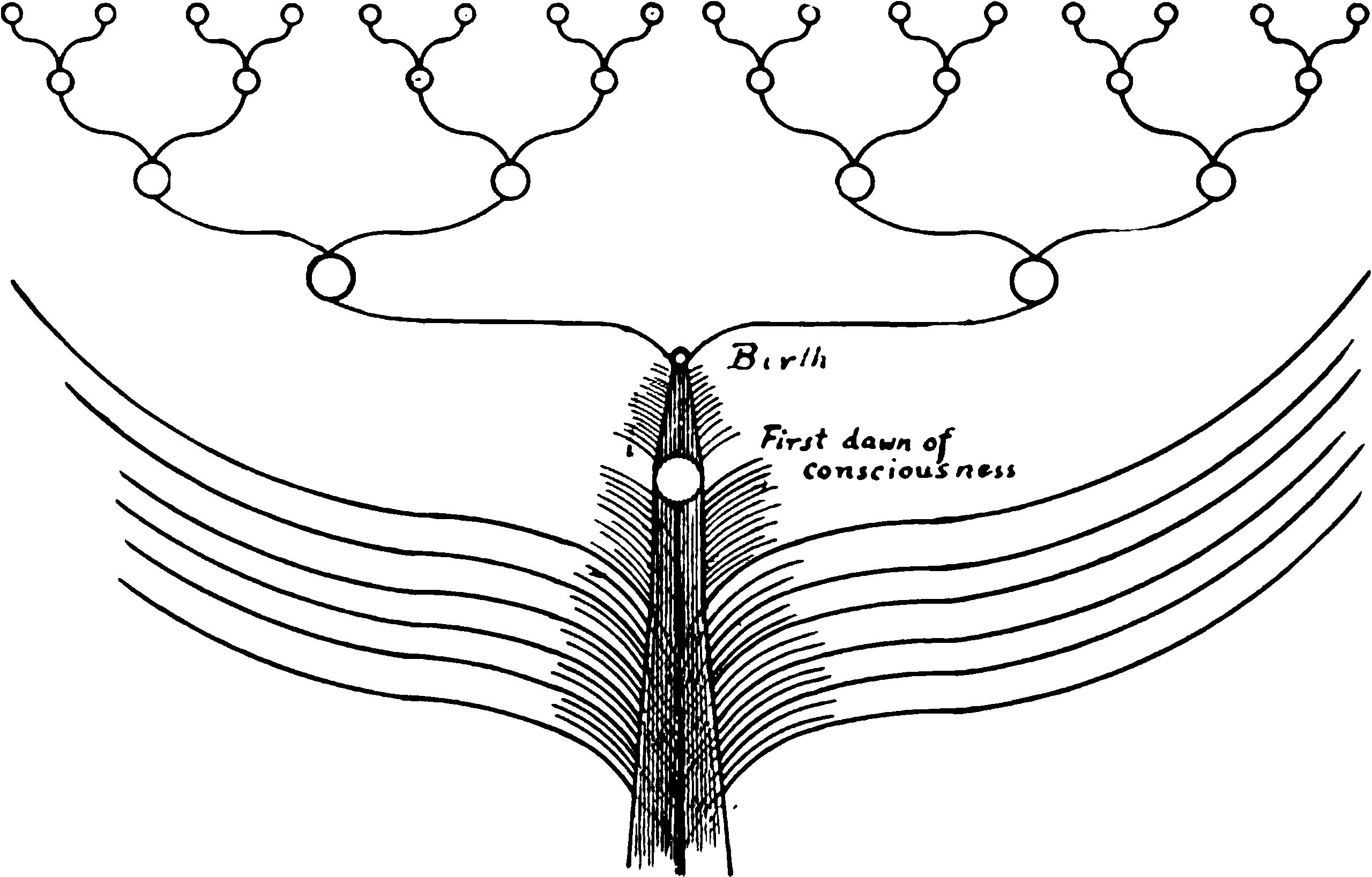

This raises the question of whether we need computers to create AIs at all, or whether we are all already AIs.

3.1 Most-important century model

- Holden Karnofsky, The “most important century” blog post series

- Robert Wiblin’s analysis: This could be the most important century

4 Models of AGI

Hutter’s models

- AIXI [’ai̯k͡siː] is a theoretical mathematical formalism for artificial general intelligence. It combines Solomonoff induction with sequential decision theory. AIXI was first proposed by Marcus Hutter in 2000 and several results regarding AIXI are proved in Hutter’s 2005 book Universal Artificial Intelligence. (Hutter 2005)
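For orientation, here is the AIXI action rule as it is usually stated. This is a sketch from memory of the standard presentation, not a quotation, so check the notation against Hutter (2005) before relying on it. Here $U$ is a universal Turing machine, $q$ ranges over programs, $\ell(q)$ is the length of $q$ in bits, $a_i$, $o_i$ and $r_i$ are the action, observation and reward at step $i$, and $m$ is the planning horizon.

$$
a_k := \arg\max_{a_k} \sum_{o_k r_k} \cdots \max_{a_m} \sum_{o_m r_m} \left[ r_k + \cdots + r_m \right] \sum_{q \,:\, U(q,\, a_1 \ldots a_m) \,=\, o_1 r_1 \ldots o_m r_m} 2^{-\ell(q)}
$$

The innermost sum is the Solomonoff-style part: every program consistent with the interaction history is weighted by $2^{-\ell(q)}$, so shorter explanations count for more. The alternating $\max$ and $\sum$ are the sequential decision theory part: an expectimax over future actions and percepts up to the horizon.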

Prequel: An Introduction to Universal Artificial Intelligence - 1st Edition - M

Ecologies of minds considers the distinction between evolutionary and optimising minds.

More to say here; perhaps later.

5 Aligning AI

Let us consider general alignment, because I have little AI-specific to say yet.

6 Constraints

6.1 Compute methods

We are getting very good at efficiently using hardware (Grace 2013). AI and efficiency (Hernandez and Brown 2020) makes this clear:

We’re releasing an analysis showing that since 2012 the amount of compute needed to train a neural net to the same performance on ImageNet classification has been decreasing by a factor of 2 every 16 months. Compared to 2012, it now takes 44 times less compute to train a neural network to the level of AlexNet (by contrast, Moore’s Law would yield an 11x cost improvement over this period). Our results suggest that for AI tasks with high levels of recent investment, algorithmic progress has yielded more gains than classical hardware efficiency.
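The arithmetic in that quote can be checked in a few lines. This is a minimal sketch, not the authors' own calculation. It assumes a 7-year window (2012 to 2019) and a 24-month doubling time for Moore's Law, neither of which is stated in the quote itself, and the function names are made up for illustration.

```python
import math

# Assumption: the comparison window is 2012 to 2019, i.e. 7 years.
ELAPSED_MONTHS = 7 * 12
# Assumption: Moore's Law taken as a doubling every 24 months.
MOORE_DOUBLING_MONTHS = 24


def implied_doubling_time_months(total_factor: float, elapsed_months: float) -> float:
    """Doubling (or halving) time implied by an overall improvement factor."""
    return elapsed_months / math.log2(total_factor)


def gain_from_doubling_time(elapsed_months: float, doubling_months: float) -> float:
    """Overall improvement factor after repeated doublings."""
    return 2 ** (elapsed_months / doubling_months)


# 44x less compute over the window implies a halving time of about 15.4 months,
# in the same ballpark as the quoted "every 16 months".
algorithmic_halving_months = implied_doubling_time_months(44, ELAPSED_MONTHS)

# A 24-month doubling over the same window gives about 11.3x,
# matching the quoted "11x" for Moore's Law.
moore_gain = gain_from_doubling_time(ELAPSED_MONTHS, MOORE_DOUBLING_MONTHS)

print(f"implied algorithmic halving time: {algorithmic_halving_months:.1f} months")
print(f"Moore's Law gain over the window: {moore_gain:.1f}x")
```

Under these assumptions the two quoted figures are mutually consistent: the algorithmic gain is roughly four times the hardware gain over the same period.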

See also

6.2 Compute hardware

TBD

7 Omega point etc

Surely someone has noticed the poetical similarities to the idea of noösphere/Omega point. I will link to that when I discover something well-written enough.

Q: Did anyone think that the noösphere would fit on a consumer hard drive?

“Hi there, my everyday carry is the sum of human knowledge.”

8 Incoming

Where did Johansen and Sornette’s economic model (Johansen and Sornette 2001) go? I think it ended up spawning Efferson, Richerson, and Weinberger (2023) and Sornette (2003), and then fizzled. I am not sure whether either of those is “about” superintelligence per se, but it looks like superintelligence might be implied.

Henry Farrell and Cosma Shalizi: Shoggoths amongst us connect AIs to cosmic horror to institutions.

Ten Hard Problems in and around AI

We finally published our big 90-page intro to AI. Its likely effects, from ten perspectives, ten camps. The whole gamut: ML, scientific applications, social applications, access, safety and alignment, economics, AI ethics, governance, and classical philosophy of life.

Artificial Consciousness and New Language Models: The changing fabric of our society - DeepFest 2023

Douglas Hofstadter changes his mind on Deep Learning & AI risk

François Chollet, The implausibility of intelligence explosion

Ground zero of the idea in fiction, perhaps, Vernor Vinge’s The Coming Technological Singularity

Stuart Russell on Making Artificial Intelligence Compatible with Humans, an interview on various themes in his book (Russell 2019)

Attempted Gears Analysis of AGI Intervention Discussion With Eliezer

Kevin Scott argues for finding a single notion of what knowledge work is, one that unifies what humans and machines can do (Scott 2022).

Hildebrandt (2020) argues for talking about smart tech instead of AI tech. See Smart technologies | Internet Policy Review

Speaking of ‘smart’ technologies we may avoid the mysticism of terms like ‘artificial intelligence’ (AI). To situate ‘smartness’ I nevertheless explore the origins of smart technologies in the research domains of AI and cybernetics. Based in postphenomenological philosophy of technology and embodied cognition rather than media studies and science and technology studies (STS), the article entails a relational and ecological understanding of the constitutive relationship between humans and technologies, requiring us to take seriously their affordances as well as the research domain of computer science. To this end I distinguish three levels of smartness, depending on the extent to which they can respond to their environment without human intervention: logic-based, grounded in machine learning or in multi-agent systems. I discuss these levels of smartness in terms of machine agency to distinguish the nature of their behaviour from both human agency and from technologies considered dumb. Finally, I discuss the political economy of smart technologies in light of the manipulation they enable when those targeted cannot foresee how they are being profiled.

Everyone loves Bart Selman’s AAAI Presidential Address: The State of AI

Karnofsky, All Possible Views About Humanity’s Future Are Wild

- In a series of posts starting with this one, I’m going to argue that the 21st century could see our civilization develop technologies allowing rapid expansion throughout our currently-empty galaxy. And thus, that this century could determine the entire future of the galaxy for tens of billions of years, or more.[…]

- But I don’t think it’s really possible to hold a non-“wild” view on this topic. I discuss alternatives to my view: a “conservative” view that thinks the technologies I’m describing are possible, but will take much longer than I think, and a “skeptical” view that thinks galaxy-scale expansion will never happen. Each of these views seems “wild” in its own way.