Incentive alignment problems

What is your loss function?

September 22, 2014 — February 2, 2025

Placeholder to discuss alignment problems in AI, economic mechanisms, and institutions.

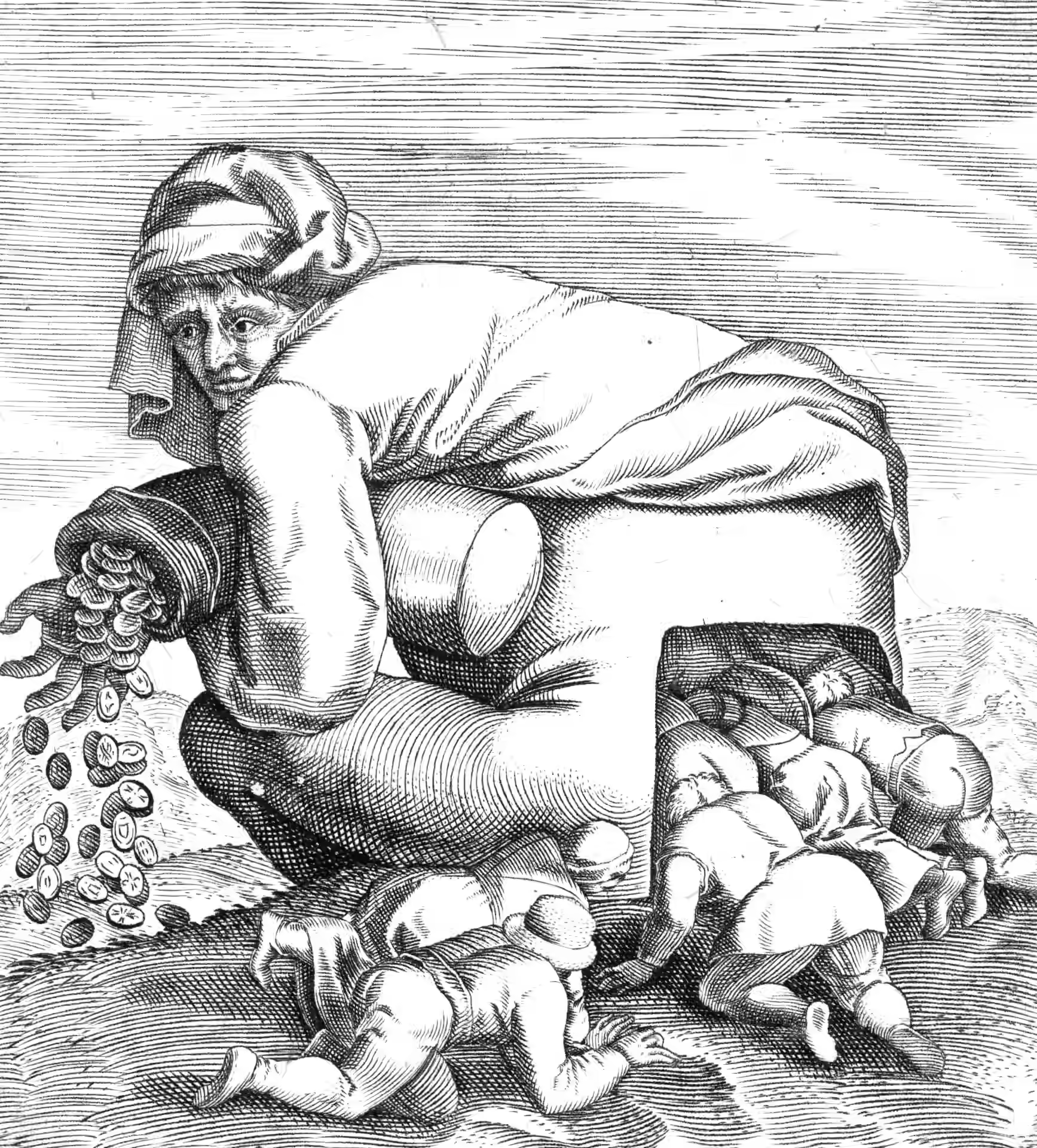

Many things to unpack. What do we imagine alignment to be when our own goals are themselves a diverse evolutionary epiphenomenon? Does everything ultimately Goodhart? Is that the origin of Moloch?
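To make the Goodhart question a little more concrete, here is a minimal sketch of the regressional flavour of the effect. Everything in it is an assumption chosen for illustration: each candidate has a Gaussian "true value", the proxy we can measure is that value plus independent Gaussian noise, and the function name `goodhart_gap` and its parameters are hypothetical. We select the candidates that score best on the proxy and compare what the proxy promised with what we actually got.

```python
import random
import statistics


def goodhart_gap(n=10_000, top_k=100, noise_sd=1.0, seed=0):
    """Select the top_k candidates by a noisy proxy and report
    (mean proxy score, mean true value) among the selected."""
    rng = random.Random(seed)
    candidates = []
    for _ in range(n):
        true_value = rng.gauss(0.0, 1.0)                 # what we care about
        proxy = true_value + rng.gauss(0.0, noise_sd)    # what we can measure
        candidates.append((proxy, true_value))

    # Optimise the metric: keep the candidates with the highest proxy scores.
    selected = sorted(candidates, reverse=True)[:top_k]

    mean_proxy = statistics.fmean(p for p, _ in selected)
    mean_true = statistics.fmean(t for _, t in selected)
    return mean_proxy, mean_true


if __name__ == "__main__":
    mean_proxy, mean_true = goodhart_gap()
    print(f"selected mean proxy: {mean_proxy:.2f}")
    print(f"selected mean true value: {mean_true:.2f}")
```

Under these assumptions the selected group looks better on the metric than it really is, because selecting hard on the proxy also selects for lucky noise. This is only the mildest version of the problem; it says nothing about agents who actively game the metric.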

1 AI alignment

The knotty case of superintelligent AI in particular, when it acquires goals.

2 Incoming

Joe Edelman, Is Anything Worth Maximizing? How metrics shape markets, how we’re doing them wrong

Metrics are how an algorithm or an organisation listens to you. If you want to listen to one person, you can just sit with them and see how they’re doing. If you want to listen to a whole city — a million people — you have to use metrics and analytics.

and

What would it be like, if we could actually incentivize what we want out of life? If we incentivized lives well lived.

Goal Misgeneralization: How a Tiny Change Could End Everything (YouTube)

This video explores how YOU, YES YOU, are a case of misalignment with respect to evolution’s implicit optimization objective. We also show an example of goal misgeneralization in a simple AI system, and explore how deceptive alignment shares similar features and may arise in future, far more powerful AI systems.