The surveillance society

All-seeing like a state

February 8, 2017 — March 19, 2020

1 Corporate surveillance

The Quantified Other or How I help Facebook learn enough to replace me with Danbots

Big data, pre-existing conditions, the pan*icon, and the messy politics of monetising the confidential information of the masses for the benefit of the powerful. This is mostly opinion pieces; for practical info see Confidentiality, a guide to having it. Social surveillance as the new pollution.

Corporate Surveillance in Everyday Life:

Report: How thousands of companies monitor, analyse, and influence the lives of billions. Who are the main players in today’s digital tracking? What can they infer from our purchases, phone calls, web searches, and Facebook likes? How do online platforms, tech companies, and data brokers collect, trade, and make use of personal data?

Jathan Sadowski, Draining the Risk Pool, on the bloody edge the insurers have in this.

Back in 2002, legal scholars Tom Baker and Jonathan Simon argued that “within a regime of liberal governance, insurance is one of the greatest sources of regulatory authority over private life.” The industry’s ability to record, analyse, discipline, and punish people may in some instances surpass the power of government agencies. Jump nearly two decades in the future and insurance companies’ powers have only grown. As insurers embrace the whole suite of “smart” systems … — networked devices, digital platforms, data extraction, algorithmic analysis — they are now able to intensify those practices and implement new techniques, imposing more direct pressure on private lives.

TBD: discuss this connection with model fairness, and the utilitarian trade-offs of hyper-fit people with good genes and no need for privacy getting affordable insurance, while people with private lives, meth habits and cancer genes all land in the same “too expensive” box.

Rob Horning, in The house we live in, analyses the ecology of platform surveillance, trust and reciprocity.

Platforms don’t want to build trust; they want to monopolize it. The whole point is to allow for encounters that don’t require interpersonal trust, because platforms support an infrastructure that makes trust irrelevant. Platforms posit human behaviour not as contingent and relational but individuated, behaviouristic, and predictable. All you need is to collect data about people; this is sufficient to control and contain their interactions with each other in the aggregate over time. […]

It is in a platform’s interest that people find they can’t get along, can’t communicate, can’t resolve their issues. This strengthens their demand for a third-party mediator. So a platform will do what it can to make its rating systems put parties at cross-purposes.

- The Age of Surveillance Capitalism, summarised by Daniel Miessler

- Palantir Knows Everything About You

- Social Cooling: an over-surveilled society is a risk-averse conformist society

Eric Limer, Here’s a Chilling Glimpse of the Privacy-Free Future:

Microsoft is able to index people and things in a room in real time. What that means, practically, is that if you can point a camera at it, you can search it. […]

Once you can identify people and objects by feeding the computers images of Bob and jackhammers so they can learn what each of those things look like, you can start applying a framework of rules and triggers on top of the real world. Only [Certified Employees] can carry the [Jackhammer] and [Bob] is a [Certified Employee] so [Bob] is allowed to carry the [Jackhammer]. The limits to what kind of rules you can make are effectively arbitrary. […]

The privacy implications, which Microsoft didn’t venture to mention on stage, are chilling even in a hospital or factory floor or other workplace. Yes, systems like this could ensure no patient collapses on a floor out of sight or that new hires aren’t juggling chainsaws for fun. But it also would make it trivial to pull up statistics on how any employee spends her day—down to the second. Even if it is ostensibly about efficiency, this sort of data can betray all sorts of private information like health conditions or employees’ interpersonal relationships, all that with incredible precision and at a push of a button. And if the system’s not secure from outside snooping? […]
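The “rules and triggers” layer that Limer describes is easy to picture as code. What follows is a minimal sketch, not a description of Microsoft's system: the `Sighting` and `Policy` types, the `violations` helper, and the extra labels (`Alice`, `Coffee`) are all hypothetical, and the hard part, recognising people and objects in video, is assumed to have happened already.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    """One camera detection: who was seen holding what (hypothetical labels)."""
    person: str
    item: str


@dataclass
class Policy:
    # item label -> role required to carry that item
    required_role: dict
    # person label -> set of roles that person holds
    roles: dict

    def is_allowed(self, sighting: Sighting) -> bool:
        needed = self.required_role.get(sighting.item)
        if needed is None:
            return True  # no rule covers this item
        return needed in self.roles.get(sighting.person, set())


def violations(policy: Policy, sightings: list) -> list:
    """Return every sighting that breaks a rule, in the order it was seen."""
    return [s for s in sightings if not policy.is_allowed(s)]


policy = Policy(
    required_role={"Jackhammer": "Certified Employee"},
    roles={"Bob": {"Certified Employee"}, "Alice": set()},
)
sightings = [
    Sighting("Bob", "Jackhammer"),
    Sighting("Alice", "Jackhammer"),
    Sighting("Alice", "Coffee"),
]
flagged = violations(policy, sightings)
print(flagged)
```

The rule check itself is a couple of dictionary lookups, which is rather the point: once the sightings exist, the same list can be grouped by person just as cheaply to reconstruct anyone's day.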

Chris Tucchio, Why you can’t have privacy on the internet:

A special case of fraud which also relates to the problem of paying for services with advertising is display network fraud. Here’s how it works. I run “My Cool Awesome Website About Celebrities”, and engage in all the trappings of a legitimate website — creating content, hiring editors, etc. Then I pay some kids in Ukraine to build bots that browse the site and click the ads. Instant money, at the expense of the advertisers. To prevent this, the ad network demands the ability to spy on users in order to distinguish between bots and humans.

Facebook will engineer your social life.

Can you even get off Facebook without getting all your friends off it?

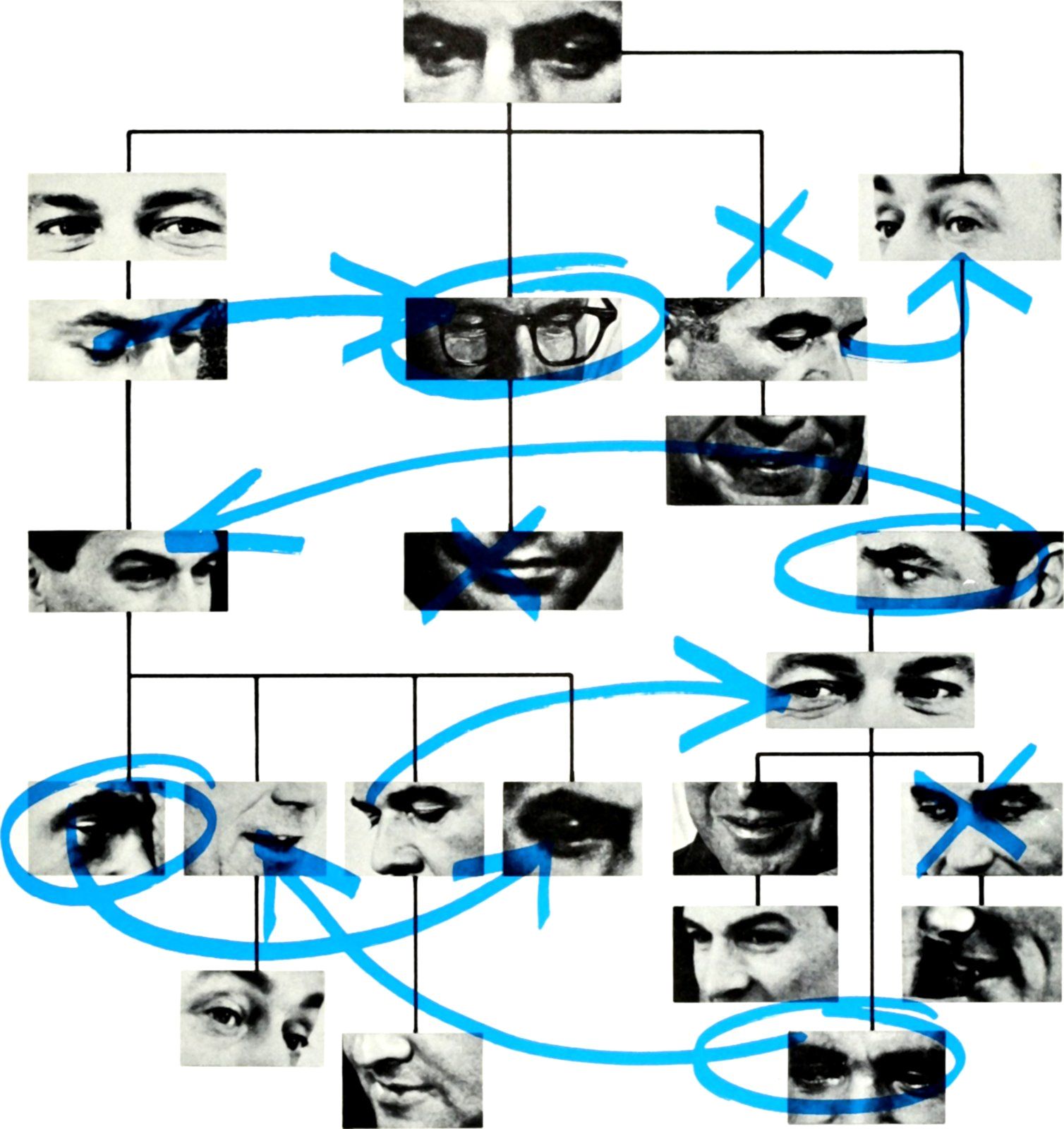

Connections like these seem inexplicable if you assume Facebook only knows what you’ve told it about yourself. They’re less mysterious if you know about the other file Facebook keeps on you—one that you can’t see or control.

Behind the Facebook profile you’ve built for yourself is another one, a shadow profile, built from the inboxes and smartphones of other Facebook users. Contact information you’ve never given the network gets associated with your account, making it easier for Facebook to more completely map your social connections.
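To make the shadow-profile idea concrete, here is a toy sketch of how uploaded address books could attach contact details to someone who never supplied them. It is an illustration under stated assumptions, not Facebook's actual method: the `build_shadow_profiles` function, the user names and the contact details are all invented for the example.

```python
from collections import defaultdict


def build_shadow_profiles(uploads):
    """Map each contact detail to the set of users whose address books hold it.

    `uploads` is a hypothetical mapping of uploader -> list of contact details
    (emails, phone numbers) found on that uploader's phone or inbox.
    """
    shadow = defaultdict(set)
    for uploader, contacts in uploads.items():
        for detail in contacts:
            shadow[detail].add(uploader)
    return dict(shadow)


# Invented data: "dana" never uploads anything herself.
uploads = {
    "alice": ["dana@example.org", "+61-555-0100"],
    "bob": ["dana@example.org"],
    "carol": ["+61-555-0100", "erin@example.org"],
}
shadow = build_shadow_profiles(uploads)
print(sorted(shadow["dana@example.org"]))
```

Nothing in `uploads` came from Dana, yet the result already says that Alice and Bob both know her, which is enough to suggest that Alice and Bob might know each other.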

Vicki Boykis, Facebook is collecting this:

Facebook data collection potentially begins before you press “POST”. As you are crafting your message, Facebook collects your keystrokes.

Facebook has previously used this data to study self-censorship […]

Meaning, that if you posted something like, “I just HATE my boss. He drives me NUTS,” and at the last minute demurred and wrote something like, “Man, work is crazy right now,” Facebook still knows what you typed before you hit delete.

For a newer and poetic backgrounder on this kind of stuff, read Amanda K. Green on Data Sweat, a.k.a. Digital exhaust.

This diffuse, somewhat enigmatic subset of the digital footprint is composed primarily of metadata about seemingly minor and passive online interactions. As Viktor Mayer-Schönberger and Kenneth Cukier write, it includes “where [users] click, how long they look at a page, where the mouse-cursor hovers, what they type, and more.” While at first glance this type of data may appear divorced from our interior, personal lives, it is actually profoundly embodied in even deeper ways than many of the things we intentionally publish — it is inadvertently “shed as a byproduct of peoples’ actions and movements in the world,” as opposed to being intentionally broadcast.

In other words, digital exhaust is shaped by unconscious, embodied affects — the lethargy of depression seeping into slow cursor movements, frustration in rapid swipes past repeated advertisements, or a brief moment of pleasure spent lingering over a striking image.

Or, more concretely, Camilla Cannon on the vocal surveillance in the call centre industry:

But vocal-tone-analysis systems claim to move beyond words to the emotional quality of the customer-agent interaction itself.

It has long been a management goal to rationalize and exploit this relationship, and extract a kind of affective surplus. In The Managed Heart, sociologist Arlie Hochschild described this quest as “the commercialisation of human feeling,” documenting how “emotional labour” is demanded from employees and the toll that demand takes on them. Sometimes also referred to as the “smile economy,” this quintessentially American demand for unwavering friendliness, deference, and self-imposed tone regulation from workers is rooted in an almost religious conviction that positive experiences are a customer’s inalienable right.

The Trust Engineers is a chin-stroking public radio show about how Facebook researches people. If you project it forward 10 years, this should evoke pants-shitting grade dystopia, when epistemic communities are manufactured to order by an unaccountable corporation in the interests of whomever.

Anand Giridharadas, Deleting Facebook Won’t Fix the Problem:

When we tell people to get off the platform, we recast a political issue as a willpower issue.

Left field and pretty, Listening back is a Chrome extension that turns the cookies that websites use to track you into a weird symphony.

2 State surveillance

Somewhere between lawlessness and a Stasi reign of terror there might exist a sustainable respect of citizen privacy by the state. Are we having the discourse we need at the moment to discover that?

For practical info see Confidentiality, a guide to having it.

Mass control of the Uighur minority in Xinjiang is a probe into the future of possible surveillance states.

Our cost-cutting institutions may be leaking our info. As Nils Gilman, Jesse Goldhammer, and Steven Weber put it: can you secure an iron cage?

Snowden:

Arguing that you don’t care about the right to privacy because you have nothing to hide is no different than saying you don’t care about free speech because you have nothing to say.

Aaron Titus:

I have much to hide, for one simple reason. I cannot trust people to act reasonably or responsibly when they are in possession of certain facts about me, even if I am not ashamed of those facts. For example, I keep my social security number private from a would-be criminal, because I can’t trust that he’ll act responsibly with the information. I’m certainly not ashamed of my SSN. Studies have shown that cancer patients lose their jobs at five times the rate of other employees, and employers tend to overestimate cancer patients’ fatigue. Cancer patients need privacy to avoid unreasonable and irresponsible employment decisions. Cancer patients aren’t ashamed of their medical status; they just need to keep their jobs.

-

Over the past year, there have been a number of headline-grabbing legal changes in the U.S., such as the legalisation of marijuana in Colorado and Washington, as well as the legalisation of same-sex marriage in a growing number of U.S. states.

As a majority of people in these states apparently favour these changes, advocates for the U.S. democratic process cite these legal victories as examples of how the system can provide real freedoms to those who engage with it through lawful means. And it’s true, the bills did pass.

What’s often overlooked, however, is that these legal victories would probably not have been possible without the ability to break the law.

The state of Minnesota, for instance, legalised same-sex marriage this year, but sodomy laws had effectively made homosexuality itself completely illegal in that state until 2001. Likewise, before the recent changes making marijuana legal for personal use in Washington and Colorado, it was obviously not legal for personal use.

Imagine if there were an alternate dystopian reality where law enforcement was 100% effective, such that any potential law offenders knew they would be immediately identified, apprehended, and jailed. If perfect law enforcement had been a reality in Minnesota, Colorado, and Washington since their founding in the 1850s, it seems quite unlikely that these recent changes would have ever come to pass. How could people have decided that marijuana should be legal, if nobody had ever used it? How could states decide that same sex marriage should be permitted, if nobody had ever seen or participated in a same sex relationship?

[…] The more fundamental problem, however, is that living in an existing social structure creates a specific set of desires and motivations in a way that merely talking about other social structures never can. The world we live in influences not just what we think, but how we think, in a way that a discourse about other ideas isn’t able to. Any teenager can tell you that life’s most meaningful experiences aren’t the ones you necessarily desired, but the ones that actually transformed your very sense of what you desire.

We can only desire based on what we know. It is our present experience of what we are and are not able to do that largely determines our sense of what is possible. This is why same-sex relationships, in violation of sodomy laws, were a necessary precondition for the legalization of same-sex marriage. This is also why those maintaining positions of power will always encourage the freedom to talk about ideas, but never to act.

Stewart Baker asks How Long Will Unbreakable Commercial Encryption Last?

-

…a simple metric I use to assess the claims put forth by wannabe surveillers: simply relocate the argument from cyber- to meatspace, and see how it holds up. For example, Leslie Caldwell’s forebodings about online “zones of lawlessness” would be rendered thusly:

Caldwell also raised fresh alarms about curtains on windows and locks on bathroom doors, both of which officials say make it easier for criminals to hide their activity. “Bathroom doors obviously were created with good intentions, but are a huge problem for law enforcement. There are a lot of windowless basements and bathrooms where you can do anything from purchase heroin to buy guns to hire somebody to kill somebody.”

John D. Cook, A statistical problem with “nothing to hide”.

-

Admiral Michael Rogers, head of NSA and U.S. Cyber Command, described the Russian efforts as “a conscious effort by a nation state to attempt to achieve a specific effect” (Boccagno 2016). The former director of NSA and subsequently CIA, General Michael Hayden, argued, in contrast, that the massive Chinese breach of records at the U.S. Office of Personnel Management was “honourable espionage work” of a “legitimate intelligence target”.

An interesting case study in modern surveillance technology, wherein the Tokyo cops can monitor who goes where via CCTV.

Steven Feldstein’s report The Global Expansion of AI Surveillance:

presents an AI Global Surveillance (AIGS) Index—representing one of the first research efforts of its kind. The index compiles empirical data on AI surveillance use for 176 countries around the world.

Such tidbits as

- China is a major driver of AI surveillance worldwide. Technology linked to Chinese companies—particularly Huawei, Hikvision, Dahua, and ZTE—supply AI surveillance technology in sixty-three countries, thirty-six of which have signed onto China’s Belt and Road Initiative (BRI). Huawei alone is responsible for providing AI surveillance technology to at least fifty countries worldwide. No other company comes close. The next largest non-Chinese supplier of AI surveillance tech is Japan’s NEC Corporation (fourteen countries).

- Chinese product pitches are often accompanied by soft loans to encourage governments to purchase their equipment. These tactics are particularly relevant in countries like Kenya, Laos, Mongolia, Uganda, and Uzbekistan—which otherwise might not access this technology. This raises troubling questions about the extent to which the Chinese government is subsidising the purchase of advanced repressive technology.

- But China is not the only country supplying advanced surveillance tech worldwide. U.S. companies are also active in this space. AI surveillance technology supplied by U.S. firms is present in thirty-two countries. The most significant U.S. companies are IBM (eleven countries), Palantir (nine countries), and Cisco (six countries). Other companies based in liberal democracies—France, Germany, Israel, Japan—are also playing important roles in proliferating this technology. Democracies are not taking adequate steps to monitor and control the spread of sophisticated technologies linked to a range of violations.

- Liberal democracies are major users of AI surveillance. The index shows that 51 percent of advanced democracies deploy AI surveillance systems. In contrast, 37 percent of closed autocratic states, 41 percent of electoral autocratic/competitive autocratic states, and 41 percent of electoral democracies/illiberal democracies deploy AI surveillance technology. Governments in full democracies are deploying a range of surveillance technology, from safe city platforms to facial recognition cameras. This does not inevitably mean that democracies are abusing these systems. The most important factor determining whether governments will deploy this technology for repressive purposes is the quality of their governance.

Alexa O’Brien summarises: Retired NSA Technical Director Explains Snowden Docs.

3 Peer surveillance

Keli Gabinelli, in Digital alarm systems increase homeowners’ sense of security by fomenting fear, argues that our peer surveillance systems may not police the norms we would like them to:

Fear — mediated and regulated through moral norms — acts as a “cultural metaphor to express claims, concerns, values, moral outrage, and condemnation,” argues sociologist and contemporary fear theorist Frank Furedi. We process fear through a prevailing system of meaning that mediates and informs people about what is expected of them when confronted with a threat. It also poses a threat in and of itself, where simply feeling fear, or feeling insecure, equates to threat and insecurity. Ring, then, is a performative strategy to manage fears related to this sense of vulnerability. Despite posing itself as a tool to “bring communities together to create safer neighbourhoods,” it offers hardly any concrete opportunities to connect and bridge those divisions through anything but increased fear. Anonymous neighbours sit behind a screen with their own personal sets of anxieties, and map those beliefs onto common enemies defined by structural prejudices, siloing themselves into a worldview that conveniently attends to their own biases.

Caroline Haskins, How Ring Went From ‘Shark Tank’ Reject to America’s Scariest Surveillance Company:

When police partner with Ring, they are required to promote its products, and to allow Ring to approve everything they say about the company. In exchange, they get access to Ring’s Law Enforcement Neighbourhood Portal, an interactive map that allows police to request camera footage directly from residents without obtaining a warrant.

Robin Hanson’s hypocralypse is a characteristically nice rebranding of affective computing. This considers that surveillance-as-transparency might be a problem between peers also, as opposed to the classic asymmetrical problem of the elites monitoring the proles.

within a few decades, we may see something of a “hypocrisy apocalypse”, or “hypocralypse”, wherein familiar ways to manage hypocrisy become no longer feasible, and collide with common norms, rules, and laws. […]

Masked feelings also help us avoid conflict with rivals at work and in other social circles. […] Tech that unmasks feelings threatens to weaken the protections that masked feelings provide. That big guy in a rowdy bar may use new tech to see that everyone else there can see that you despise him, and take offense. Your bosses might see your disrespect for them, or your scepticism regarding their new initiatives. Your church could see that you aren’t feeling very religious at church service. Your school and nation might see that your pledge of allegiance was not heart-felt.

4 Incoming

- A quick guide to asking Cambridge Analytica for your data

- Confessions of a data broker and other tales of a quantified society.

- Laura Northrup: Police Charge Arson Suspect Based On Records From His Pacemaker

- Guilty before trial.

- Why we live in a dystopia even Orwell couldn’t have envisioned.

This kind of thing is tricky. How do you stop friends with crappy privacy hygiene from exposing you? Privacy is a weakest-link kind of concept, and as long as Facebook can rely on a reasonable fraction of the population voluntarily and unconsciously selling the rest out, we are all compromised. I know that everything I do in front of such a friend will be obediently tagged and put on public display for the use of not only Facebook but any passing mobster, data miner or insurance company. It is not good enough for privacy-violating companies to get away with it merely because experts could, in principle, avoid some of the pitfalls. Social media is a habit-forming drug that potentially transmits ailments such as credit-score risk, misinformation and confidential data breaches.
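A back-of-envelope calculation shows how quickly the weakest link dominates. This is a sketch under a strong and admittedly unrealistic assumption: each friend independently leaks your information with the same probability. The function name and the 5% figure are made up for illustration.

```python
def exposure_probability(n_friends: int, p_leak: float) -> float:
    """Chance that at least one of n friends leaks, assuming independent leaks."""
    # P(nobody leaks) = (1 - p)^n, so P(someone leaks) is the complement.
    return 1.0 - (1.0 - p_leak) ** n_friends


# Hypothetical numbers: each friend has a 5% chance of exposing you.
for n in (1, 10, 50, 150):
    print(n, round(exposure_probability(n, 0.05), 3))
```

Under these assumptions, 50 friends who are each 95% careful still leave you with a better than 90% chance of being exposed by someone. Personal diligence barely moves that number; only the behaviour of the whole network does.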

Is it particularly consistent to regulate, say, alcohol, tobacco and gambling but not social media usage?

This is leaving aside the question of companies who sell your information no matter what you do or government-business alliances that accidentally leak your information.

Anyway, with blame for the abuse appropriately apportioned to the predators, let’s get back to what we, the victims, can do by taking what responsibility is available to us to take, for all that it should not be required of us.