Interpolation, extrapolation and memorisation in neural networks

January 24, 2022 — January 7, 2025

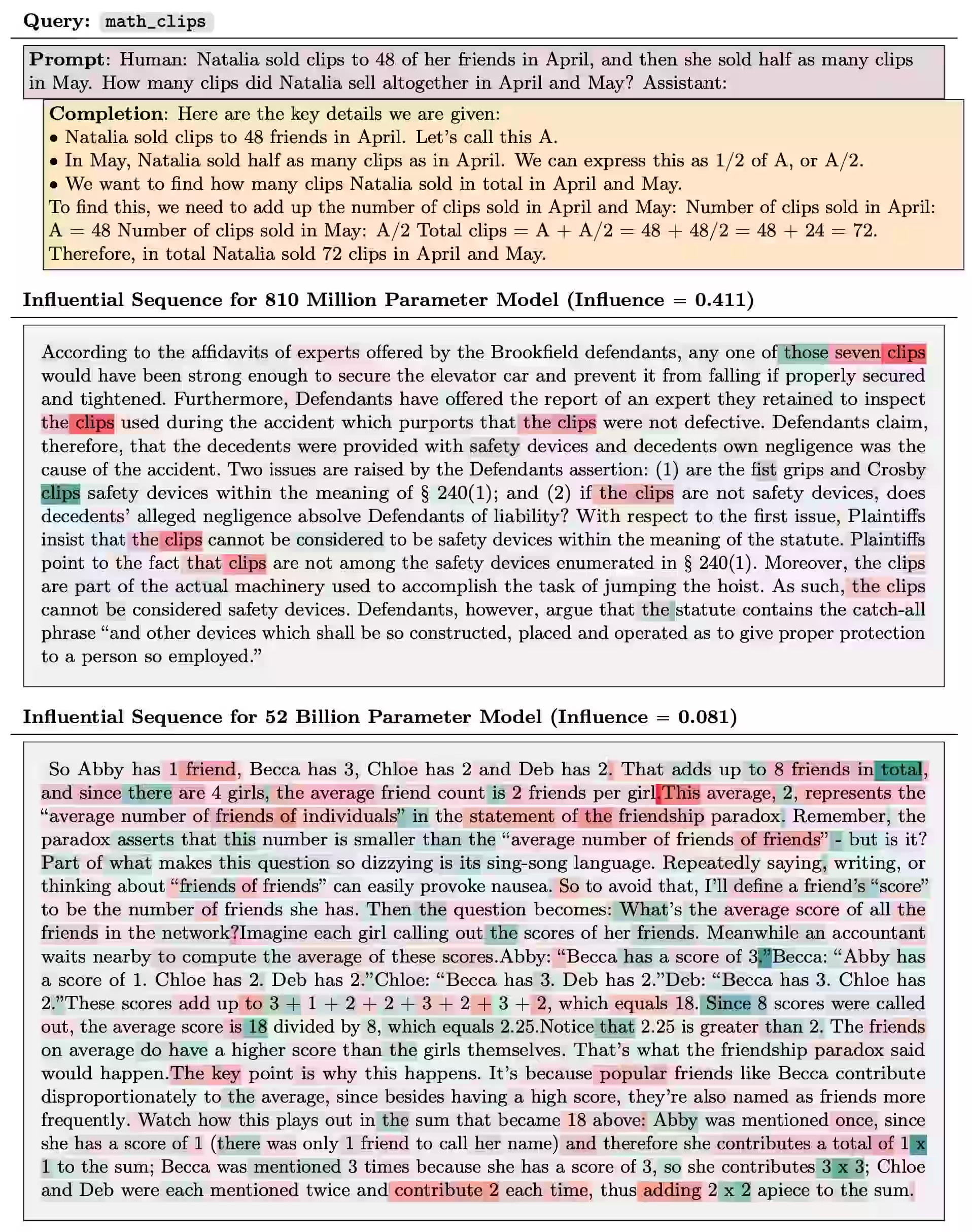

Notes on interpreting models in terms of their ability to interpolate and memorise, and on when that ability becomes extrapolation to new situations.

Put another way: can we train models to be smarter than any of the examples they were trained upon? If so, when?

This connects to neural scaling, overparameterization, and possibly singular learning theory.

Keywords: grokking, transcendence (E. Zhang et al. 2024), and plain old generalisation. Sometimes the generalisation happens with interesting dynamics.

Balestriero, Pesenti, and LeCun (2021):

The notion of interpolation and extrapolation is fundamental in various fields from deep learning to function approximation. Interpolation occurs for a sample x whenever this sample falls inside or on the boundary of the given dataset’s convex hull. Extrapolation occurs when x falls outside of that convex hull. One fundamental (mis)conception is that state-of-the-art algorithms work so well because of their ability to correctly interpolate training data. A second (mis)conception is that interpolation happens throughout tasks and datasets, in fact, many intuitions and theories rely on that assumption. We empirically and theoretically argue against those two points and demonstrate that on any high-dimensional (>100) dataset, interpolation almost surely never happens. Those results challenge the validity of our current interpolation/extrapolation definition as an indicator of generalization performances.
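To make the convex-hull definition in the abstract concrete, here is a minimal sketch that tests whether a point lies in the convex hull of a dataset. It is not the authors' method: I approximate the distance from the point to the hull with a Frank-Wolfe iteration in pure Python, where an exact check would solve a linear feasibility problem. The names `squared_distance_to_hull`, `in_hull` and `hull_fraction`, the tolerance and the Gaussian toy data are all assumptions for illustration.

```python
import random


def squared_distance_to_hull(points, x, iters=500):
    """Approximate min ||y - x||^2 over y in conv(points) by Frank-Wolfe."""
    y = list(points[0])  # start at a data point, which is certainly in the hull
    for _ in range(iters):
        grad = [yi - xi for yi, xi in zip(y, x)]  # gradient of 0.5 * ||y - x||^2
        # Linear minimisation step: the data point with the smallest
        # inner product with the gradient.
        s = min(points, key=lambda p: sum(g * pi for g, pi in zip(grad, p)))
        direction = [si - yi for si, yi in zip(s, y)]
        denom = sum(d * d for d in direction)
        if denom == 0.0:
            break
        # Exact line search along the segment from y to s, clipped to [0, 1].
        step = -sum(g * d for g, d in zip(grad, direction)) / denom
        step = max(0.0, min(1.0, step))
        if step == 0.0:
            break  # no descent direction left, so y is (numerically) optimal
        y = [yi + step * d for yi, d in zip(y, direction)]
    return sum((yi - xi) ** 2 for yi, xi in zip(y, x))


def in_hull(points, x, tol=1e-6):
    """Interpolation in the convex-hull sense: x is (approximately) in the hull."""
    return squared_distance_to_hull(points, x) <= tol


def hull_fraction(dim, n_train=100, n_test=20, seed=0):
    """Fraction of fresh Gaussian samples that land inside the training hull."""
    rng = random.Random(seed)
    train = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_train)]
    tests = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_test)]
    return sum(in_hull(train, t) for t in tests) / n_test
```

Comparing `hull_fraction(2)` with `hull_fraction(20)` gives a rough feel for the claim: one would expect the fraction to shrink as `dim` grows, although this toy approximation is no substitute for the experiments in the paper.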

Bubeck and Sellke (2021) argue:

Classically, data interpolation with a parametrized model class is possible as long as the number of parameters is larger than the number of equations to be satisfied. A puzzling phenomenon in the current practice of deep learning is that models are trained with many more parameters than what this classical theory would suggest. We propose a theoretical explanation for this phenomenon. We prove that for a broad class of data distributions and model classes, overparameterization is necessary if one wants to interpolate the data smoothly. Namely we show that smooth interpolation requires \(d\) times more parameters than mere interpolation, where \(d\) is the ambient data dimension. We prove this universal law of robustness for any smoothly parametrized function class with polynomial size weights, and any covariate distribution verifying isoperimetry. In the case of two-layer neural networks and Gaussian covariates, this law was conjectured in prior work by Bubeck, Li and Nagaraj. We also give an interpretation of our result as an improved generalization bound for model classes consisting of smooth functions.
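A back-of-envelope sketch of what "\(d\) times more parameters" means in practice. The parameter counts follow the abstract directly. The \(\sqrt{nd/p}\) scale for the Lipschitz constant is my recollection of the paper's main theorem (up to constants and logarithmic factors) and should be read as an assumption, as should the function names and the MNIST-sized numbers in the usage note.

```python
import math


def params_for_mere_interpolation(n):
    """Classical count: one parameter per equation to be satisfied."""
    return n


def params_for_smooth_interpolation(n, d):
    """Abstract's claim: smooth interpolation needs d times more parameters."""
    return n * d


def lipschitz_lower_bound_scale(n, d, p):
    """Assumed scaling sqrt(n * d / p), ignoring constants and log factors."""
    return math.sqrt(n * d / p)
```

For instance, with `n = 60000` samples in `d = 784` dimensions (illustrative numbers), `params_for_smooth_interpolation(60000, 784)` gives about 47 million parameters, against 60 thousand for mere interpolation. Under the assumed scaling, a model with only `p = n` parameters would have a Lipschitz scale of `sqrt(d) = 28`, which falls to 1 at `p = n * d`.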

There are other works in this domain (Le 2018; Ma, Bassily, and Belkin 2018; C. Zhang et al. 2017, 2021).

I am not sure whether this is distinct from other double descent phenomena. Hastie et al. (2020) suggest perhaps not:

Interpolators — estimators that achieve zero training error — have attracted growing attention in machine learning, mainly because state-of-the-art neural networks appear to be models of this type. In this paper, we study minimum \(\ell_2\) norm (“ridgeless”) interpolation in high-dimensional least squares regression. We consider two different models for the feature distribution: a linear model, where the feature vectors \(x_i \in {\mathbb R}^p\) are obtained by applying a linear transform to a vector of i.i.d. entries, \(x_i = \Sigma^{1/2} z_i\) (with \(z_i \in {\mathbb R}^p\)); and a nonlinear model, where the feature vectors are obtained by passing the input through a random one-layer neural network, \(x_i = \varphi(W z_i)\) (with \(z_i \in {\mathbb R}^d\), \(W \in {\mathbb R}^{p \times d}\) a matrix of i.i.d. entries, and \(\varphi\) an activation function acting componentwise on \(W z_i\)). We recover — in a precise quantitative way — several phenomena that have been observed in large-scale neural networks and kernel machines, including the “double descent” behaviour of the prediction risk, and the potential benefits of overparameterization.
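For concreteness, here is a small sketch of the minimum \(\ell_2\) norm interpolator the abstract studies. When the design matrix \(X\) has full row rank (more features than samples), it is \(\hat\beta = X^\top (X X^\top)^{-1} y\). The code uses only the standard library to stay dependency-free; in practice `numpy.linalg.pinv(X) @ y` computes the same thing. The helper names are mine, and the sketch does not reproduce the paper's risk analysis.

```python
def solve(A, b):
    """Solve the square system A z = b by Gaussian elimination with pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(M[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (M[i][n] - tail) / M[i][i]
    return z


def min_norm_interpolator(X, y):
    """Return beta = X^T (X X^T)^{-1} y; assumes X has full row rank (p >= n)."""
    n, p = len(X), len(X[0])
    gram = [[sum(X[i][k] * X[j][k] for k in range(p)) for j in range(n)]
            for i in range(n)]
    alpha = solve(gram, y)
    return [sum(alpha[i] * X[i][k] for i in range(n)) for k in range(p)]


def predict(X, beta):
    """Linear predictions X beta."""
    return [sum(xk * bk for xk, bk in zip(row, beta)) for row in X]
```

With one sample `X = [[1.0, 1.0]]` and `y = [2.0]`, infinitely many coefficient vectors fit the data exactly, for example `[2.0, 0.0]`. The minimum-norm choice spreads the weight evenly across the two features.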

E. Zhang et al. (2024):

Generative models are trained with the simple objective of imitating the conditional probability distribution induced by the data they are trained on. Therefore, when trained on data generated by humans, we may not expect the artificial model to outperform the humans on their original objectives. In this work, we study the phenomenon of transcendence: when a generative model achieves capabilities that surpass the abilities of the experts generating its data. We demonstrate transcendence by training an autoregressive transformer to play chess from game transcripts, and show that the trained model can sometimes achieve better performance than all players in the dataset. We theoretically prove that transcendence can be enabled by low-temperature sampling, and rigorously assess this claim experimentally. Finally, we discuss other sources of transcendence, laying the groundwork for future investigation of this phenomenon in a broader setting.
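The low-temperature mechanism can be illustrated with a toy sketch, which is my own construction and not the paper's chess setup. Two hypothetical experts each play the good move half the time but make different mistakes. A model that imitates their mixture does no better than either expert at temperature 1. Sharpening the mixture with a low temperature concentrates the mass on the move the experts agree on.

```python
import math


def mixture(expert_policies):
    """Uniform mixture of expert move distributions (what an imitator learns)."""
    k = len(expert_policies)
    n_moves = len(expert_policies[0])
    return [sum(policy[m] for policy in expert_policies) / k
            for m in range(n_moves)]


def apply_temperature(probs, tau):
    """Rescale a distribution as p_i^(1/tau), normalised; tau < 1 sharpens it."""
    logits = [math.log(p) / tau if p > 0 else float("-inf") for p in probs]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(logit - top) for logit in logits]
    total = sum(weights)
    return [w / total for w in weights]


# Moves: index 0 is the good move; indices 1 and 2 are distinct blunders.
experts = [
    [0.5, 0.5, 0.0],  # hypothetical expert A blunders with move 1
    [0.5, 0.0, 0.5],  # hypothetical expert B blunders with move 2
]
imitator = mixture(experts)
sharpened = apply_temperature(imitator, tau=0.1)
```

Here `imitator[0]` equals each expert's probability of playing the good move, while `sharpened[0]` is close to 1: the errors average out because the experts fail in different ways.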

Their case study is, yes, chess.

1 Grokking

Neel Nanda and Tom Lieberum, A Mechanistic Interpretability Analysis of Grokking:

Grokking (Power et al. 2022) is a recent phenomenon discovered by OpenAI researchers that, in my opinion, is one of the most fascinating mysteries in deep learning: models trained on small algorithmic tasks like modular addition will initially memorise the training data, but after a long time will suddenly learn to generalise to unseen data.

This is a write-up of an independent research project I did into understanding grokking through the lens of mechanistic interpretability. My most important claim is that grokking has a deep relationship to phase changes. Phase changes, i.e. a sudden change in the model’s performance for some capability during training, are a general phenomenon that occurs when training models, and have also been observed in large models trained on non-toy tasks. For example, the sudden change in a transformer’s capacity to do in-context learning when it forms induction heads. In this work I examine several toy settings where a model trained to solve them exhibits a phase change in test loss, regardless of how much data it is trained on. I show that if a model is trained on these limited data with high regularisation, then the model shows grokking.
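As a sketch of the kind of "small algorithmic task" in question, the following builds a complete modular addition dataset and holds out most of it, so that a model trained on the small remainder can be scored on genuinely unseen pairs. The modulus 113 and the 30% training fraction are illustrative assumptions, not values taken from the text above, and the function names are hypothetical.

```python
import random


def modular_addition_dataset(p):
    """All p * p examples of the task (a, b) -> (a + b) mod p."""
    return [((a, b), (a + b) % p) for a in range(p) for b in range(p)]


def train_test_split(examples, train_fraction, seed=0):
    """Shuffle reproducibly and hold out everything past the training cut."""
    rng = random.Random(seed)
    shuffled = examples[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Assumed values for illustration: modulus 113, 30% of all pairs for training.
data = modular_addition_dataset(113)
train, test = train_test_split(data, 0.3)
```

In a grokking experiment one would track loss on `train` and `test` separately over a long training run; the signature described above is training loss falling early while test loss stays high for a long time before dropping suddenly.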