Memetics

Taste dynamics, opinion dynamics, sincerely-held-belief dynamics etc

January 29, 2020 — May 14, 2022

Placeholder for the complex case of diffusion of innovation where the innovations in question are beliefs. Which beliefs prosper and which fail? How much do social dynamics determine how much a belief prospers? How much does factuality? How much earthy folk wisdom?

1 Innovation diffusion theory

Models such as the Bass diffusion model impose an epidemiological structure on the contagion of products, with a survival analysis flavour. See innovation diffusion.
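As a minimal sketch of that epidemiological structure: in the standard Bass formulation, each not-yet-adopter has a hazard of adopting equal to p + q·F(t), where F(t) is the fraction who have already adopted. The names `p` (spontaneous "innovation") and `q` (social "imitation"), the function names, and the example values are illustrative assumptions, not taken from this notebook.

```python
import math


def bass_hazard(adopted_fraction, p, q):
    """Adoption hazard for someone who has not adopted yet: p + q * F."""
    return p + q * adopted_fraction


def bass_cumulative(t, p, q):
    """Closed-form cumulative adoption fraction F(t), with F(0) = 0."""
    decay = math.exp(-(p + q) * t)
    return (1.0 - decay) / (1.0 + (q / p) * decay)


def simulate_bass(p, q, steps, dt=0.01):
    """Euler integration of dF/dt = (p + q * F) * (1 - F), starting at F = 0."""
    adopted = 0.0
    path = [adopted]
    for _ in range(steps):
        adopted += dt * bass_hazard(adopted, p, q) * (1.0 - adopted)
        path.append(adopted)
    return path


if __name__ == "__main__":
    # Hypothetical parameter values, chosen only for illustration.
    p, q = 0.03, 0.38
    for year in (1, 5, 10, 20):
        print(year, round(bass_cumulative(year, p, q), 3))
```

The survival-analysis flavour shows up in `bass_hazard`: the model is specified through the hazard of "dying" out of the non-adopter population, and social contagion enters only through the `q * adopted_fraction` term.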

2 Invasive arguments

In ecology, we have invasive species. In rhetoric, we have invasive arguments. I am collecting a library of these.

3 Belief

Is belief related to true states of the world, or is it a pure signifier of group membership, or something else again? Surely belief is a complicated phenomenon, but working out which mix of causes underlies any particular belief is difficult. The experience of having a belief is the same, as seen from inside our skulls, regardless of what causes it.

3.1 The function of belief in individuals

David Banks’s diatribe depicts a particular kind of strategic belief:

“[Radiolab recasts] the political as endlessly unresolved scientific controversies, and act as science concern trolls,” he claims. These “explainerist” nuggets of satisfying factiness — why are they popular? One answer might be that they are a good marker of membership in a tribe that likes a certain kind of cocktail conversation.

What kind of beliefs prosper in society? What is the function of our truth claims? When should you believe “true” things, and what are true things anyway? Are true things about the objects of science the same as true things about society?

Goal: find a way of navigating the pragmatic functions of belief that sidesteps the divisions in this anecdote:

I know this sounds like a story from some bad conservative novel, but it is not unheard of for rooms full of PhDs to applaud when someone says that, for example, witchcraft is just another way of knowledge and that disputing factual claims to its power is cultural hegemony.

To my ears, it’s the emphases that make this sound uncomfortable, rather than the broad-stroke outline. On one hand, I think that empirical fact is special in having a reality independent of human existence. On the other hand, I don’t suppose any of our epistemological methods give us perfect access to the reality I posit. Having claimed my beliefs are not, with 100% certainty, raw and unmediated rays of truth, I have opened the door to negotiating how certain my beliefs are, and admitting that other perspectives might have a point that I cannot dismiss a priori. I am all for admitting that our beliefs are uncertain and our categories subject to revision, otherwise why would I bother with statistics, which is my day job? Any specific claim generated by witchcraft is going to achieve a pretty low prior weighting in my decisions, however.

Also, how about beliefs that are not about facts as such? Does human knowledge transmission at large deal mostly in transmission of precise factual claims about reproducible experiments, or is there a whole bunch of other stuff going on with an indirect relationship to facts about gross physical reality, and some kind of active role in creating whatever passes for facts in the negotiated social reality?

Option B. We need the tools to unpack the other propensities in the uses of the language around belief, and disentangle what is going on with cheap talk and signalling. We do deploy belief in a variety of ways, often emotional, often figurative. 1

How good are we at forming good facty beliefs? Scott Alexander found that the irritating case study of bodybuilders suggests… tl;dr: we are not very good at facty beliefs?

Antonio García Martínez, in The Holy Church of Christ Without Christ, belabours the point that faith-based engagement is predominantly how we engage with the world. Or, as Herbert Simon and Eliezer Yudkowsky could have co-authored, belief is how a heuristic feels from the inside.

3.2 Belief and group identity

4 Elite capture

See collective action.

5 Girardian mimetic violence

That’s mimetic, not memetic, although there are points of contact. Girard apparently wrote that our desires are often about being something rather than having something. Alex Danco summarizes a few choice morsels:

at a deep neurological level, when we watch other people and pattern our desires off theirs, we are not so much acquiring a desire for that object so much as learning to mimic somebody, and striving to become them or become like them. Girard calls this phenomenon mimetic desire. We don’t want; we want to be.

I do not know what neurological level he is attempting to evoke. Perhaps some of that mirror neuron business. In any case, needs work, citation required.

Modern status forums like Instagram are designed explicitly to bring out this dual admiration/resentment emotion within us. Instagram’s real product isn’t photos; it’s likes. The photos and the events they depict are just the transient objects that bubble up to the surface; what really matters is the relationship between the people. But the fact that Instagram’s product is built around the objects and not the models isn’t an accident: it’s sneaky. It creates way more space and oxygen for resentment and desperation to grow beneath the surface. It’s not about the photo or what it depicts; it’s always about the other person.

Or see Byrne Hobart again:

We’re used to thinking of desire as something that emerges organically: you want something, and you try to get it. Sometimes, it’s easy; sometimes, there’s competition.

To Girard, that’s all wrong: you want something because of competition. Success is just a story you tell yourself about your desire for your rivals to fail.

6 Stand alone complex

Stand Alone Complex is a handy term in this domain.

A ‘Stand Alone Complex’ can be compared to the copycat behaviour that often occurs after incidents such as serial murders or terrorist attacks. An incident catches the public’s attention and certain types of people “get on the bandwagon”[…] It is particularly apparent when the incident appears to be the result of well-known political or religious beliefs, but it can also occur in response to intense media attention. For example, a mere fire, no matter the number of deaths, is just a garden variety tragedy. However, if the right kind of people begin to believe it was arson, caused by deliberate action, the threat increases drastically that more arsons will be committed.

What separates the ‘Stand Alone Complex’ from normal copycat behaviour is that the originator of the copied action is not even a real person, but merely a rumoured figure that commits said action. Even without instruction or leadership a certain type of person will spring into action to imitate the rumoured action and move toward the same goal even if only subconsciously. The result is an epidemic of copied behaviour, with no originator. One could say that the Stand Alone Complex is mass hysteria with purpose.

I have seen the deliberate, directed use of this referred to as stochastic terrorism; see economics of insurgence.

8 Hyperselection of transmissible beliefs

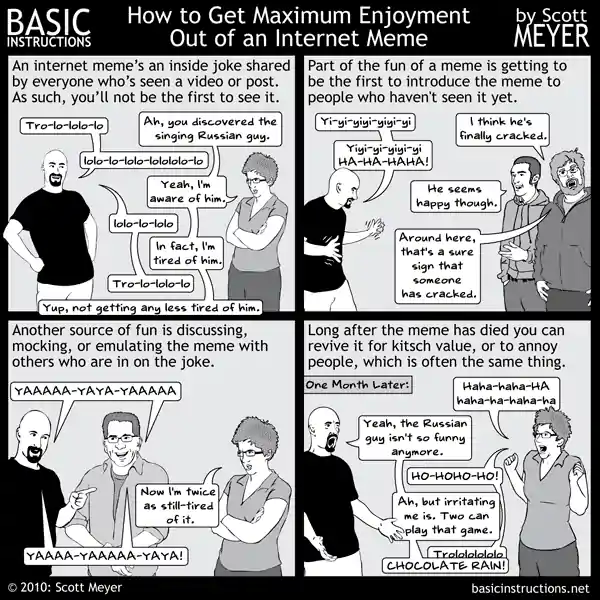

Yudkowsky’s memetic collapse post:

I’ve had the sense before that the Internet is turning our society stupider and meaner. My primary hypothesis is “The Internet is selecting harder on a larger population of ideas, and sanity falls off the selective frontier once you select hard enough”.

To review, there’s a general idea that strong (social) selection on a characteristic imperfectly correlated with some other metric of goodness can be bad for that metric, where weak (social) selection on that characteristic was good. If you press scientists a little for publishable work, they might do science that’s of greater interest to others. If you select very harshly on publication records, the academics spend all their time worrying about publishing and real science falls by the wayside. On my feed yesterday was an essay complaining about how the intense competition to get into Harvard is producing a monoculture of students who’ve lined up every single standard accomplishment and how these students don’t know anything else they want to do with their lives. Gentle, soft competition on a few accomplishments might select genuinely stronger students; hypercompetition for the appearance of strength produces weakness, or just emptiness.

A hypothesis I find plausible is that the Internet, and maybe television before it, selected much more harshly from a much wider field of memes; and also allowed tailoring content more narrowly to narrower audiences. The Internet is making it possible for ideas that are optimised to appeal hedonically-virally within a filter bubble to outcompete ideas that have been even slightly optimised for anything else. We’re looking at a collapse of reference to expertise because deferring to expertise costs a couple of hedons compared to being told that all your intuitions are perfectly right, and at the harsh selective frontier there’s no room for that. We’re looking at a collapse of interaction between bubbles because there used to be just a few newspapers serving all the bubbles; and now that the bubbles have separated there’s little incentive to show people how to be fair in their judgment of ideas for other bubbles, it’s not the most appealing Tumblr content. Print magazines in the 1950s were hardly perfect, but they could get away with sometimes presenting complicated issues as complicated, because there weren’t a hundred blogs saying otherwise and stealing their clicks. Or at least, that’s the hypothesis.

This kind of trap sounds like Moloch.
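The selection argument in the quoted post can be illustrated with a toy simulation. This is a sketch under loud assumptions of my own, not Yudkowsky's model: each idea has a Gaussian `truth` score and an independent, heavy-tailed (log-normal) `hype` score, and the audience keeps the ideas with the highest `appeal = truth + hype`. The function name, the parameter `hype_sigma`, and all the numbers are hypothetical.

```python
import math
import random


def mean_truth_of_survivors(keep_fraction, n_ideas=200_000, hype_sigma=1.5, seed=0):
    """Average truth of the ideas that survive selection on appeal.

    Assumed toy model: truth ~ Normal(0, 1), hype ~ LogNormal(0, hype_sigma),
    appeal = truth + hype. Only the top `keep_fraction` by appeal survive.
    """
    rng = random.Random(seed)
    ideas = []
    for _ in range(n_ideas):
        truth = rng.gauss(0.0, 1.0)
        hype = math.exp(rng.gauss(0.0, hype_sigma))
        ideas.append((truth + hype, truth))
    # Sort by appeal, most appealing first, and keep the selected share.
    ideas.sort(reverse=True)
    n_keep = max(1, int(keep_fraction * n_ideas))
    survivors = ideas[:n_keep]
    return sum(truth for _, truth in survivors) / n_keep


if __name__ == "__main__":
    for keep in (1.0, 0.5, 0.1, 0.01, 0.001):
        print(f"keep top {keep:>6.1%}: mean truth = {mean_truth_of_survivors(keep):+.3f}")
```

Under these assumptions, mild selection on appeal raises the average truth of what survives, because truth contributes to appeal. Very harsh selection pushes the average truth of survivors back towards the population mean, because the extreme end of the appeal distribution is dominated by hype. Whether real meme ecosystems have this heavy-tailed structure is exactly the part that is assumed rather than shown.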

9 Pluralistic ignorance

A classic stylised phenomenon. See pluralistic ignorance.

11 Incoming links

Giulio Rossetti, author of various network analysis libraries such as dynetx and ndlib.

I work on public opinion, media and climate politics. My latest paper talks about how reporting on events looks very different depending on the ideological colour of the media outlet.

Writ large, Everything is Obvious (once you know the answer) is Duncan Watts’ laundry list of examples of how all our intuitions about how society works are self-justifying guesses divorced from evidence, except for his, because Yahoo let him build his own experimental online social networks.

- Contagion and self-fulfilling dynamics

- Minimum viable superorganism is a famed introduction to the prestige economy.

Via Byrne Hobart, Odlyzko and Tilly (2005):

This note presents several quantitative arguments that suggest the value of a general communication network of size \(n\) grows like \(n\log(n)\). This growth rate is faster than the linear growth, of order \(n\), that, according to Sarnoff’s Law, governs the value of a broadcast network. On the other hand, it is much slower than the quadratic growth of Metcalfe’s Law and helps explain the failure of the dot-com and telecom booms, as well as why network interconnection (such as peering on the Internet) remains a controversial issue.
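To make the three growth rates in the abstract concrete, here is a small sketch comparing them, plus the value created when two networks interconnect. The abstract gives only growth rates, so the unit constants of proportionality, the function names, and the `merger_gain` helper are my assumptions for illustration.

```python
import math


def sarnoff_value(n):
    """Broadcast network: value grows like n."""
    return float(n)


def odlyzko_tilly_value(n):
    """General communication network: value grows like n * log(n)."""
    return n * math.log(n)


def metcalfe_value(n):
    """Metcalfe's Law: value grows like n squared."""
    return float(n) ** 2


def merger_gain(value, n_a, n_b):
    """Extra value created by interconnecting two networks of sizes n_a and n_b."""
    return value(n_a + n_b) - value(n_a) - value(n_b)


if __name__ == "__main__":
    n_a, n_b = 1_000_000, 1_000_000
    for law in (sarnoff_value, odlyzko_tilly_value, metcalfe_value):
        gain = merger_gain(law, n_a, n_b)
        share = gain / law(n_a + n_b)
        print(f"{law.__name__:>20}: merger creates {share:.1%} of combined value")
```

With equal-sized networks, interconnection creates no value under the linear law, half of the combined value under the quadratic law, and only a modest share under n log(n), which gives a rough feel for why the incentive to interconnect is weaker than Metcalfe-style reasoning suggests.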

12 References

Footnotes

1. And in any case, scientists at their most precise and factual still use emotion and metaphor to do communicative work. That is, I suspect, practically unavoidable, or worse, avoiding it would be inefficient.