Dunning-Kruger theory of mind

On anthropomorphising humans

June 18, 2020 — January 20, 2025


Content warning:

Complicated questions where we would all prefer simple answers

This piece’s title is a reference to the title of the influential paper Unskilled and Unaware of It (Kruger and Dunning 1999). In common parlance, what I want to evoke is knowing just enough to be dangerous but not enough to know your limits, which is not quite the same thing that the body of the paper discusses. Interpretation of the Dunning-Kruger effect model is a subtle affair which goes somewhat too far into the weeds for our current purposes. But see below.

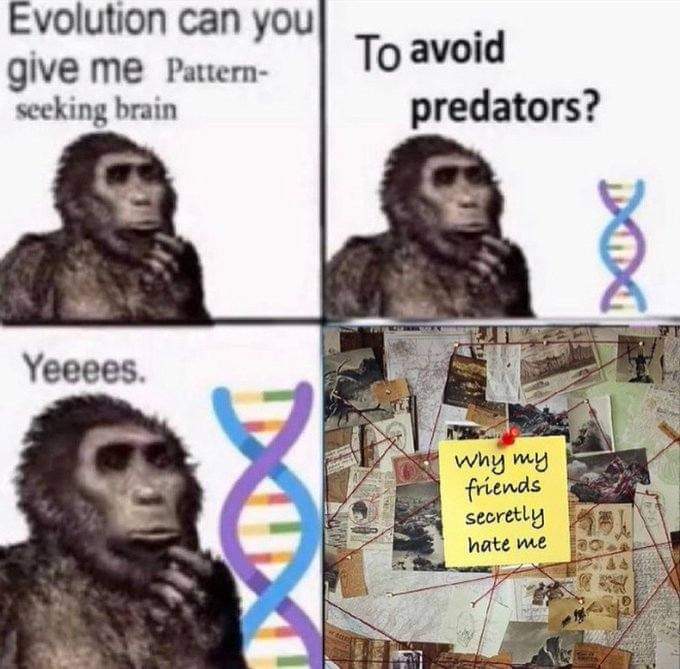

A collection of notes on our known-terrible ability to know our terrible inability to know about others and ourselves. Was that hard to parse? What I mean is that we seem to reliably

- think we know more about how people’s minds work than we do in fact know, and

- fail to learn that we do not know how people’s minds work.

To put it another way:

Being a smart, perceptive, reasonable person is exactly what being a prejudiced, sanctimonious ignoramus feels like from the inside.

I’m currently enjoying calling this the podsnappery problem.

I am especially interested in how we seem to be so terribly myopic in social perception, despite being social creatures.

I do not attempt to document every way in which our intuitions about the world are defective (after all, were we born knowing all there was to know, we would be gods). Rather, I would like to collect under the rubric Dunning-Kruger theory of mind my favourite ways in which we reliably, regularly get other people and ourselves wrong, and fail to notice that we have got it wrong, in ways which are important to address for the ongoing health and survival of our civilisation.

Biases and failures which fit in this category are depressingly diverse: pluralistic ignorance, the out-group homogeneity effect, the idiosyncratic rater effect, and many other biases I do not yet know names for. I’ll add more as they seem relevant.

Related: Stuff we learn as kids that we fail to unlearn as adults.

Relevant picks from the Buster Benson summary, A comprehensive guide to cognitive biases:

- We are drawn to details that confirm our own existing beliefs. This is a big one. As is the corollary: we tend to ignore details that contradict our own beliefs. See: Confirmation bias, Congruence bias, Post-purchase rationalization, Choice-supportive bias, Selective perception, Observer-expectancy effect, Experimenter’s bias, Observer effect, Expectation bias, Ostrich effect, Subjective validation, Continued influence effect, or Semmelweis reflex.

- We notice flaws in others more easily than flaws in ourselves. Yes, before you see this entire article as a list of quirks that compromise how other people think, realize that you are also subject to these biases. See: Bias blind spot, Naïve cynicism, or Naïve realism. […]

- We fill in characteristics from stereotypes, generalities, and prior histories whenever there are new specific instances or gaps in information. When we have partial information about a specific thing that belongs to a group of things we are pretty familiar with, our brain has no problem filling in the gaps with best guesses or what other trusted sources provide. Conveniently, we then forget which parts were real and which were filled in. See: Group attribution error, Ultimate attribution error, Stereotyping, Essentialism, Functional fixedness, Moral credential effect, Just-world hypothesis, Argument from fallacy, Authority bias, Automation bias, Bandwagon effect, or the Placebo effect.

- We imagine things and people we’re familiar with or fond of as better than things and people we aren’t familiar with or fond of. Similar to the above, but the filled-in bits generally also include built-in assumptions about the quality and value of the thing we’re looking at. See: Halo effect, In-group bias, Out-group homogeneity bias, Cross-race effect, Cheerleader effect, Well-traveled road effect, Not invented here, Reactive devaluation, or the Positivity effect. […]

- We think we know what others are thinking. In some cases this means that we assume that they know what we know, in other cases we assume they’re thinking about us as much as we are thinking about ourselves. It’s basically just a case of us modelling their own mind after our own (or in some cases, after a much less complicated mind than our own). See: Curse of knowledge, Illusion of transparency, Spotlight effect, Illusion of external agency, Illusion of asymmetric insight, or the Extrinsic incentive error.

Now, a catalogue of theory-of-mind failings from everyday life that I would like to take into account. There are many, many more.

Recommended as a fun general introduction to this topic: David McRaney’s You Are Not So Smart (McRaney 2012), which has an excellent accompanying podcast with some updated material.

1 In popular discourse

- Evolutionary psychology is prone to some weirdness here. David Banks’s Podcast Out appealed to me:

[…] liberal infotainment is full of statements that sound like facts—what social media theorist Nathan Jurgenson calls “factiness” — that do nothing more than reinforce and rationalize the listeners’ already formed common sense, rather than transforming it: what you believed to be true before the show started was not wrong, it just lacked the veneer of factiness.

Each show delivers an old anecdote from an economist or a new study from a team of neuroscientists that shows “we may actually be hardwired to do” exactly what we feel comfortable doing. Cue the same word repeated by a dozen whispering voices, or a few bars of a Ratatat rip-off ambient band, and we’re on to a new book that argues organic food is not only good for you, it might make you a better person too. […] NPR’s podcasts depoliticize important issues by recasting them as interesting factoids to be shared over cocktails—stimulating but inherently incomplete. No one can act until we get more data; we must wait until Monday, when we get another round of podcasts.

He’s calling for more social science and deliberative non-positivist analysis, which is reasonable, although I don’t think it is sufficient, nor that it could feasibly fit the info-popcorn format of podcasts. There is also positivist science that doesn’t get a look-in, but even with the whole smörgåsbord of science on display and optimally dot-pointed for mansplanation, are we ever going to get a deep understanding of society from such shallow engagement?

Anyway, it’s more articulate than The Last Psychiatrist’s summary:

if you bring up This American Life I swear to Christ I’m coming over to set your cats on fire. “This week on This American Life, some banal idiocy, set to jazz breaks” — kill me

Nonetheless, that one did stick with me enough to ruin This American Life forever.

2 Dunning-Krugering Dunning-Kruger

Is the Dunning-Kruger effect itself real? Maybe. Dan Luu, Dunning-Kruger and Other Memes:

A pop-sci version of Dunning-Kruger, the most common one I see cited, is that, the less someone knows about a subject, the more they think they know. Another pop-sci version is that people who know little about something overestimate their expertise because their lack of knowledge fools them into thinking that they know more than they do. The actual claim Dunning and Kruger make is much weaker than the first pop-sci claim and, IMO, the evidence is weaker than the second claim.

The whole story is indeed more nuanced and contingent than the one you might pick up from the 10-word summary, and the original finding may itself be wrong, but that is a whole other matter.
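One commonly raised reason to be suspicious of the canonical Dunning-Kruger plot is that part of its shape can arise from noise plus regression to the mean. Here is a toy Python simulation under assumptions I made up for illustration: each simulated person has a latent skill, and both their test score and their self-assessment are that skill plus independent Gaussian noise, so nobody in the model is biased at all. Binning people by test-score quartile and comparing the mean actual percentile with the mean self-assessed percentile still produces the familiar picture, with the bottom quartile “overestimating” and the top quartile “underestimating”. This is a sketch of the artefact argument, not a re-analysis of Kruger and Dunning’s data, and it says nothing about whether people are biased upward on average.

```python
import random
from statistics import mean


def percentiles(xs):
    """Map each value to its percentile rank (0-100) within xs."""
    n = len(xs)
    order = sorted(range(n), key=xs.__getitem__)
    pct = [0.0] * n
    for rank, i in enumerate(order):
        pct[i] = 100.0 * (rank + 0.5) / n
    return pct


def dk_quartiles(n=10_000, noise_sd=1.0, seed=0):
    """Toy model: self-assessment is noisy but has no bias term.

    Returns, for each test-score quartile (bottom first), a pair
    (mean actual percentile, mean self-assessed percentile).
    """
    rng = random.Random(seed)
    skill = [rng.gauss(0, 1) for _ in range(n)]
    test = [s + rng.gauss(0, noise_sd) for s in skill]      # measured performance
    self_est = [s + rng.gauss(0, noise_sd) for s in skill]  # self-assessment, unbiased
    test_pct = percentiles(test)
    self_pct = percentiles(self_est)
    bins = [[] for _ in range(4)]
    for t, s in zip(test_pct, self_pct):
        bins[min(int(t // 25), 3)].append((t, s))
    return [(mean(t for t, _ in b), mean(s for _, s in b)) for b in bins]


for q, (actual, perceived) in enumerate(dk_quartiles(), start=1):
    print(f"quartile {q}: actual {actual:5.1f}, self-assessed {perceived:5.1f}")
```

Increasing `noise_sd` (a hypothetical knob, not a quantity from the paper) makes self-assessments regress harder toward the middle, which widens the apparent “unskilled and unaware” gap without anyone becoming more deluded.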

This is an interesting case study in social psychology itself: does even having social psychology to hand help us?

- Social psychology is a flamethrower: a rhetorically eloquent complaint about the political uses of too-easy theorising that claims we know what’s going on.

3 On evaluating others

The first problem with feedback is that humans are unreliable raters of other humans. Over the past 40 years psychometricians have shown in study after study that people don’t have the objectivity to hold in their heads a stable definition of an abstract quality, such as business acumen or assertiveness, and then accurately evaluate someone else on it. Our evaluations are deeply coloured by our own understanding of what we’re rating others on, our own sense of what good looks like for a particular competency, our harshness or leniency as raters, and our own inherent and unconscious biases. This phenomenon is called the idiosyncratic rater effect, and it’s large (more than half of your rating of someone else reflects your characteristics, not hers) and resilient (no training can lessen it). In other words, the research shows that feedback is more distortion than truth.
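To make “more than half of your rating reflects you” concrete, here is a toy additive model in Python. The variance parameters are ones I picked for illustration, not estimates from the psychometric literature: each rating is the ratee’s true standing, plus a per-rater leniency offset, plus noise. When every rater rates every ratee, the spread of the raters’ average ratings approximates the rater component, which we can compare against the total variance of all ratings. With the default made-up parameters the expected share is 1.44 / (1 + 1.44 + 0.25), a bit over half. A real analysis would also have to handle rater-by-ratee interactions, which this model lumps into noise, so treat it as a cartoon.

```python
import random
from statistics import mean, pvariance


def rater_share(n_raters=200, n_ratees=200,
                sd_ratee=1.0, sd_rater=1.2, sd_noise=0.5, seed=1):
    """Fraction of rating variance attributable to who is doing the rating.

    Hypothetical additive model:
        rating[r][e] = true_standing[e] + leniency[r] + noise
    """
    rng = random.Random(seed)
    standing = [rng.gauss(0, sd_ratee) for _ in range(n_ratees)]
    leniency = [rng.gauss(0, sd_rater) for _ in range(n_raters)]
    ratings = [[standing[e] + leniency[r] + rng.gauss(0, sd_noise)
                for e in range(n_ratees)]
               for r in range(n_raters)]
    all_ratings = [x for row in ratings for x in row]
    # Every rater scores the same ratees, so the ratee effect is identical in
    # each rater's mean; the spread of rater means is then mostly leniency.
    rater_means = [mean(row) for row in ratings]
    return pvariance(rater_means) / pvariance(all_ratings)


print(f"share of rating variance due to raters: {rater_share():.2f}")
```

Setting `sd_rater=0.0` shows the contrast: the rater share collapses to nearly zero, and the ratings mostly track the ratees.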

The real question is why individuals often try to avoid feedback that provides them with more accurate knowledge of themselves.

The Selective Laziness of Reasoning (Trouche et al. 2016):

many people will reject their own arguments—if they’re tricked into thinking that other people proposed them. […]

By “selective laziness”, Trouche et al. are referring to our tendency to only bother scrutinising arguments coming from other people who we already disagree with. To show this, the authors first got Mturk volunteers to solve some logic puzzles (enthymematic syllogisms), and to write down their reasons (arguments) for picking the answer they did. Then, in the second phase of the experiment, the volunteers were shown a series of answers to the same puzzles, along with arguments supporting them. They were told that these were a previous participant’s responses and were asked to decide whether or not the “other volunteer’s” arguments were valid. The trick was that one of the displayed answers was, in fact, one of the participant’s own responses that they had written earlier in the study. So the volunteers were led to believe that their own argument was someone else’s. It turned out that almost 60% of the time, the volunteers rejected their own argument and declared that it was wrong. They were especially likely to reject it when they had, in fact, been wrong the first time.

This is kind of a hopeful one, when you think about it. It means that we are better at identifying the flaws in each other’s arguments than in our own. If only we could normalise critical feedback without it blowing up into flame-wars, we might find that collective reasoning is more powerful than individual reasoning. Indeed, is this precisely how science works?

4 On erroneously thinking my experience is universal

A.k.a. denying the reality of lived experience.

TBC. How do we mediate between our own experience and the experience of others when both are faulty representations of reality? How do we maintain both humility and scepticism at once? For bonus points, how do we do that even across a cultural divide?

5 On erroneously thinking my experience is not universal

TODO: write about out-group homogeneity bias and other biases that lead me to believe that other groups are unlike my group in spurious ways. Out-group homogeneity bias is the one that says, e.g., “those people are all the same, not marvellously diverse like us.”

This one seems ubiquitous in my personal experience, by which I mean that I experience it all the time in my own thoughts, and also, that other people seem very ready to claim that some outgroup is not only like that but that they are all like that.

TBC.

6 On understanding how others think

TODO: raid the following for references: Why You’re Constantly Misunderstood on Slack (and How to Fix It).

A connection to the status literature: People of higher status are more likely to think that those who disagree with them are stupid or biased — even when their high status is the result of a random process (Brown-Iannuzzi et al. 2021).

The researchers first analysed data from 2,374 individuals who participated in the 2016 American National Election Studies Time Series Survey, a nationally representative survey of U.S. citizens. As expected, liberals and conservatives were more likely to describe the opposing political party as uninformed, irrational, and/or biased compared to their own party.

Importantly, the researchers found that this was especially true among those with a higher socio-economic status. Among more liberal participants, higher status individuals displayed more naive realism toward Republicans. Among more conservative participants, higher status individuals displayed more naive realism toward Democrats.

In a follow-up experiment, the researchers experimentally manipulated people’s sense of status through an investment game. The study of 252 participants found that those who were randomly told they had performed “better than 89% of all players to date” were more likely to say that people who disagreed with their investment advice were biased and incompetent.

For ages my go-to bias to think about here was the fundamental attribution error, which seems ubiquitous to me.

In social psychology, fundamental attribution error (FAE), also known as correspondence bias or attribution effect, is the tendency for people to under-emphasise situational explanations for an individual’s observed behaviour while over-emphasising dispositional and personality-based explanations for their behaviour. This effect has been described as “the tendency to believe that what people do reflects who they are”.

Apparently it has been called into question? (Epstein and Teraspulsky 1986; Malle 2006) TODO: investigate.

Naive realism is also a phenomenon of note in this category (Gilovich and Ross 2015; Ross and Ward 1996). David McRaney’s summary:

[N]aive realism also leads you to believe you arrived at your opinions, political or otherwise, after careful, rational analysis through unmediated thoughts and perceptions. In other words, you think you have been mainlining pure reality for years, and like Gandalf studying ancient texts, your intense study of the bare facts is what has naturally led to your conclusions.

Ross says that since you believe you are in the really-real, true reality, you also believe that you have been extremely careful and devoted to sticking to the facts and thus are free from bias and impervious to persuasion. Anyone else who has read the things you have read or seen the things you have seen will naturally see things your way, given that they’ve pondered the matter as thoughtfully as you have. Therefore, you assume, anyone who disagrees with your political opinions probably just doesn’t have all the facts yet. If they had, they’d already be seeing the world like you do. This is why you continue to ineffectually copy and paste links from all our most trusted sources when arguing your points with those who seem misguided, crazy, uninformed, and just plain wrong. The problem is, this is exactly what the other side thinks will work on you.

7 If we were machines would we understand other machines this badly?

TBD. Consider minds as machine learning and see what that would tell us about learners that learn to model learners. Possibly that means predictive coding.

8 Remedies

CFAR, putting money on the line, calibration. That example about set theory and cards. TBC.
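Calibration is one of the few remedies on this list that can actually be scored, so here is a minimal Python sketch of checking one’s own: record each belief as a stated probability alongside what actually happened, compute the Brier score (mean squared difference between probability and 0/1 outcome, lower is better), and bin forecasts by stated confidence to compare against observed frequency. The function names, the equal-width binning, and the forecast log are my own hypothetical choices for illustration, not CFAR’s method.

```python
from collections import defaultdict


def brier_score(probs, outcomes):
    """Mean squared difference between forecast probability and 0/1 outcome."""
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need equal-length, non-empty inputs")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def calibration_table(probs, outcomes, n_bins=5):
    """Group forecasts into equal-width probability bins.

    Returns {bin_index: (mean stated probability, observed frequency, count)}.
    A well-calibrated forecaster has the first two numbers close in every bin.
    """
    bins = defaultdict(list)
    for p, o in zip(probs, outcomes):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, o))
    return {
        k: (sum(p for p, _ in v) / len(v), sum(o for _, o in v) / len(v), len(v))
        for k, v in sorted(bins.items())
    }


# Hypothetical forecast log: stated probability that each claim was true, and whether it was.
probs = [0.9, 0.9, 0.9, 0.9, 0.6, 0.6, 0.3, 0.1]
outcomes = [1, 1, 0, 0, 1, 0, 0, 0]
print(brier_score(probs, outcomes))
print(calibration_table(probs, outcomes))
```

In this made-up log the claims held with 90% confidence came true only half the time, which is the overconfidence pattern that calibration exercises are meant to surface.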

9 On evaluating ourselves

I cannot even.

10 Moral wetware

See moral wetware.