Advice calibration

January 24, 2022 — January 13, 2025


See also advice HOWTO.

The problem of moving from advice that might be good on average at the population level (eat less sugar) to individual-level advice (if one has an eating disorder, maybe don’t sweat the sugar) is surprisingly hard.

C&C the “Reverse advice you hear” idea (although I think the framing in my head is broader and better) and the statistical problem that interaction effects are what we want but are hard to learn.

The concept I want to get at is something like the one in Bravery Debates: “I feel pretty okay about both being sort of a libertarian and writing an essay arguing against libertarianism, because the world generally isn’t libertarian enough but the sorts of people who read long online political essays generally are way more libertarian than can possibly be healthy.” Try applying the same reasoning to any outlier political philosophy.

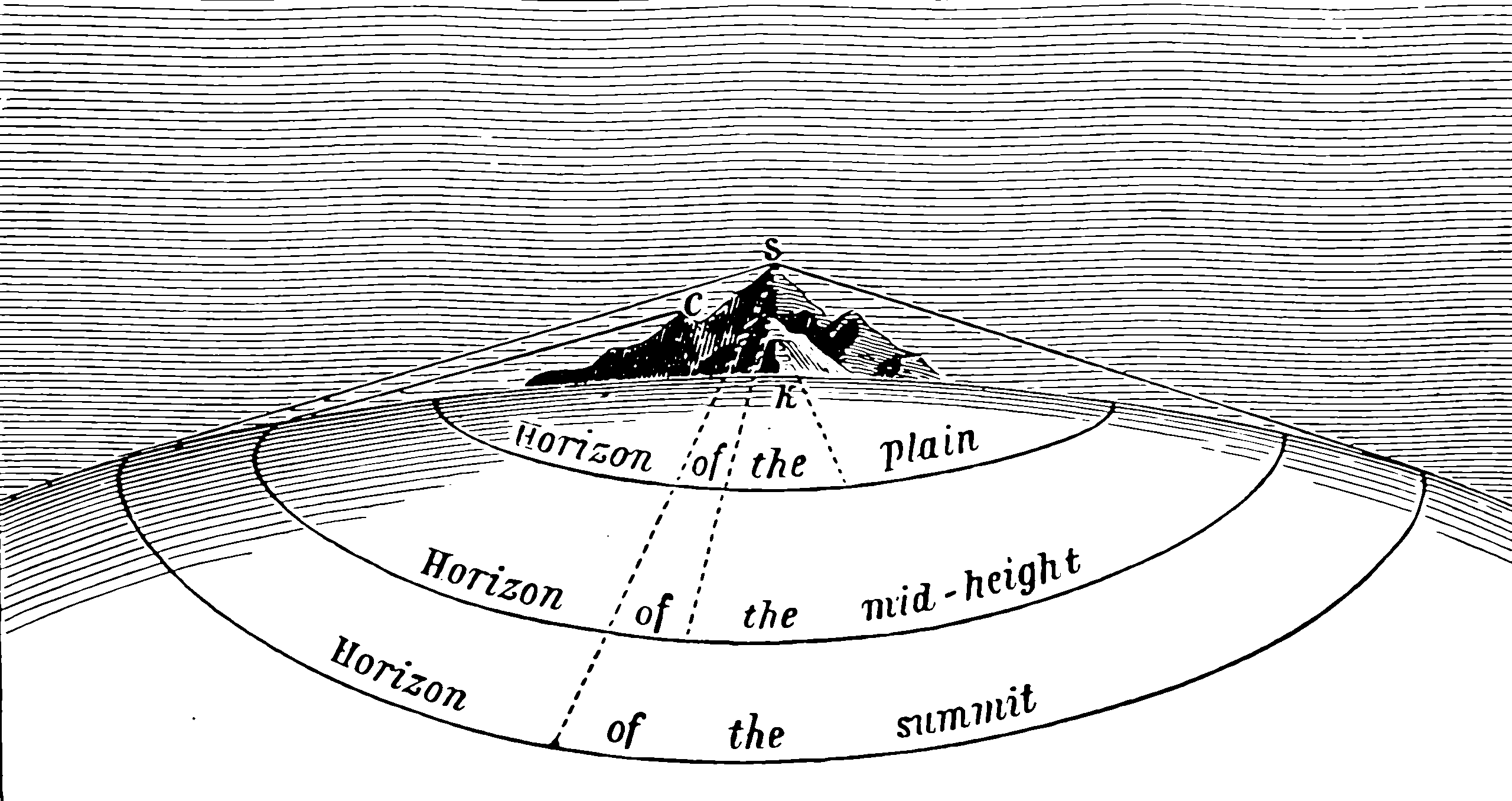

We can frame advice as a statistical problem in which our advice needs external validity. If we are also learners, should we not be concerned about how our own hypotheses generalise to the wider world outside our experience?

Every pundit has a model of what the typical member of the public thinks and directs their advice accordingly. For many reasons, that model is likely to be wrong: the readers of any given pundit are a self-selecting sample, and the pundit’s intuitive model of society is distorted. Surveying their readership could help, but that is difficult to do well.

So all advice of the form “People should do more X” is suspect. It rests on the author’s assumption that the readers are in class A, but they could easily be in class B, who perhaps should do less X, either because X does not work for class B people in general, or because class B people have probably already done too much X and need to lay off it for a while.
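A toy simulation makes the class A/class B problem concrete. It is a sketch under invented assumptions, not data: both classes share the same ideal amount of X, class A sits two units below it and class B two units above (so it models the “already done too much X” mechanism, not the “X doesn’t work for class B” one), class B is 20% of the public but far more likely to read the essay, and welfare is minus the squared distance from the ideal. The names `SHARE_B`, `READ_PROB`, `OPTIMUM` and the helper functions are hypothetical.

```python
import random
from statistics import fmean

# Toy model: every number below is an invented assumption, not data.
SHARE_B = 0.2                      # fraction of the public in class B
READ_PROB = {"A": 0.1, "B": 0.9}   # class B is far more likely to read the essay
OPTIMUM = 5.0                      # ideal amount of X, assumed equal for both classes


def make_population(n, seed=0):
    """Return (class, current_x) pairs: class A does too little X, class B too much."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        cls = "B" if rng.random() < SHARE_B else "A"
        offset = 2.0 if cls == "B" else -2.0
        people.append((cls, OPTIMUM + offset + rng.gauss(0, 0.5)))
    return people


def gain_from_more_x(current_x, delta=1.0):
    """Welfare is minus squared distance from OPTIMUM; return its change after adding delta."""
    return (current_x - OPTIMUM) ** 2 - (current_x + delta - OPTIMUM) ** 2


def compare(n=100_000, seed=0):
    """Average effect of the advice 'do one more unit of X' on the public vs. on readers."""
    rng = random.Random(seed + 1)
    people = make_population(n, seed)
    # Self-selection: each person reads the essay with a class-dependent probability.
    readers = [p for p in people if rng.random() < READ_PROB[p[0]]]
    population_gain = fmean(gain_from_more_x(x) for _, x in people)
    reader_gain = fmean(gain_from_more_x(x) for _, x in readers)
    return population_gain, reader_gain


pop_gain, reader_gain = compare()
print(f"'Do more X' for the public:  {pop_gain:+.2f}")
print(f"'Do more X' for the readers: {reader_gain:+.2f}")
```

On a back-of-envelope calculation, one more unit of X is worth about +3 to a typical class A person and about -5 to a typical class B person, so the same advice averages roughly +1.4 across the public but roughly -2.5 across the self-selected readers.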

This is a problem for general advice. The living world, and especially the social world, is made of adaptive systems, which are characterised by complicated control problems where the answer is often not “always more X” but rather “Hit the sweet spot of the perfect amount of X, not too little, not too much” where the “perfect amount” is a function of the context.
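The “sweet spot” claim can be written as a one-line optimisation. Assume, purely for illustration, a quadratic payoff in the amount $x$ of X, with a benefit rate $a > 0$ and a cost curvature $b(c) > 0$ that depends on the context $c$ (both symbols are introduced here, not taken from any source):

$$
\begin{aligned}
U(x; c) &= a\,x - \tfrac{1}{2}\, b(c)\, x^2, \\
\frac{\partial U}{\partial x} &= a - b(c)\, x, \\
x^\star(c) &= \frac{a}{b(c)}.
\end{aligned}
$$

“Do more X” helps exactly when $x < x^\star(c)$ and hurts when $x > x^\star(c)$. Because the optimum moves with $c$, the same current habit can warrant opposite advice in two different contexts; other single-peaked payoffs behave the same way qualitatively.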

1 Alpha

Rohit draws a useful distinction in Beware The Idle Kantian, where he describes the Idle Kantian problem:

Categorical imperative misapplied from thinking the question “if everyone did as I, would it be moral”, into the statement “if everyone did as I think it would be moral”.

To be an Idle Kantian is to believe that any proof that an action isn’t a universal law means that it’s not lawful and should be toppled forthwith.

I like the insight that we have trouble working out which aspects of our platform are universal. Sometimes, though, we are OK at this:

… There are entire professions dedicated to not being Idle Kantians. Finance is one, where your entire edge often revolves around being able to do an action such that, if everyone else did it too, it will be made irrelevant. Writing is another, where the novel you write or the essay you write has to be one that you wanted to write, not the one that would be best if everyone else wrote too.

I believe the verbal shorthand for this is seeking alpha.
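The finance version of the Idle Kantian trap can be shown with a toy crowding model. Assume, hypothetically, a fixed pool of mispricing profit split equally among everyone running a strategy, each of whom pays a fixed cost. `POOL`, `COST`, `POPULATION` and `profit_per_adopter` are invented for illustration; the point is only that a strategy can pay well for a few adopters and lose money once it is universalised.

```python
# Toy crowding model; pool size, cost and population are invented numbers.
POOL = 100.0        # total mispricing profit available per period (hypothetical)
COST = 1.0          # fixed cost each adopter pays to run the strategy (hypothetical)
POPULATION = 1000   # everyone who could adopt the strategy (hypothetical)


def profit_per_adopter(adopters: int) -> float:
    """Each adopter gets an equal slice of the fixed pool, minus their own cost."""
    if adopters < 1:
        raise ValueError("need at least one adopter")
    return POOL / adopters - COST


# Compare a niche strategy, a crowded one, and the 'everyone did as I' case.
for adopters in (10, 100, POPULATION):
    print(f"{adopters:>5} adopters: {profit_per_adopter(adopters):+.2f} each")
```

With these numbers each adopter nets +9.00 when 10 people run the strategy, breaks even at 100, and loses 0.90 when all 1,000 do. The universalised version failing says little about whether the action pays for the first few who take it.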

Sam Atis, in Most advice is pretty bad, shows the needle we might try to thread here:

I think good advice has three main components:

- It is not obvious

- It is actionable

- It is based on some true insight

2 Incoming

Almost all advice is contextual, yet it is rarely delivered with any context.… Without understanding the context, the advice is meaningless, or even worse, harmful. If those folks had followed their own advice early on, they themselves would likely have suffered from it. It is hard to escape this trap. We may be the culmination of our experiences, but we view them through the lens of the present.


Big picture, my impression is that advice just doesn’t seem to do all that much. Why?