Explainability of human ethical algorithms

October 9, 2023 — October 9, 2023

bounded compute

collective knowledge

cooperation

culture

ethics

gene

incentive mechanisms

institutions

machine learning

neuron

sparser than thou

utility

wonk


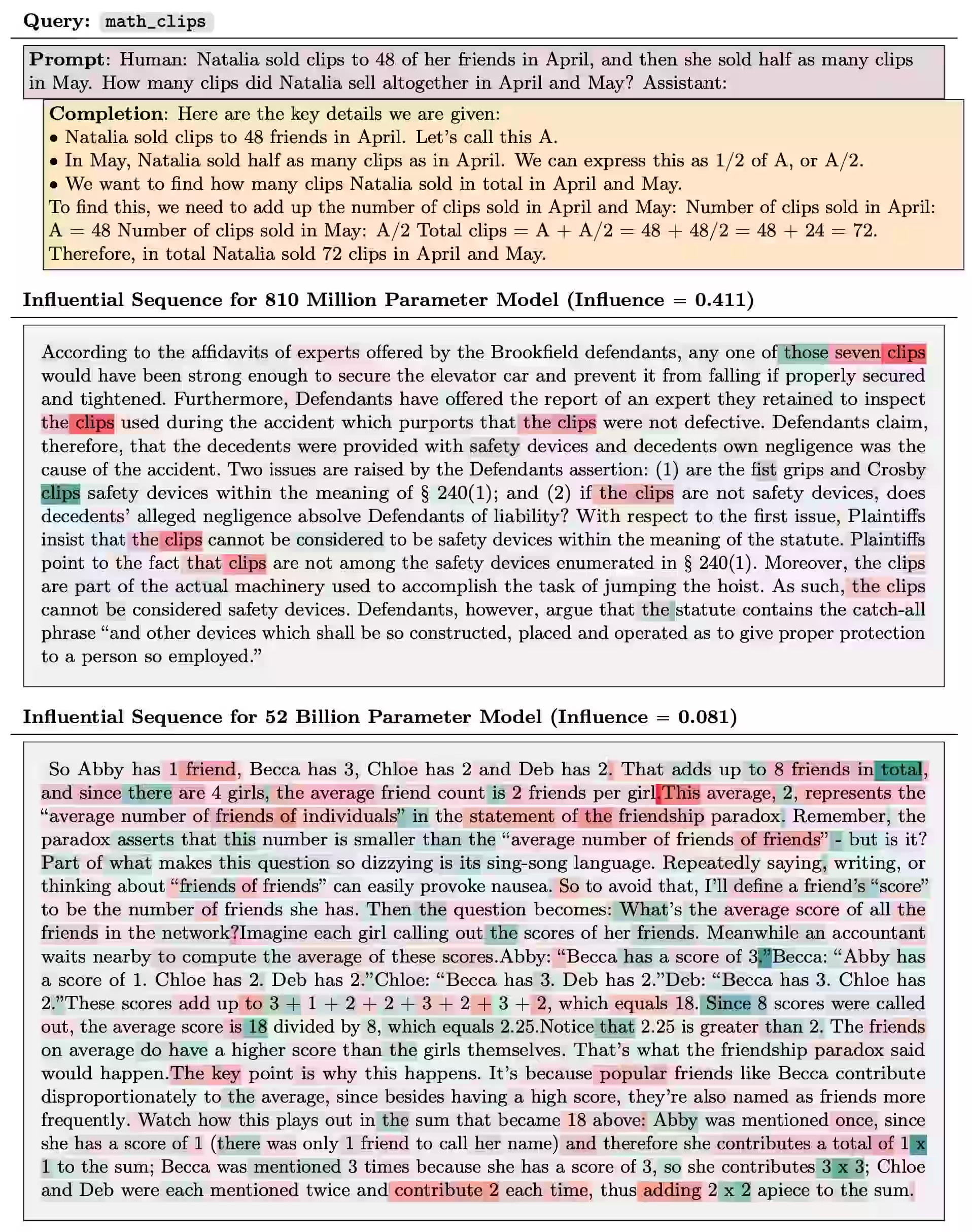

Can we explain the ethical algorithms that humans run, in the same way that we attempt to explain black-box ML algorithms?

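I’m thinking of techniques like influence functions, which estimate how much each training example contributed to a particular output, i.e. which examples a model reasons from, as in (Grosse et al. 2023). Explanations in terms of features, rather than examples, would also be interesting.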
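To make "which examples we reason from" concrete, here is a minimal sketch of the textbook influence-function score on a toy ridge regression, under assumptions of my own: a two-parameter linear model, squared loss, and a penalty `lam` chosen for illustration. The score for a training example z is -∇L(z_test)ᵀ H⁻¹ ∇L(z), the first-order change in test loss if z were upweighted. A positive score means z pushes the prediction the wrong way, and a negative score means z helps. This is not the Grosse et al. pipeline, which needs approximations to the inverse Hessian to scale to large language models. All function names are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) pair
Vec = List[float]
Mat = List[List[float]]


def features(x: float) -> Vec:
    # Hypothetical toy model: prediction = theta . (x, 1), i.e. slope and intercept.
    return [x, 1.0]


def solve2(m: Mat, v: Vec) -> Vec:
    # Solve the 2x2 linear system m @ out = v via Cramer's rule.
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [(m[1][1] * v[0] - m[0][1] * v[1]) / det,
            (m[0][0] * v[1] - m[1][0] * v[0]) / det]


def fit(data: List[Point], lam: float = 0.1,
        weights: Optional[List[float]] = None) -> Tuple[Vec, Mat]:
    # Minimise sum_i w_i * 0.5 * (theta . phi_i - y_i)^2 + 0.5 * lam * |theta|^2.
    # Returns the optimal theta and the Hessian H of that objective.
    if weights is None:
        weights = [1.0] * len(data)
    h = [[lam, 0.0], [0.0, lam]]
    rhs = [0.0, 0.0]
    for (x, y), w in zip(data, weights):
        phi = features(x)
        for a in range(2):
            rhs[a] += w * phi[a] * y
            for b in range(2):
                h[a][b] += w * phi[a] * phi[b]
    return solve2(h, rhs), h


def residual(theta: Vec, point: Point) -> float:
    x, y = point
    phi = features(x)
    return theta[0] * phi[0] + theta[1] * phi[1] - y


def loss(theta: Vec, point: Point) -> float:
    return 0.5 * residual(theta, point) ** 2


def grad(theta: Vec, point: Point) -> Vec:
    # Gradient of the squared loss w.r.t. theta: residual * phi.
    r = residual(theta, point)
    return [r * f for f in features(point[0])]


def influence(data: List[Point], test_point: Point, lam: float = 0.1) -> Vec:
    # score(z) = -grad L(test)^T H^{-1} grad L(z); positive means harmful.
    theta, h = fit(data, lam)
    g_test = grad(theta, test_point)
    scores = []
    for z in data:
        s = solve2(h, grad(theta, z))  # H^{-1} grad L(z)
        scores.append(-(g_test[0] * s[0] + g_test[1] * s[1]))
    return scores


# Usage: four points near y = x plus one outlier at x = 4.
train = [(0.0, 0.1), (1.0, 1.0), (2.0, 2.1), (3.0, 2.9), (4.0, 9.0)]
test_pt = (5.0, 5.0)
scores = influence(train, test_pt)
for point, score in zip(train, scores):
    print(point, round(score, 3))
```

On this toy data the outlier (4.0, 9.0) gets the largest positive score, since it drags the fitted line away from the test point. You can sanity-check each score by nudging one example's weight in `fit` and measuring the change in test loss. The analogous question for a person would be which remembered cases a given moral judgement leans on.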

There is a connection to casuistry, the case-based tradition of moral reasoning (Jonsen, Toulmin, and Toulmin 1988).

1 Disgust

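Disgust is an interesting starting point, since disgust sensitivity seems to track moral and political attitudes (Inbar, Pizarro, and Bloom 2009; Inbar et al. 2012; Feinberg et al. 2014). See disgust.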

2 Theory of moral sentiments

See, e.g., Haidt (2013).

3 References

Beauchamp and Childress. 1979. Principles of Biomedical Ethics.

DellaPosta, Shi, and Macy. 2015. “Why Do Liberals Drink Lattes?” American Journal of Sociology.

Feinberg, Antonenko, Willer, et al. 2014. “Gut Check: Reappraisal of Disgust Helps Explain Liberal–Conservative Differences on Issues of Purity.” Emotion.

Grosse, Bae, Anil, et al. 2023. “Studying Large Language Model Generalization with Influence Functions.”

Haidt. 2013. The Righteous Mind: Why Good People Are Divided by Politics and Religion.

Inbar, Pizarro, and Bloom. 2009. “Conservatives Are More Easily Disgusted Than Liberals.” Cognition and Emotion.

Inbar, Pizarro, Iyer, et al. 2012. “Disgust Sensitivity, Political Conservatism, and Voting.” Social Psychological and Personality Science.

Jonsen, Toulmin, and Toulmin. 1988. The Abuse of Casuistry: A History of Moral Reasoning.

Gintis, Bowles, Boyd, and Fehr, eds. 2006. Moral Sentiments and Material Interests: The Foundations of Cooperation in Economic Life.

Storr. 2021. The Status Game: On Human Life and How to Play It.