Model interpretation and explanation

Colorising black boxes; mechanistic interpretability

September 1, 2016 — October 7, 2024


The meeting point of differential privacy, accountability, interpretability, the tank detection story, clever horses in machine learning: Can I explain why my model made this prediction?

Closely related: am I explaining the model so I can see if it is fair?

There is much work; I understand little of it at the moment, but I keep needing to refer to papers, so this notebook exists.

1 Impossibility results

One catch with “explainable” models is that the explanation needs to be simpler than the model it explains, or it would be just as incomprehensible. What is the actual trade-off? Can we sketch a Pareto frontier of interpretability and accuracy?

- Cassie Kozyrkov, Explainable AI won’t deliver. Here’s why.

- Wolters Kluwer, Peeking into the Black Box: A Design Perspective on Comprehensible AI, Part 1

- Rudin (2019) argues that interpretable models can be worth the tradeoff relative to black boxes.

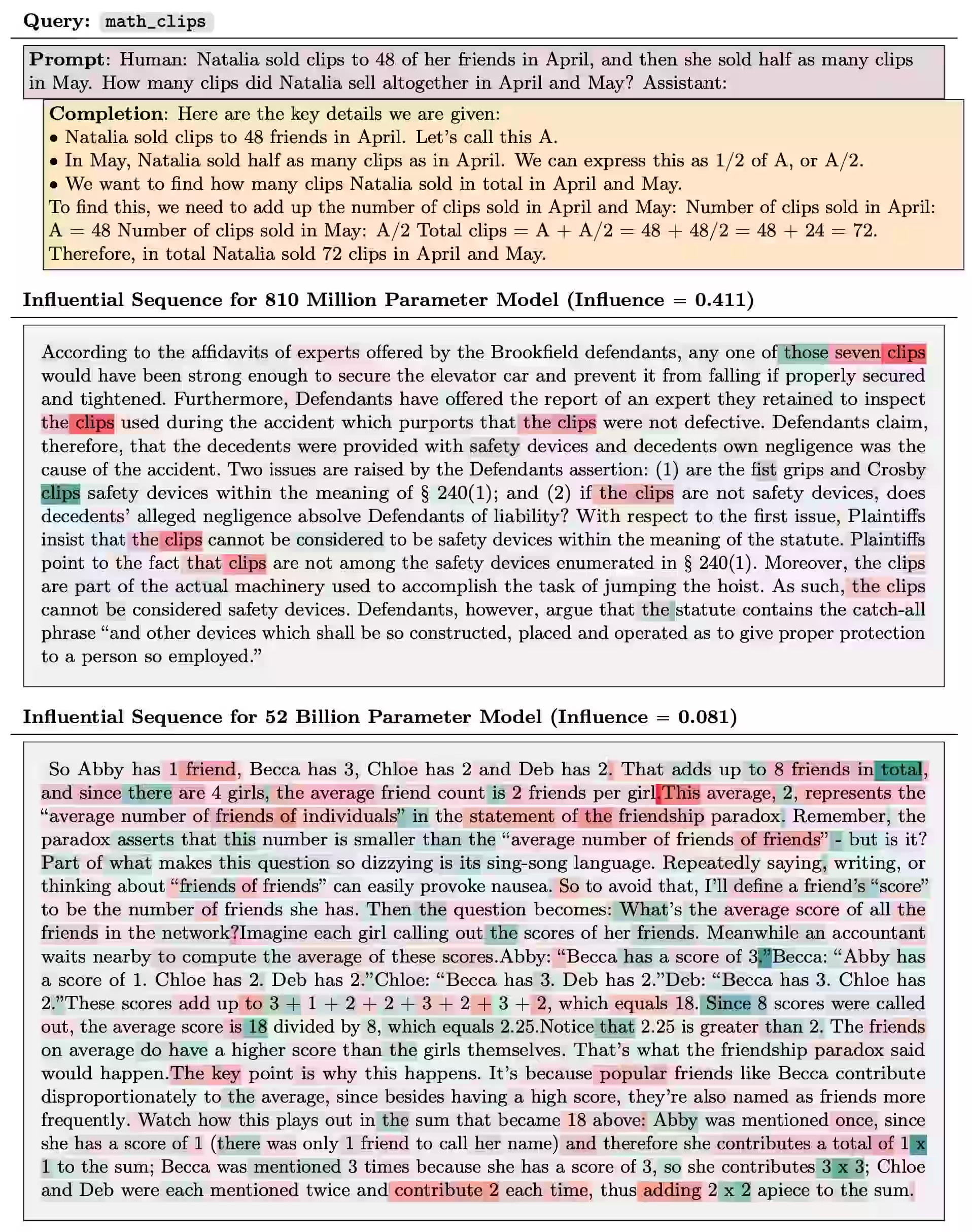

2 Influence functions

If we think about models as interpolators of memorised training data, then the idea of looking at influence functions from individual training data becomes powerful.

See Tracing Model Outputs to the Training Data (Grosse et al. 2023).
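To make the idea concrete, here is a minimal sketch of the first-order influence approximation on a deliberately tiny problem: a one-parameter least-squares fit, where the Hessian is a scalar and leave-one-out retraining is cheap enough to compare against. The toy data and the names `fit`, `grad_loss` and `removal_influence` are my own illustrative assumptions, not anything from Grosse et al. (2023); in large models the inverse Hessian must itself be approximated.

```python
def fit(xs, ys):
    """Least-squares slope for the model y = theta * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def grad_loss(theta, x, y):
    """Gradient in theta of the per-example loss 0.5 * (theta * x - y) ** 2."""
    return (theta * x - y) * x


def removal_influence(xs, ys, i):
    """First-order estimate of the change in theta if example i is deleted.

    Up-weighting example i by eps moves theta by about -eps * grad_i / H,
    where H is the Hessian of the mean training loss. Deleting the example
    corresponds to eps = -1/n, hence the estimate grad_i / (n * H).
    """
    n = len(xs)
    theta = fit(xs, ys)
    hessian = sum(x * x for x in xs) / n
    return grad_loss(theta, xs[i], ys[i]) / (n * hessian)


# Deterministic toy data (hypothetical): slope 2 plus alternating noise.
xs = [0.1 * i for i in range(1, 21)]
ys = [2.0 * x + (0.1 if i % 2 else -0.1) for i, x in enumerate(xs)]

theta = fit(xs, ys)
for i in (0, 9, 19):
    # Exact answer by actually refitting without example i.
    exact = fit(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]) - theta
    print(i, removal_influence(xs, ys, i), exact)
```

In this toy case the estimate and the exact leave-one-out change always share a sign, and the estimate is somewhat too small for high-leverage points (large `x`), because the approximation ignores how deleting a point changes the Hessian.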

Integrated gradients seem to be in this family, although they attribute a prediction to input features rather than to training examples. See Ancona et al. (2017); Sundararajan, Taly, and Yan (2017). The Captum implementation seems neat.
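As a rough illustration of what integrated gradients compute, the sketch below averages the gradient along a straight path from a baseline to the input and scales by the input difference. The toy `model`, its hand-written `grad`, and the all-zeros baseline are assumptions made for the example; this is not the Captum API, which works on PyTorch models and uses autograd.

```python
import math


def model(x):
    """Hypothetical differentiable model with an interaction and a saturating term."""
    return x[0] * x[1] + math.tanh(x[2])


def grad(x):
    """Analytic gradient of `model` (a real implementation would use autodiff)."""
    return [x[1], x[0], 1.0 - math.tanh(x[2]) ** 2]


def integrated_gradients(grad_fn, x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of the path integral of the gradient."""
    d = len(x)
    total = [0.0] * d
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        g = grad_fn(point)
        for i in range(d):
            total[i] += g[i]
    # Scale the averaged gradient by the displacement from the baseline.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, 2.0, 0.5]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(grad, x, baseline)
print(attributions)
print(sum(attributions), model(x) - model(baseline))
```

The two numbers on the last line should agree up to discretisation error: the attributions sum to the difference between the model output at the input and at the baseline, which is the completeness property that motivates the method.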

3 Shapley values

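Shapley values come from cooperative game theory, where they define a fair division of a payoff among players; this turns out to be applicable to explanation. Exact computation is intractable in general, since it sums over exponentially many coalitions, but there are some fashionable approximations in the form of SHAP values.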

Not sure what else happens here, but see (Ghorbani and Zou 2019; Hama, Mase, and Owen 2022; Scott M. Lundberg et al. 2020; Scott M. Lundberg and Lee 2017) for applications to the explanation of both data and features.
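For intuition about why exact computation blows up, here is a small sketch that computes exact Shapley values by brute force over all coalitions. The three "features" and the `payoff` function are a made-up toy game, not anything from the cited papers, and this is the textbook definition rather than what the SHAP library does internally.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values: weighted average marginal contribution of each player.

    Visits every coalition of the other players, so the cost grows as
    2 ** (n - 1) value evaluations per player.
    """
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Probability that exactly this coalition precedes p in a random ordering.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


def payoff(coalition):
    """Toy game: 'a' is worth 10 alone; 'b' and 'c' are worth 20 only together."""
    total = 10.0 if "a" in coalition else 0.0
    if "b" in coalition and "c" in coalition:
        total += 20.0
    return total


phi = shapley_values(["a", "b", "c"], payoff)
print(phi)
```

The interaction payoff of 20 is split evenly between `b` and `c`, and the values sum to the payoff of the full coalition. One common way around the exponential cost is to average marginal contributions over randomly sampled orderings instead of all coalitions.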

4 Linear explanations

The LIME lineage: a neat method that uses penalised regression to produce local model explanations (Ribeiro, Singh, and Guestrin 2016). See their blog post.
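The core move can be sketched in a few lines: perturb the input, weight the perturbed points by proximity, and fit a penalised linear surrogate whose coefficients serve as the explanation. What follows is a simplified variant under my own assumptions (Gaussian perturbations, a Gaussian proximity kernel, a small ridge penalty, and a hypothetical `black_box`); the actual LIME method works on interpretable binary representations and selects a sparse set of features.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: nonlinear in x[0], quadratic in x[1]."""
    return math.sin(x[0]) + 0.1 * x[1] ** 2


def solve(a, b):
    """Solve the linear system a w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_linear_explanation(f, x0, n_samples=2000, scale=0.3, width=0.3,
                             ridge=1e-3, seed=0):
    """Fit a proximity-weighted ridge regression to f around x0.

    Returns (intercept, slopes); the slopes are the local explanation.
    """
    rng = random.Random(seed)
    d = len(x0)
    # Accumulate the normal equations (Z^T W Z + ridge * I) w = Z^T W y.
    gram = [[0.0] * (d + 1) for _ in range(d + 1)]
    rhs = [0.0] * (d + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        y = f([xi + di for xi, di in zip(x0, delta)])
        # Nearby perturbations count for more than distant ones.
        weight = math.exp(-sum(di * di for di in delta) / (2 * width ** 2))
        z = [1.0] + delta  # intercept column plus offsets from x0
        for i in range(d + 1):
            rhs[i] += weight * z[i] * y
            for j in range(d + 1):
                gram[i][j] += weight * z[i] * z[j]
    for i in range(1, d + 1):  # penalise the slopes but not the intercept
        gram[i][i] += ridge
    coef = solve(gram, rhs)
    return coef[0], coef[1:]


intercept, slopes = local_linear_explanation(black_box, [0.0, 1.0])
print(intercept, slopes)
```

Around the point `[0.0, 1.0]` the slopes should land near the true local gradient of the toy model, roughly 1 for the first feature and 0.2 for the second. The kernel width is a choice with real consequences: too wide and the surrogate stops being local, too narrow and it is fitted to very few effective samples.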

5 When do neurons mean something?

-

It would be very convenient if the individual neurons of artificial neural networks corresponded to cleanly interpretable features of the input. For example, in an “ideal” ImageNet classifier, each neuron would fire only in the presence of a specific visual feature, such as the color red, a left-facing curve, or a dog snout. Empirically, in models we have studied, some of the neurons do cleanly map to features. But it isn’t always the case that features correspond so cleanly to neurons, especially in large language models where it actually seems rare for neurons to correspond to clean features. This brings up many questions. Why is it that neurons sometimes align with features and sometimes don’t? Why do some models and tasks have many of these clean neurons, while they’re vanishingly rare in others?

6 By sparse autoencoder

Related: explanations via sparse autoencoders. See Sparse Autoencoders for explanation.

7 By ablation

Because ablation is ubiquitous in the ML literature, it has become a de facto admissible form of explanation. I am not a fan of how this is typically done. See ablation studies.

8 Incoming

Saphra, Interpretability Creationism

[…] Stochastic Gradient Descent is not literally biological evolution, but post-hoc analysis in machine learning has a lot in common with scientific approaches in biology, and likewise often requires an understanding of the origin of model behaviour. Therefore, the following holds whether looking at parasitic brooding behaviour or at the inner representations of a neural network: if we do not consider how a system develops, it is difficult to distinguish a pleasing story from a useful analysis. In this piece, I will discuss the tendency towards “interpretability creationism” – interpretability methods that only look at the final state of the model and ignore its evolution over the course of training—and propose a focus on the training process to supplement interpretability research.

Good idea, or too ad hominem?

-

Interpretable features tend to arise (at a given level of abstraction) if and only if the training distribution is diverse enough (at that level of abstraction).

Christoph Molnar, Interpretable Machine Learning “A Guide for Making Black Box Models Explainable”

George Hosu, A Parable Of Explainability

Connection to Gödel: Mathematical paradoxes demonstrate the limits of AI (Colbrook, Antun, and Hansen 2022; Heaven 2019)

The deep dream “activation maximisation” images could sort of be classified as a type of model explanation, e.g. Multifaceted neuron visualization (Nguyen, Yosinski, and Clune 2016)

Belatedly I notice that the Data Skeptic podcast did a whole season on interpretability

How explainable artificial intelligence can help humans innovate

Are Model Explanations Useful in Practice? Rethinking How to Support Human-ML Interactions.

Existing XAI methods are not useful for decision-making. Presenting humans with popular, general-purpose XAI methods does not improve their performance on real-world use cases that motivated the development of these methods. Our negative findings align with those of contemporaneous works.

Neuronpedia is “an open platform for interpretability research. Explore, steer, and experiment on AI models.”