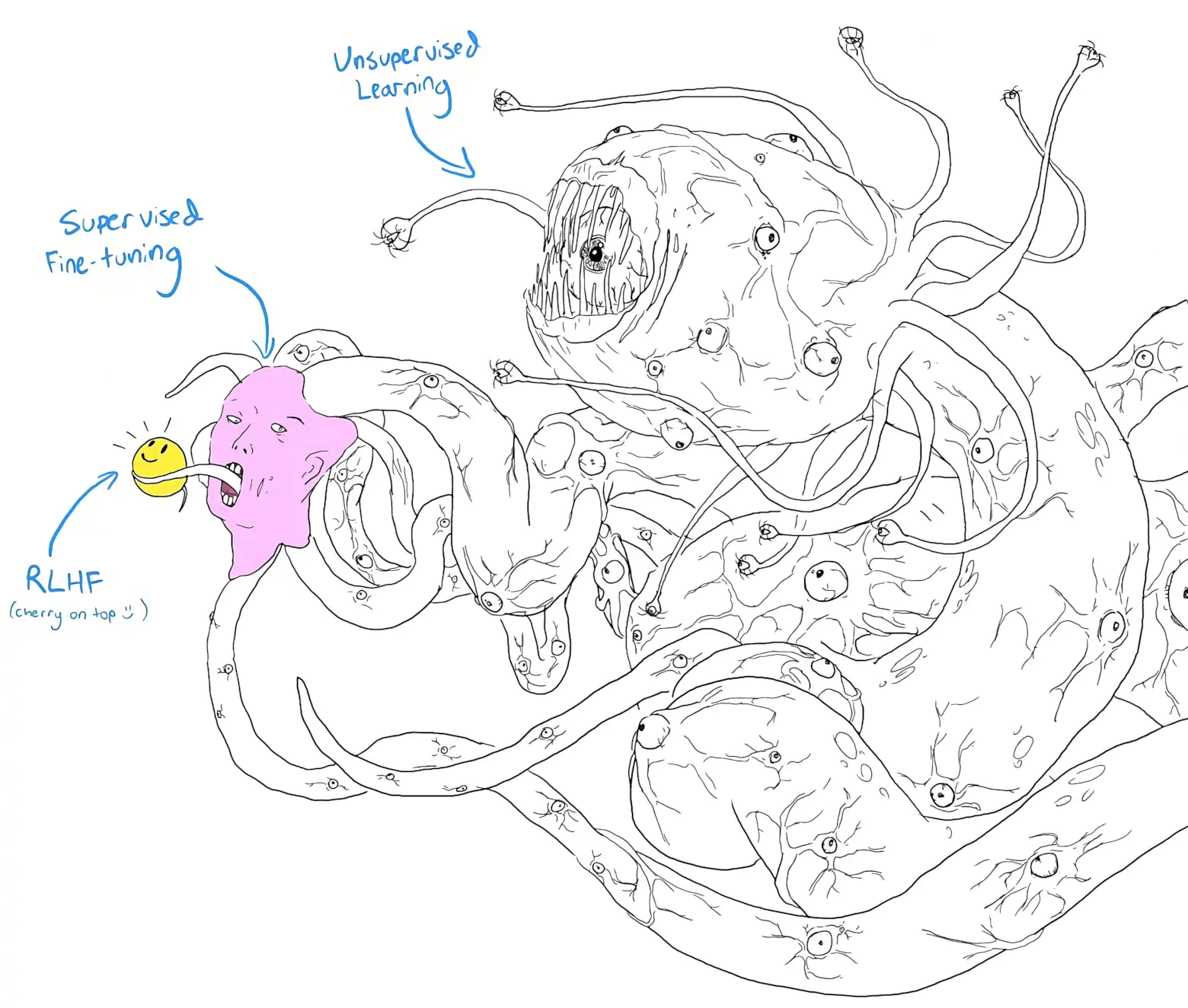

Transformer networks

Baby’s first foundation model

December 20, 2017 — April 1, 2025

Transformers are big attention networks with some extra tricks: self-attention, and usually a positional encoding as well.
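For my own reference, here is a minimal sketch of those two ingredients in NumPy: a single head of scaled dot-product self-attention, plus the sinusoidal positional encoding from the original transformer paper. All names here (`self_attention`, `sinusoidal_positions`, the weight matrices) are my own illustrative choices, not any library's API, and I assume an even `d_model` and no masking, multi-head splitting, or layer norm.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over rows of X."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # (n_tokens, n_tokens)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights

def sinusoidal_positions(n_tokens, d_model):
    """Sinusoidal positional encoding; assumes d_model is even."""
    pos = np.arange(n_tokens)[:, None]
    i = np.arange(d_model // 2)[None, :]
    angles = pos / (10000 ** (2 * i / d_model))
    P = np.zeros((n_tokens, d_model))
    P[:, 0::2] = np.sin(angles)   # even dimensions
    P[:, 1::2] = np.cos(angles)   # odd dimensions
    return P

# Toy data: 5 tokens with 8-dimensional embeddings and random projections.
rng = np.random.default_rng(0)
n_tokens, d_model = 5, 8
X = rng.normal(size=(n_tokens, d_model))
Wq, Wk, Wv = (rng.normal(size=(d_model, d_model)) for _ in range(3))

# Position information is simply added to the token embeddings.
out, weights = self_attention(X + sinusoidal_positions(n_tokens, d_model), Wq, Wk, Wv)
```

Each row of `weights` is a probability distribution over the tokens, and `out` is the corresponding weighted average of the value vectors. Real implementations stack many such heads and layers.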

I am no expert. Here are some good blog posts explaining everything, for my reference, but I will not write yet another one. This is a fast-moving area and I am not keeping track of it, so if you are on this page looking for guidance you are already in trouble.

These networks are massive (heh) in natural language processing and a contender in the race to achieve generally superhuman capability.

A (the?) key feature of such networks seems to be that they can be made extremely large, and extremely capable, but still remain trainable. This leads to interesting scaling laws. The scaling dynamics have led to fascinating pricing and upended the bitter lesson dynamics.

1 Introductions

So many.

TODO: rank in terms of lay-person-friendliness.

“Attention”, “Transformers”, in Neural Network “Large Language Models”: I quite like Cosma’s characteristically idiosyncratic way of learning and re-deriving things. I found his contrarian statistician’s take on the transformer architecture to be enlightening.

[2502.17814] An Overview of Large Language Models for Statisticians

Compact precise definition of a transformer function – foreXiv

Jay Alammar’s Illustrated Transformer series is good.

Lilian Weng, Large Transformer Model Inference Optimization

Lilian Weng, The Transformer Family Version 2.0

nostalgebraist, An exciting new paper on neural language models

John Thickstun, The Transformer Model in Equations

Large language models, explained with a minimum of math and jargon

A good paper read is Yannic Kilcher’s.

Xavier Amatriain, Transformer models: an introduction and catalog — 2023 Edition

Noam Shazeer’s Shape Suffixes post implicitly makes the case that transformers are simply a confusing way of smashing tensors together.

2 Power of

Transformers are pretty good at weird stuff, e.g. automata — see Unveiling Transformers with LEGO (Y. Zhang et al. 2022).

How about Bayesian inference? (Müller et al. 2022)

Can they be an engine of intelligence? Controversial — see the Stochastic Parrots paper (Bender et al. 2021), and the entire internet commentariat from November 2022 onwards.

What do they do in society? Who knows, but see AI democratization and AI economics.

3 As set functions

Transformers are neural set functions (!).
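A small numerical check of what I take this to mean, as a sketch under my own assumptions: without a positional encoding, a self-attention layer is permutation-equivariant, so shuffling the input rows just shuffles the output rows, and any symmetric pooling on top (here a mean) does not depend on the order at all. The function name `attention_layer` and the toy sizes are hypothetical.

```python
import numpy as np

def attention_layer(X, Wq, Wk, Wv):
    """Single-head self-attention with no positional encoding."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V

rng = np.random.default_rng(1)
X = rng.normal(size=(6, 4))                        # a "set" of 6 elements
Wq, Wk, Wv = (rng.normal(size=(4, 4)) for _ in range(3))
perm = rng.permutation(6)                          # arbitrary reordering

Y = attention_layer(X, Wq, Wk, Wv)
Y_perm = attention_layer(X[perm], Wq, Wk, Wv)

# Equivariance: permuting inputs permutes outputs in the same way.
equivariant = np.allclose(Y_perm, Y[perm])
# Invariance after symmetric pooling: the mean ignores the ordering.
invariant_pool = np.allclose(Y_perm.mean(axis=0), Y.mean(axis=0))
```

Adding a positional encoding to `X` before the layer deliberately breaks this symmetry, which is how the same architecture handles sequences.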

4 As recurrent state

5 For forecasting of non-linguistic material

6 Practicalities

For you and me, see AI democratization.

7 Embedding vector databases

8 Incoming

LMQL: Programming Large Language Models: “LMQL is a programming language for language model interaction.” (Beurer-Kellner, Fischer, and Vechev 2022)

LMQL generalises natural language prompting, making it more expressive while remaining accessible. For this, LMQL builds on top of Python, allowing users to express natural language prompts that also contain code. The resulting queries can be directly executed on language models like OpenAI’s GPT models. Fixed answer templates and intermediate instructions allow the user to steer the LLM’s reasoning process.

-

TL;DR — In-context learning is a mysterious emergent behaviour in large language models (LMs) where the LM performs a task just by conditioning on input-output examples, without optimising any parameters. In this post, we provide a Bayesian inference framework for understanding in-context learning as “locating” latent concepts the LM has acquired from pretraining data. This suggests that all components of the prompt (inputs, outputs, formatting, and the input-output mapping) can provide information for inferring the latent concept. We connect this framework to empirical evidence where in-context learning still works when provided training examples with random outputs. While output randomisation cripples traditional supervised learning algorithms, it only removes one source of information for Bayesian inference (the input-output mapping).
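My reading of the framework in that summary, written out as a formula. The notation is my own shorthand and not necessarily the authors': $\theta$ is the latent concept, and the prompt consists of the in-context examples plus the test input. The model's prediction marginalises over concepts, weighted by how well each concept explains the prompt:

$$
p(\text{output} \mid \text{prompt})
= \int p(\text{output} \mid \theta, \text{prompt})\, p(\theta \mid \text{prompt})\, \mathrm{d}\theta,
\qquad
p(\theta \mid \text{prompt}) \propto p(\text{prompt} \mid \theta)\, p(\theta).
$$

On this view, randomising the outputs in the examples weakens only one of the factors contributing to $p(\text{prompt} \mid \theta)$. The inputs and the formatting still carry information about $\theta$, which would be consistent with in-context learning surviving random labels.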

Large Language Models as General Pattern Machines

We observe that pre-trained large language models (LLMs) are capable of autoregressively completing complex token sequences—from arbitrary ones procedurally generated by probabilistic context-free grammars (PCFG), to more rich spatial patterns found in the Abstract Reasoning Corpus (ARC), a general AI benchmark, prompted in the style of ASCII art. Surprisingly, pattern completion proficiency can be partially retained even when the sequences are expressed using tokens randomly sampled from the vocabulary. These results suggest that without any additional training, LLMs can serve as general sequence modellers, driven by in-context learning. In this work, we investigate how these zero-shot capabilities may be applied to problems in robotics—from extrapolating sequences of numbers that represent states over time to complete simple motions, to least-to-most prompting of reward-conditioned trajectories that can discover and represent closed-loop policies (e.g., a stabilising controller for CartPole). While difficult to deploy today for real systems due to latency, context size limitations, and compute costs, the approach of using LLMs to drive low-level control may provide an exciting glimpse into how the patterns among words could be transferred to actions.

karpathy/nanoGPT: The simplest, fastest repository for training/finetuning medium-sized GPTs.