Differentiable learning of automata

October 14, 2016 — March 31, 2025


Learning stack machines, random access machines, nested hierarchical parsing machines, Turing machines, and whatever other automata-with-memory you wish, from data. In other words, teaching computers to program themselves via a deep learning formalism.

This is an obvious idea; indeed, it is one of the original ideas from the GOFAI days. How to make it work in NNs is not obvious, but there are some charming toy examples. Obviously, a hypothetical superhuman Artificial General Intelligence would be good at handling computer-science problems.

Directly learning automata is not the absolute hippest research area right now, though, on account of being hard in general. Some progress has been made. I feel that most of the hyped research that looks like differentiable computer learning is either in the slightly-better-contained area of reinforcement learning, where more progress can be made, or in the hot area of LLMs, which are harder to explain but solve the same kind of problems while looking different inside.

Related: grammatical inference, memory machines, computational complexity results in NNs, reasoning with LLMs.

1 Differentiable Cellular Automata

Mordvintsev et al. (2020) is a fun paper. They improve upon boring old-school cellular automata in several ways (not all of which are completely novel, but the combination seems to be):

- Continuous states whose rules can be differentiably learned

- Use of Sobel filters for CA based on local gradients

- Framing the problem as “designing attractors of a dynamical system”

- Clever use of noise in the training.
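To make the ingredients above concrete, here is a minimal NumPy sketch of a single update step of such a continuous-state automaton. It is a sketch under stated assumptions, not the paper's implementation: the names `perceive`, `nca_step`, `w1`, `b1`, `w2` and `fire_rate` are mine, the grid wraps around at the edges for simplicity, and the random per-cell update mask stands in for just one simple kind of noise. Training is omitted; in practice one would write the same step in an autodiff framework and backpropagate a loss through many repeated steps.

```python
import numpy as np

# Fixed 3x3 perception kernels: the cell itself plus local gradient estimates.
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float) / 8.0
SOBEL_Y = SOBEL_X.T
IDENTITY = np.zeros((3, 3))
IDENTITY[1, 1] = 1.0


def filter3x3(state, kernel):
    """Cross-correlate each channel of an (H, W, C) grid with a 3x3 kernel.

    Edges wrap around (toroidal grid), which is an assumption of this sketch.
    """
    out = np.zeros_like(state)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            # Rolling by (-dy, -dx) moves neighbour (y+dy, x+dx) onto (y, x).
            shifted = np.roll(state, shift=(-dy, -dx), axis=(0, 1))
            out += kernel[dy + 1, dx + 1] * shifted
    return out


def perceive(state):
    """Stack identity and Sobel responses: (H, W, C) -> (H, W, 3C)."""
    kernels = (IDENTITY, SOBEL_X, SOBEL_Y)
    return np.concatenate([filter3x3(state, k) for k in kernels], axis=-1)


def nca_step(state, w1, b1, w2, rng, fire_rate=0.5):
    """One residual update; the same small network is applied at every cell."""
    features = perceive(state)                      # (H, W, 3C)
    hidden = np.maximum(features @ w1 + b1, 0.0)    # per-cell ReLU layer
    delta = hidden @ w2                             # proposed state change
    # Each cell updates independently with probability fire_rate.
    mask = rng.random(state.shape[:2] + (1,)) < fire_rate
    return state + delta * mask
```

The learnable rule lives entirely in `w1`, `b1` and `w2`; the perception kernels are fixed. Iterating `nca_step` from a seed state and asking the result to match a target image is what turns this into the "designing attractors" problem.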

I am instinctively annoyed by the unfashionable loss function, which is not, e.g., some cool optimal transport metric, even though that is what this problem looks like it wants to me. But hey, it works, so don’t listen to me about that. Logical extensions, such as creating a model which can produce different patterns parametrically and interpolate between them, also seem to leap out at me. I am also curious about an information bottleneck analysis which would give us restrictions on what patterns can be learned.

More general models of morphogenesis are out there, obviously. I will not even touch upon how this happens in real creatures as opposed to fake emoji monsters for now.

Jonathan Whitaker, in Fun With Neural Cellular Automata, shows how to make steerable automata (Mordvintsev and Niklasson 2021; Randazzo, Mordvintsev, and Fouts 2023), which end up being like steerable diffusions.

TBD: connection to bio computing, and the particular special case, models of pattern formation.

In Differentiable Logic CA: from Game of Life to Pattern Generation they push this all the way to a differentiable version of Conway’s Game of Life, and thus credible Turing completeness, by working with discrete gradients.
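As I understand it, the trick that makes discrete logic trainable here is a continuous relaxation: each gate becomes a softmax-weighted mixture of real-valued versions of Boolean operations, and is snapped back to a single hard gate after training. The following is a toy sketch of that idea under my own assumptions, not the article's code; the four-gate menu and the names `soft_gate` and `hard_gate` are hypothetical choices for illustration.

```python
import math

# Real-valued relaxations of Boolean gates; they agree with the usual
# truth tables whenever both inputs are exactly 0 or 1.
GATES = {
    "and": lambda a, b: a * b,
    "or": lambda a, b: a + b - a * b,
    "xor": lambda a, b: a + b - 2 * a * b,
    "nand": lambda a, b: 1 - a * b,
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_gate(a, b, logits):
    """Training-time gate: a differentiable mixture over all candidate gates."""
    weights = softmax(logits)
    return sum(w * gate(a, b) for w, gate in zip(weights, GATES.values()))


def hard_gate(a, b, logits):
    """Inference-time gate: keep only the candidate with the largest logit."""
    names = list(GATES)
    best = max(range(len(names)), key=lambda i: logits[i])
    return GATES[names[best]](a, b)
```

With uniform logits the soft gate is an even blend of all candidates; as one logit comes to dominate during training, the soft gate converges to the corresponding hard gate, so the learned circuit ends up purely discrete.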

2 Are LLMs even Turing complete, bro?

3 Incoming

Blazek claims his neural networks implement predicate logic directly and yet are tractable, which would be interesting to look into (Blazek and Lin 2021, 2020; Blazek, Venkatesh, and Lin 2021).

Google branded: Differentiable neural computers.

Christopher Olah’s characteristically pedagogic intro.

Adrian Colyer’s introduction to neural Turing machines.

Andrej Karpathy’s memory machine list.

Facebook’s GTN:

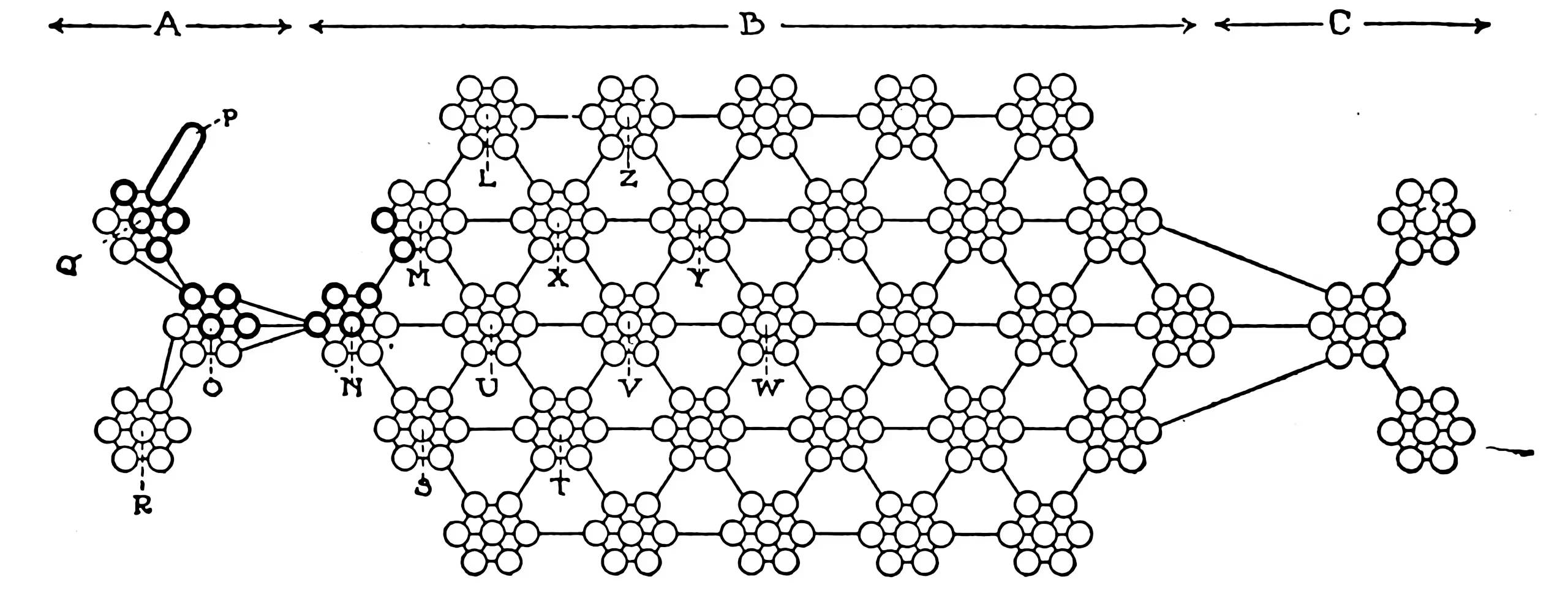

GTN is an open source framework for automatic differentiation with a powerful, expressive type of graph called weighted finite-state transducers (WFSTs). Just as PyTorch provides a framework for automatic differentiation with tensors, GTN provides such a framework for WFSTs. AI researchers and engineers can use GTN to more effectively train graph-based machine learning models.
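To give a flavour of what differentiating through a weighted automaton means, here is a plain-Python sketch of the forward score of a small acyclic weighted acceptor in the log semiring. It deliberately does not use GTN's API; `forward_score` and its arc format are assumptions of this sketch, and it requires node indices to be topologically ordered.

```python
import math


def logadd(a, b):
    """Stable log(exp(a) + exp(b)), treating -inf as log(0)."""
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def forward_score(num_nodes, arcs, start, accept):
    """Log-sum-exp of the total weight of every path from start to accept.

    arcs is a list of (src, dst, label, weight) tuples with src < dst, so
    that visiting arcs in order of src is a valid topological sweep.
    """
    score = [-math.inf] * num_nodes
    score[start] = 0.0
    for src, dst, _label, weight in sorted(arcs, key=lambda arc: arc[0]):
        score[dst] = logadd(score[dst], score[src] + weight)
    return score[accept]


# Two parallel arcs from node 0 to node 1, then one arc to the accept node 2.
arcs = [(0, 1, "a", 0.0), (0, 1, "b", 0.0), (1, 2, "c", 1.0)]
total = forward_score(3, arcs, start=0, accept=2)
```

The score is a smooth function of the arc weights, and its derivative with respect to one weight is the posterior probability of passing through that arc. That is the quantity an autodiff framework for graphs hands back, so the weights can be trained by gradient descent like any other parameters.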