Deep learning as a dynamical system

August 13, 2018 — October 30, 2022


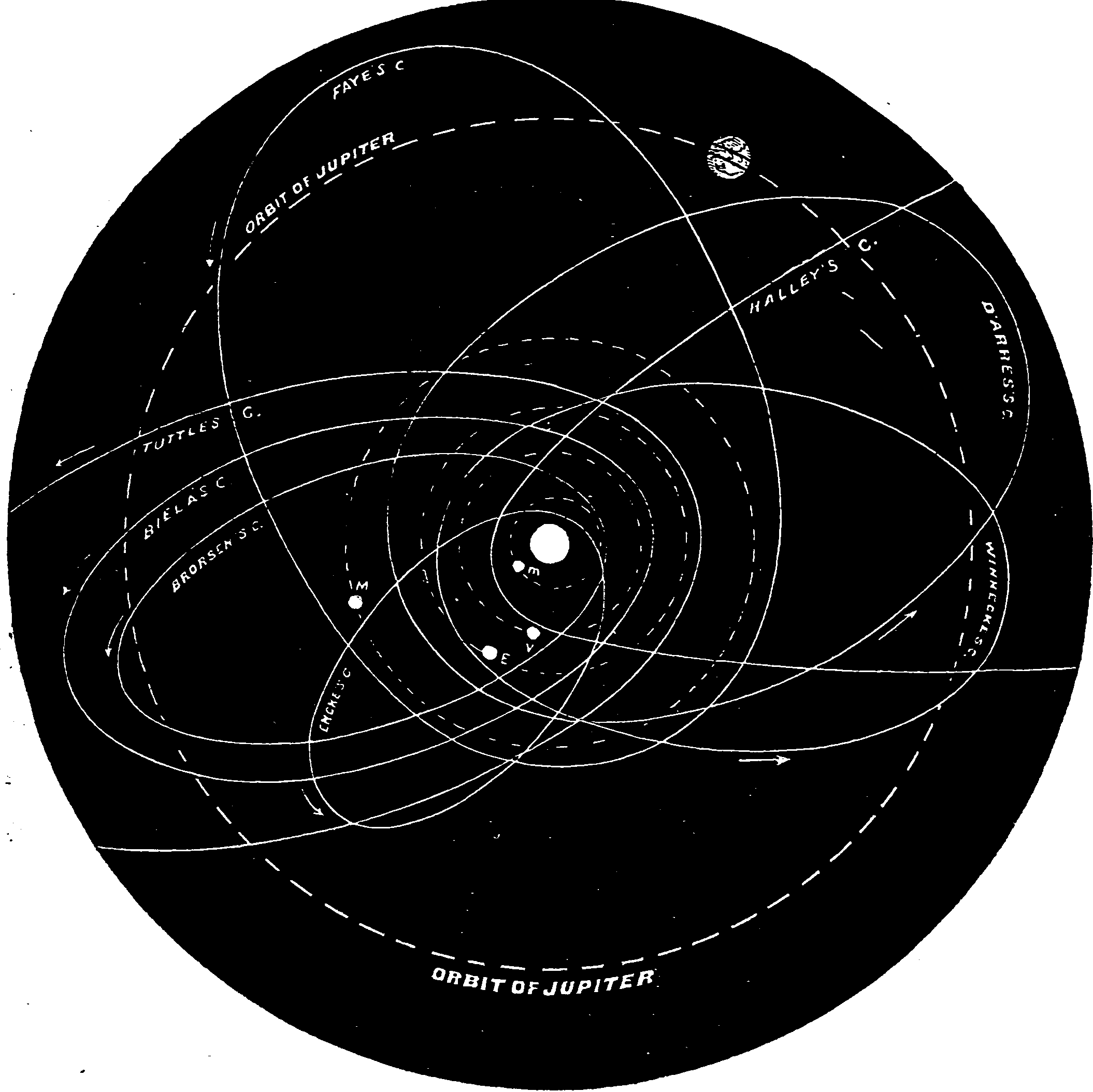

A thread of research on neural network learning tries to make the learning of prediction functions tractable, or at least comprehensible, by treating the networks as dynamical systems. This yields some nice insights and lets us bring the tools of dynamical systems theory, such as stability analysis, to bear on neural networks.

I’ve been interested in this since reading Haber and Ruthotto (2018), but the idea got a kick when T. Q. Chen et al. (2018) won a best paper award at NeurIPS for directly learning the ODEs themselves, using related methods, which makes the whole approach look more useful.

There are interesting connections here: we can also think about the relationships between stability, ergodicity and criticality. A different notion is input-stability in learning.

1 Convnets/Resnets as discrete PDE approximations

Arguing that neural networks are, in the limit, approximants to quadrature solutions of certain ODEs provides a new perspective on how these things work, and suggests that certain ODE tricks might be imported. This is mostly what Haber, Ruthotto and co-authors do. “Stability of training” is a useful outcome here: gradient signals remain available if the network preserves the energy of the signal as it propagates through the layers (Haber and Ruthotto 2018; Haber et al. 2017; Chang et al. 2018; Ruthotto and Haber 2020), which we can interpret as stability of the implied PDE approximator itself. They mean stability in the sense of energy-preserving operators, or of stability in linear systems.
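To make the ODE reading concrete, here is a minimal sketch in which each residual layer is one forward Euler step of dx/dt = tanh(Wx + b). The antisymmetric-minus-damping parameterization of W is my paraphrase of the stability idea in the papers cited above, not their exact architecture. The function names, the damping `gamma`, and the choice to share W across layers are all assumptions made for illustration.

```python
import numpy as np

def antisymmetric(K, gamma=0.0):
    # K - K^T is skew-symmetric, so its eigenvalues are purely imaginary;
    # subtracting gamma * I shifts every eigenvalue's real part to -gamma.
    return K - K.T - gamma * np.eye(K.shape[0])

def resnet_forward(x, W, b, h, n_layers):
    # Each residual layer is one forward Euler step of dx/dt = tanh(W x + b).
    # Sharing W and b across layers is a simplification for this sketch.
    for _ in range(n_layers):
        x = x + h * np.tanh(W @ x + b)
    return x

rng = np.random.default_rng(0)
W = antisymmetric(0.1 * rng.standard_normal((4, 4)), gamma=0.1)
b = np.zeros(4)
x0 = rng.standard_normal(4)

# Same "time" horizon T = 1 at two depths: 100 layers vs 200 layers.
x_coarse = resnet_forward(x0, W, b, h=1 / 100, n_layers=100)
x_fine = resnet_forward(x0, W, b, h=1 / 200, n_layers=200)

print(np.linalg.eigvals(W).real)           # real parts all sit at -gamma
print(np.linalg.norm(x_coarse - x_fine))   # gap between the two depths
```

Doubling the depth while halving the step size leaves the output nearly unchanged here, which is the sense in which the layers discretize a single underlying flow.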

Another fun trick from this toolbox is the ability to interpolate and discretize resnets, re-sampling the layers and weights themselves, by working out a net which solves the same discretized differential equation. AFAICT, this essentially allows one to upscale and downscale nets and/or the training data through their infinite-resolution limits. That sounds cool, but I have not seen much of it in use. Is the complexity worth it in practice?
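A toy version of the depth re-sampling idea, as I understand it: read layer k of an n-layer net as a sample of a weight function W(t) at t = k/n, interpolate that function onto a finer or coarser grid, and rescale the Euler step to match. This is a sketch under those assumptions, not the procedure from any of the cited papers; `resample_depth` and `forward` are hypothetical names, and linear interpolation is just the simplest choice.

```python
import numpy as np

def resample_depth(weights, n_new):
    # weights has shape (n_old, d, d); layer k is read as W(t) at t = k / n_old.
    n_old = weights.shape[0]
    t_old = np.arange(n_old) / n_old
    t_new = np.arange(n_new) / n_new
    flat = weights.reshape(n_old, -1)
    # Linearly interpolate each weight entry along depth. np.interp holds the
    # last layer's value for any t_new beyond t_old[-1].
    cols = [np.interp(t_new, t_old, flat[:, j]) for j in range(flat.shape[1])]
    return np.stack(cols, axis=1).reshape((n_new,) + weights.shape[1:])

def forward(x, weights, T=1.0):
    # Forward Euler with step h = T / depth, so total "time" stays fixed
    # when the depth changes.
    h = T / weights.shape[0]
    for W in weights:
        x = x + h * np.tanh(W @ x)
    return x

rng = np.random.default_rng(1)
A, B = 0.1 * rng.standard_normal((2, 3, 3))
t = np.arange(8) / 8
weights8 = A + t[:, None, None] * B       # 8 layers, weights varying smoothly in depth
weights16 = resample_depth(weights8, 16)  # the "same" net at twice the depth

x0 = rng.standard_normal(3)
gap = np.linalg.norm(forward(x0, weights8) - forward(x0, weights16))
print(weights16.shape, gap)
```

How small `gap` turns out to be depends on how smoothly the weights vary with depth; trained weights need not be smooth in that sense unless the training procedure encourages it.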

Combining depth and time, we get Gu et al. (2021).

2 How much energy do I lose in other networks?

Wiatowski, Grohs, and Bölcskei (2018) also consider energy conservation, but without the implied PDE structure. Or maybe a PDE structure is still implied and I am too dense to see it.

3 Learning forward predictions with energy conservation

Slightly different again, AFAICT, because now we are trying to predict dynamics, rather than studying the dynamics of the neural network itself. The problem looks like it might be closely related, though, because we are still demanding an energy conservation of sorts between input and output.

4 This sounds like chaos theory

Measure-preserving systems? Complex dynamics? I cannot guarantee that chaotic dynamics are not involved; we should probably check that out. See edge of chaos.

5 Learning parameters by filtering

6 In reservoir computing

See reservoir computing.