Ergodicity and mixing

Things that probably happen eventually on average

October 17, 2011 — February 13, 2022


🏗

- The World’s Simplest Ergodic Theorem

- Von Neumann and Birkhoff’s Ergodic Theorems

- The difference between statistical ensembles and sample spaces: Mehmet Süzen, Alignment between statistical mechanics and probability theory

Relevance to actual stochastic processes and dynamical systems, especially linear and non-linear system identification.

Keywords to look up:

- probability-free ergodicity

- Birkhoff ergodic theorem

- Frobenius-Perron operator

- Quasicompactness, correlation decay

- C&C CLT for Markov chains — Nagaev
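For reference, here is the standard statement of the Birkhoff (pointwise) ergodic theorem from the keyword list above. The notation is chosen here and is not taken from any particular source. The content is the familiar slogan "time averages equal space averages", which holds when the invariant σ-algebra is trivial.

```latex
Let $(\Omega, \mathcal{F}, \mu)$ be a probability space, let $T : \Omega \to \Omega$ be
measure-preserving, i.e. $\mu(T^{-1}A) = \mu(A)$ for all $A \in \mathcal{F}$, let
$\mathcal{I} = \{A \in \mathcal{F} : T^{-1}A = A\}$ be the $\sigma$-algebra of invariant sets,
and let $f \in L^1(\mu)$. Then for $\mu$-almost every $\omega$,
\[
  \lim_{n \to \infty} \frac{1}{n} \sum_{k=0}^{n-1} f\bigl(T^k \omega\bigr)
  = \mathbb{E}_\mu\!\left[f \mid \mathcal{I}\right](\omega).
\]
If $T$ is ergodic (every $A \in \mathcal{I}$ has $\mu(A) \in \{0, 1\}$), the right-hand side
is the constant $\int_\Omega f \, d\mu$.
```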

Not much material here yet, but please see learning theory for dependent data for some interesting categorisations of mixing, and for how miscellaneous mixing conditions transfer to statistical estimators.

My main interest is the following 4-stages-of-grief kind of setup.

- Often I can prove that I can learn a thing from my data if it is stationary.

- But I rarely have stationarity, so showing at least that the process is ergodic might be more useful; that would follow from appropriate mixing conditions, which do not necessarily assume stationarity.

- Except that these conditions are often hard to prove, or to estimate, or they require knowing the very parameters in question, so I suspect that some kind of partial identifiability result might be closer to what I need.

- Furthermore, I would usually prefer a finite-sample result to an asymptotic guarantee. Sometimes I can get those from learning theory for dependent data.

That last one is TBC.
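A toy simulation can show why stationarity alone is not enough to learn from a single trajectory. This is a minimal sketch, and both processes were chosen here for illustration; they do not come from the text above. Both are stationary:

- A Gaussian AR(1) with |φ| < 1 is mixing, and hence ergodic. The time average along one path therefore converges to the ensemble mean.
- The "random level" process x_t = z + e_t draws z once per path. It is stationary but not ergodic: each path's time average converges to that path's own z, so no amount of data from one trajectory recovers the ensemble mean.

The helper names are hypothetical.

```python
import random
import statistics


def ar1_path(n, phi, rng):
    """Stationary Gaussian AR(1): x_t = phi * x_{t-1} + e_t, e_t ~ N(0, 1), |phi| < 1.

    Mixing, hence ergodic: one path's time average converges to the mean, 0.
    """
    # Draw x_0 from the stationary law N(0, 1 / (1 - phi^2)) so the path is stationary from the start.
    x = rng.gauss(0.0, (1.0 / (1.0 - phi * phi)) ** 0.5)
    path = []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        path.append(x)
    return path


def random_level_path(n, rng):
    """Stationary but NOT ergodic: x_t = z + e_t, with one level z ~ N(0, 1) per path.

    One path's time average converges to its own z, not to the ensemble mean 0.
    """
    z = rng.gauss(0.0, 1.0)
    return [z + rng.gauss(0.0, 1.0) for _ in range(n)]


def spread_of_time_averages(make_path, n_paths, n_steps, seed=0):
    """Standard deviation, across independent paths, of each path's time average."""
    rng = random.Random(seed)
    averages = [statistics.fmean(make_path(n_steps, rng)) for _ in range(n_paths)]
    return statistics.pstdev(averages)


if __name__ == "__main__":
    # Ergodic case: the spread shrinks as paths get longer. Non-ergodic case: it stays near 1.
    for n_steps in (500, 5000):
        erg = spread_of_time_averages(lambda n, rng: ar1_path(n, 0.9, rng), 40, n_steps)
        non = spread_of_time_averages(random_level_path, 40, n_steps)
        print(f"n={n_steps}: ergodic AR(1) {erg:.3f}, random level {non:.3f}")
```

Lengthening the paths only helps in the ergodic case. In the non-ergodic case the spread of per-path averages is dominated by the variance of z, which does not shrink with n.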

1 Coupling from the past

Dan Piponi explains coupling from the past for Markov chains via functional programming.
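Piponi's post works in Haskell. What follows is not his code but a minimal Python sketch of monotone coupling from the past, Propp and Wilson's construction. It assumes a toy chain chosen here for illustration: a walk on {0, ..., n} that steps up or down with probability 1/2 and stays put when a step would leave the range. The transition matrix is symmetric, so the stationary distribution is uniform.

Two details matter for exactness:

- The random numbers for each past time step are reused on every restart.
- Only the top and bottom chains need to be tracked, because the update is monotone.

The names `update` and `cftp` are hypothetical.

```python
import random
from collections import Counter


def update(x, u, n):
    """Monotone update for a walk on {0, ..., n}: up if u < 1/2, else down, clamped at 0 and n.

    For a fixed u this map is non-decreasing in x, which is what monotone CFTP needs.
    """
    return min(x + 1, n) if u < 0.5 else max(x - 1, 0)


def cftp(n, rng):
    """One exact draw from the stationary law (uniform on {0, ..., n}) via monotone CFTP."""
    us = []  # us[k] drives the step from time -(k+1) to time -k; reused on every restart
    T = 1
    while True:
        while len(us) < T:  # only extend the randomness further into the past
            us.append(rng.random())
        lo, hi = 0, n  # bottom and top chains, both started at time -T
        for k in range(T - 1, -1, -1):
            lo = update(lo, us[k], n)
            hi = update(hi, us[k], n)
        if lo == hi:  # every start state has coalesced by time 0
            return lo
        T *= 2  # not coalesced: start twice as far back


if __name__ == "__main__":
    rng = random.Random(0)
    print(Counter(cftp(4, rng) for _ in range(5000)))
```

Regenerating fresh random numbers on each restart, or stopping at the coalescence time instead of at time 0, would both bias the output.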

2 Mixing zoo

A recommended partial overview is Bradley (2005). 🏗

2.1 β-mixing

🏗

2.2 ϕ-mixing

🏗

2.3 Sequential Rademacher complexity

🏗

3 Lyapunov exponents

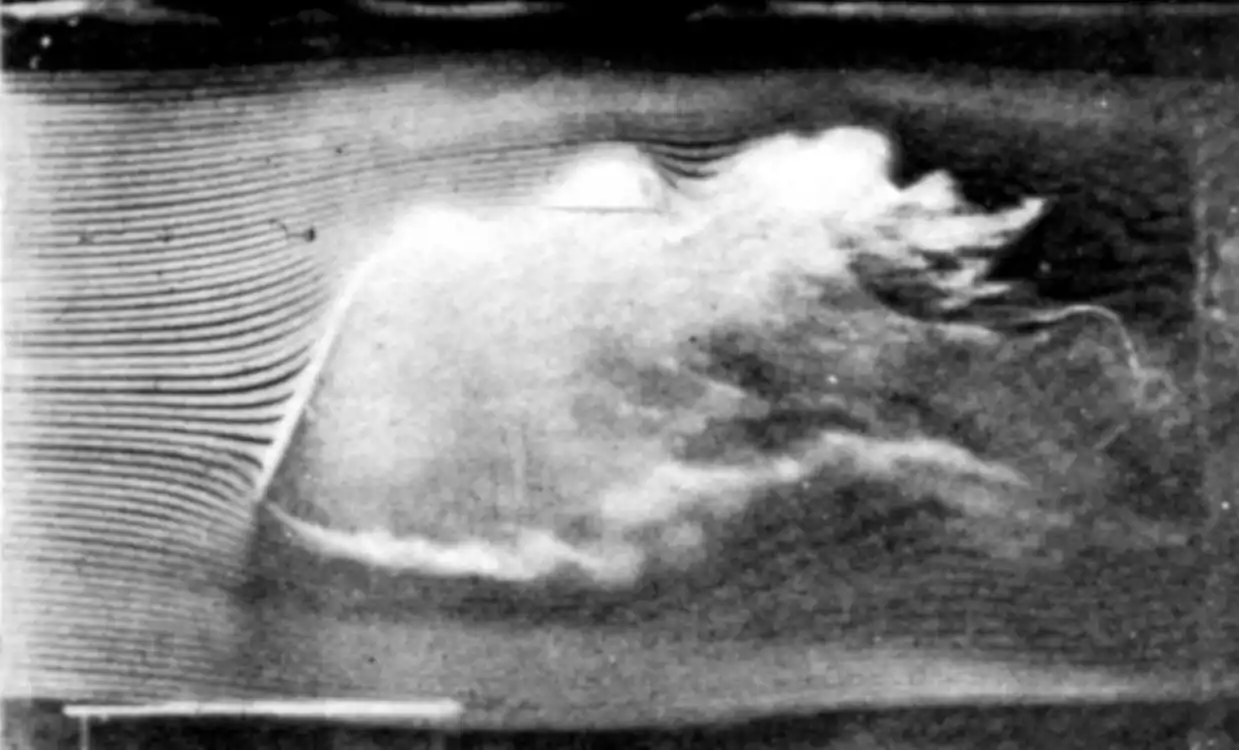

Chaotic systems are unpredictable. Or rather, chaotic systems are not deterministically predictable in the long run. You can make predictions if you weaken one of these requirements: you can make deterministic predictions in the short run, or statistical predictions in the long run. Lyapunov exponents measure how quickly the short run turns into the long run.
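Here is a minimal sketch of estimating a Lyapunov exponent for a one-dimensional map. It uses the logistic map x ↦ r x (1 − x), which is an example system chosen here and not one from the text.

The exponent is the orbit average of log|f′(x_n)|, where f′(x) = r (1 − 2x). A positive value λ means nearby orbits separate roughly like e^{λn}. An initial error δ therefore reaches size Δ after about (1/λ) log(Δ/δ) steps, which is one rough way to quantify when the short run becomes the long run.

Two reference values make useful checks:

- At r = 4 the exact exponent is ln 2.
- At r = 2.5 the orbit settles onto the fixed point 0.6 and the exponent is ln(1/2) < 0.

The function name `logistic_lyapunov` is hypothetical.

```python
import math


def logistic_lyapunov(r, x0=0.2, n_transient=1_000, n_iter=100_000):
    """Estimate the Lyapunov exponent of x -> r * x * (1 - x) along a single orbit.

    lambda ~= (1/N) * sum_n log|f'(x_n)|, with f'(x) = r * (1 - 2x).
    """
    x = x0
    for _ in range(n_transient):  # discard the transient so we sample the attractor
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_iter):
        total += math.log(abs(r * (1.0 - 2.0 * x)))  # log of the local stretching factor
        x = r * x * (1.0 - x)
    return total / n_iter


if __name__ == "__main__":
    for r in (2.5, 3.2, 4.0):
        print(f"r={r}: lambda ~ {logistic_lyapunov(r):.4f}")
```

This single-orbit average is itself an ergodic average: it estimates the exponent only because the orbit samples the map's invariant measure.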