Clickbait bandit problems

On using computers to program humans

May 18, 2017 — September 28, 2023


The science of treating consumers as near-passive objects of surveillance and control. Skinnerian human behaviour control, limbic consumerism. This is a powerful strategy. Relying on people’s rationality and/or agency to get things done has a less auspicious track record.

1 On designing addiction

Recommended reading before blaming someone for having no attention span: Michael Schulson, If the internet is addictive, why don’t we regulate it?

As a consultant to Silicon Valley startups, Eyal helps his clients mimic what he calls the ‘narcotic-like properties’ of sites such as Facebook and Pinterest. His goal, Eyal told Business Insider, is to get users ‘continuing through the same basic cycle. Forever and ever.’

[…] For a tech company in the attention economy, the longer you’re engaged by variable rewards, the more time you spend online, and the more money they make through ad revenue.

Hooked: how pokies are designed to be addictive is a data visualisation of poker machines, based on Addiction by Design (Schüll 2014). See also How electronic gambling machines work, by Charles Livingstone.

People who spend hours and hundreds on machine games are not after big wins, but escape. They go to machines to escape from unpredictable life into the “zone.”

The primary objective that machine gambling addicts have is not to win, but to stay in the zone. The zone is a state that suspends real life, and reduces the world to the screen and the buttons of the machine. Entering the zone is easiest when gamblers can get into a rhythm. Anything that disrupts the rhythm becomes an annoyance. This is true even when the disruption is winning the game.

They also observe something interesting about the limitations of designed addiction:

despite following a lot of the same strategies that gambling machine designers did, those app creators never did create an army of self-improvement addicts. I haven’t heard any tales of someone losing his job because he was too busy getting more steps with his Fitbit, neglecting her marriage because she was too busy learning a new language on Duolingo, or dropping out of school to make more time for Khan Academy. Why is that?

François Chollet argues:

…social network companies can simultaneously measure everything about us, and control the information we consume. And that’s an accelerating trend. When you have access to both perception and action, you’re looking at an AI problem. You can start establishing an optimization loop for human behaviour, in which you observe the current state of your targets and keep tuning what information you feed them, until you start observing the opinions and behaviours you wanted to see. A large subset of the field of AI — in particular “reinforcement learning” — is about developing algorithms to solve such optimization problems as efficiently as possible, to close the loop and achieve full control of the target at hand — in this case, us. By moving our lives to the digital realm, we become vulnerable to that which rules it — AI algorithms.

The TikTokWar examines an interesting escalation:

This is where it is important to understand the history of ByteDance, TikTok’s Chinese owner. ByteDance’s breakthrough product was a news app called TouTiao; whereas Facebook evolved from being primarily a social network to an algorithmic feed, TouTiao was about the feed and the algorithm from the beginning. The first time a user opened TouTiao, the news might be rather generic, but every scroll, every linger over a story, every click, was fed into a feedback loop that refined what it was the user saw.

Meanwhile all of that data fed back into TouTiao’s larger machine learning processes, which effectively ran billions of A/B tests a day on content of all types, cross-referenced against all of the user data it could collect. Soon the app was indispensable to its users, able to anticipate the news they cared about with nary a friend recommendation in sight. That was definitely more of a feature than a bug in China, where any information service was subject to not just overt government censorship, but also an expectation of self-censorship; all the better to control everything that end users saw, without the messiness of users explicitly recommending content themselves.

A cynic might say “soon you will look back fondly on when the fake news had a personal touch”.

Rob Horning, I write the songs:

Apologists for TikTok often tout how fun it is. Whenever I see something described as “fun,” I reach for my Baudrillard to trot out his passages about the “fun morality” — the compulsory nature of enjoyment in a consumer society. Fun, as I interpret the word, is not just a synonym for having a good time; […] It indexes the degree to which our capacity to experience pleasure has been subsumed by consumerism. That is, “fun” marks how effectively we’ve been produced as an audience.

“Fun” often evokes the kinds of pleasures typified by the “experience economy,” in which retail is conflated with tourism, saturated with an “authenticity” that deconstructs itself. It also includes media “experiences” as well — on-screen entertainment, often presented serially in feeds. (Channel flipping and “doomscrolling” are fun’s inverted cousins.) It conceives of pleasure as a commodity, as an end that can be abstracted from its means. It presumes — or rather prescribes — a sense of time as a uniform emptiness, a blankness that must be filled with various prefabricated chunks of spent attention. “Fun” is when we defeat boredom; being bored by default is fun’s prerequisite.

See also fun.

Louise Perry, How OnlyFans became the porn industry’s great lockdown winner – and at what cost:

The historian David Courtwright has coined the term “limbic capitalism” to describe

a technologically advanced but socially regressive business system in which global industries, often with the help of complicit governments and criminal organisations, encourage excessive consumption and addiction. They do so by targeting the limbic system, the part of the brain responsible for feeling…

Limbic capitalism is the reason the most successful apps are brightly coloured like fresh fruit and glint like water. Our primitive brains helplessly seek out the stimuli we have evolved to be attracted to, and the beneficiaries of limbic capitalism have become wise to these instincts, learning over time how best to capture them.

Hmm. I wonder if that book is any good? I have not read it.

At the very least, I have notes about nomenclature. “Limbic capitalism” feels slightly off. Should it not be “limbic consumerism”? The OnlyFans creators at least seem to be mostly low in capital.

And if we were going to lean into capitalism, then we should have gone for the punchier fapitalism.

Compare and contrast superstimuli.

2 Skinnerian behaviour control

What I’m specifically interested in is the use of, e.g., bandit models to model consumers and their interactions, because it’s especially rich in metaphor. This is probably a superset of the gamification idea; in that area there is a particular subset of ways to addict people that we foreground.

The phrase “bandit problems” comes, by the way, from the “one-armed bandit”, the poker machine, extended into a mathematical model of exploring the world by pulling the arms of poker machines whose payouts are unknown. There is a pleasing symmetry here: modern poker machines, and indeed the internet in general, model the customer as a metaphorical poker machine whose arm they pull to get a reward, and the reward they get is a customer addicted to pulling the arms of their literal poker machine. It’s a two-way battle of algorithms, but one side does not update its learning algorithms based on the latest research, and does not have nearly the same data set.
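To make the metaphor concrete, here is a minimal sketch of a bandit algorithm treating a simulated audience as the poker machine. It uses Thompson sampling with a Beta-Bernoulli model, which is one standard bandit method and not necessarily what any real platform runs. The function name `thompson_sampling`, the click rates, and the simulated users are all hypothetical, chosen for illustration only.

```python
import random


def thompson_sampling(true_click_rates, n_rounds, seed=0):
    """Beta-Bernoulli Thompson sampling over a set of content variants.

    `true_click_rates` are the hidden per-variant click probabilities of a
    simulated audience (an assumption for this sketch; a real system never
    observes them directly).
    """
    rng = random.Random(seed)
    n_arms = len(true_click_rates)
    # Beta(1, 1) prior on each arm's click probability.
    clicks = [1] * n_arms
    skips = [1] * n_arms
    pulls = [0] * n_arms
    total_clicks = 0
    for _ in range(n_rounds):
        # Sample a plausible click rate for each arm from its posterior...
        samples = [rng.betavariate(clicks[a], skips[a]) for a in range(n_arms)]
        # ...and show the variant whose sampled rate is highest.
        arm = max(range(n_arms), key=samples.__getitem__)
        # Simulated user: clicks with the arm's hidden probability.
        clicked = rng.random() < true_click_rates[arm]
        pulls[arm] += 1
        if clicked:
            clicks[arm] += 1
            total_clicks += 1
        else:
            skips[arm] += 1
    return pulls, total_clicks


if __name__ == "__main__":
    # Three hypothetical headlines with made-up click rates.
    pulls, total = thompson_sampling([0.02, 0.05, 0.12], n_rounds=5000)
    print("times each headline was shown:", pulls)
    print("total clicks harvested:", total)
```

The learner starts out showing every variant and gradually concentrates on whichever one the simulated audience clicks most. The asymmetry noted above is visible in the code: only the side doing the sampling keeps a posterior and updates it.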

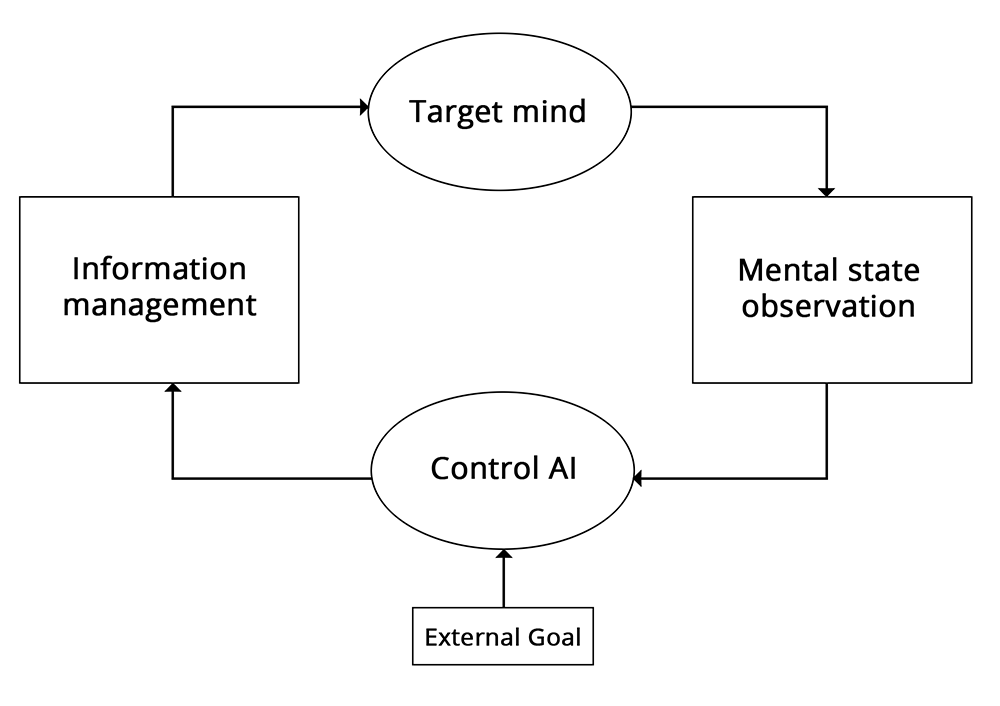

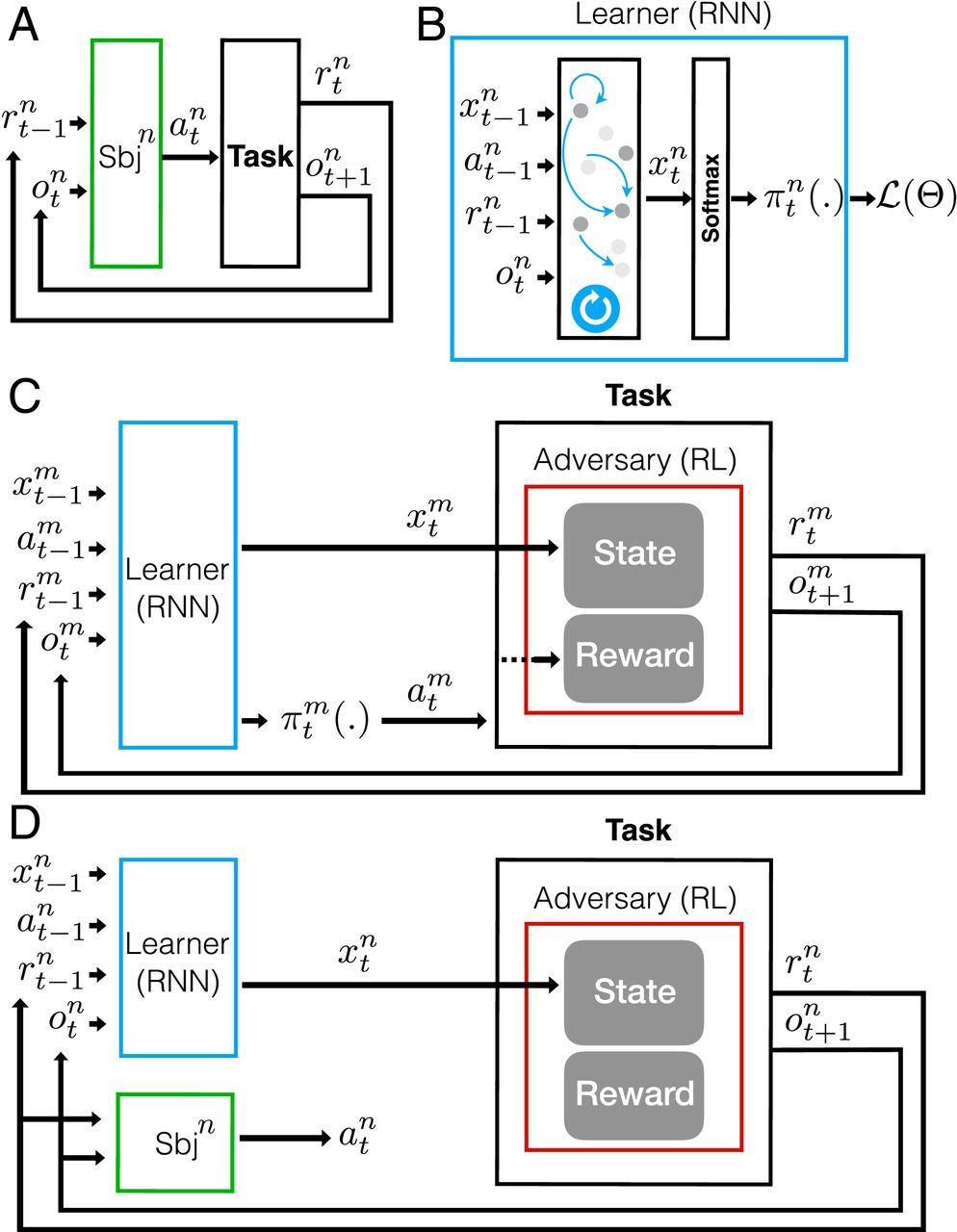

See Dezfouli, Nock, and Dayan (2020) (leveraging, I think, Dezfouli, Ashtiani, et al. (2019)), wherein the diagram above is explained.

3 Predictive coding hacks

Does predictive coding give us any insight into this? The account in Addiction by Design suggests that controlling the unpredictability a person faces is a great way to influence what they learn to do. TBC.

4 Recommender systems

Recommender systems are these days regarded as a key ingredient in weaponized social media; for example, YouTube is experimenting with ways to make its algorithm even more addictive.

China moved toward a clamp down on recommendation algorithms.

What’s new: China’s internet regulatory agency proposed rules that include banning algorithms that spread disinformation, threaten national security, or encourage addictive behaviour.

What it says: The plan by the Cyberspace Administration of China (CAC) broadly calls for recommendation engines to uphold China’s social order and “promote socialist core values”. The public has until September 26, 2021, to offer feedback. It’s not clear when the rules would take effect. Under the plan:

- Recommendation algorithms would not be allowed to encourage binges or exploit users’ behaviour by, say, raising prices of goods they buy often.

- Content platforms would be required to tell users about their algorithms’ operating principles and audit them regularly to make sure they comply with CAC regulations. They would also have to allow users to disable automated recommendations easily.

- Algorithms that make false user accounts, generate disinformation, or violate an individual’s rights would be banned.

- Platforms would have to obtain approval before deploying recommendation algorithms capable of swaying public sentiment.

Behind the news: China is not alone in its national effort to rein in the influence of AI.

- The European Union released draft regulations that would ban or tightly restrict social scoring systems, real-time face recognition, and algorithms engineered to manipulate behaviour.

- The Algorithmic Accountability Act, which is stalled in the U.S. Congress, would require companies to perform risk assessments before deploying systems that could spread disinformation or perpetuate social biases.

Why it matters: Recommendation algorithms can enable social media addiction, spread disinformation, and amplify extreme views.

We’re thinking: There’s a delicate balance between protecting the rights of consumers and limiting the freedoms of content providers who rely on platforms to get their message out. The AI community can help with the challenge of formulating thoughtful regulations.

5 Social addiction

See media virality for analysis of memetic contagion online.

6 Incoming

Compulsion loop reviews some interesting links and articles

Baliki et al. (2013)

Adam Mastroianni, Against All Applications

That’s not even the worst part. The worst part is the subtle, craven way applications irradiate us, mutating our relationships into connections, our passions into activities, and our lives into our track record. Instagram invites you to stand amidst beloved friends in a beautiful place and ask yourself, if we took a selfie right now would it get lots of likes? Applications do the same for your whole life: would this look good on my resume? If you reward people for lives that look good on paper, you’ll get lots of good-looking paper and lots of miserable lives.