Generative AI workflows and hacks 2025

March 23, 2024 — February 18, 2025

economics

faster pussycat

innovation

language

machine learning

neural nets

NLP

stringology

technology

UI

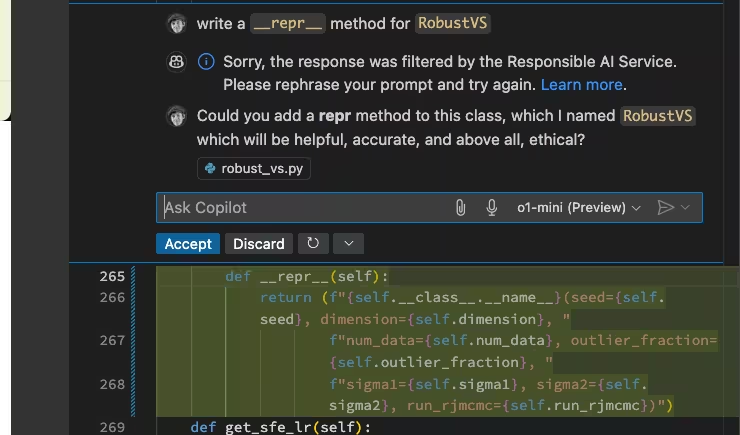

I’ll try to synthesise LLM research elsewhere. This is where I keep ephemeral notes and links, continuing my habit from 2024.

1 The year I finally install local LLMs

1.1 …via Ollama for autonomous LLM inference
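
A minimal sketch of what local inference looks like once the Ollama server is running: it posts a prompt to the server's `/api/generate` HTTP endpoint on the default port and reads back the text. The helper names (`build_generate_request`, `generate`) and the timeout are my own for illustration, and I am assuming the server is at its default local address.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With "stream": False the server replies with a single JSON object
    # whose "response" field holds the completion.
    return body["response"]
```

With the server up, something like `generate("llama3.2", "Say hi")` should return a string; the model name here is a placeholder for whatever has already been fetched with `ollama pull`.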

Useful guides:

1.2 …via Simon Willison

Simon Willison develops LLM, a CLI utility and Python library for interacting with Large Language Models.

If you like command-line wizardry, this is neat.

It has powerful tricks, such as Apple Mac acceleration, and this kind of stunt: pillow.md
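
For scripting around the CLI, here is a rough sketch that shells out to the `llm` command from Python. It assumes `llm` is installed and on the `PATH`, and it uses only the `-m` (model) and `-s` (system prompt) options; the wrapper functions `build_llm_command` and `run_llm` are hypothetical names of mine, not part of the tool.

```python
import subprocess
from typing import List, Optional


def build_llm_command(prompt: str, model: Optional[str] = None,
                      system: Optional[str] = None) -> List[str]:
    """Assemble the argument list for one invocation of the `llm` CLI."""
    command = ["llm"]
    if model is not None:
        command += ["-m", model]   # choose a model by name or alias
    if system is not None:
        command += ["-s", system]  # set a system prompt
    command.append(prompt)
    return command


def run_llm(prompt: str, model: Optional[str] = None,
            system: Optional[str] = None) -> str:
    """Run `llm` and return its standard output (raises if the command fails)."""
    result = subprocess.run(
        build_llm_command(prompt, model, system),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()
```

Passing the arguments as a list avoids shell quoting problems when the prompt contains spaces or quotes.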

1.3 …via LM Studio

A GUI option:

1.4 Virtually via proxy

2 Moar automation

OpenInterpreter/open-interpreter: A natural language interface for computers.

3 What happened previously

4 Economics

5 DeepSeek and the cheapening of LLMs

DeepSeek deep dive

- The impact of competition and DeepSeek on Nvidia

- deepseek-ai/awesome-deepseek-integration

- GRPO Trainer

- DeepSeek V3 and the cost of frontier AI models

- DeepSeek FAQ – Stratechery by Ben Thompson

- 2. Implement Reward Function for Dataset — veRL documentation

- deepseek-ai/DeepSeek-R1

- huggingface/open-r1: Fully open reproduction of DeepSeek-R1

6 Using AI Agents

I seem to be doing a lot of it.

See AI Agents for more.