Uncertainty quantification

December 26, 2016 — June 6, 2024


Using machine learning to make predictions, together with a measure of confidence in those predictions.

Closely related, perhaps identical: sensitivity analysis.

1 Taxonomy

Should clarify. TBD. A recent reference on the theme is Kendall and Gal (2017), which disentangles aleatoric and epistemic uncertainty. Model uncertainty should also get a mention.
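One common way to make the aleatoric/epistemic split concrete is the law of total variance applied to an ensemble (or a set of posterior samples): the average predicted noise variance is read as aleatoric, and the spread of the predicted means as epistemic. The sketch below assumes each ensemble member reports a mean and a noise variance for the same input. The function name and the numbers are made up for illustration, and this is not claimed to be the exact estimator used in the reference above.

```python
from statistics import fmean, pvariance

def decompose_uncertainty(means, variances):
    """Split predictive variance at one input into two parts.

    means[i], variances[i]: predictive mean and noise variance from
    ensemble member (or posterior sample) i. Hypothetical inputs.
    """
    aleatoric = fmean(variances)  # average noise the members predict
    epistemic = pvariance(means)  # disagreement between the members
    return aleatoric, epistemic, aleatoric + epistemic

# Three hypothetical ensemble members predicting at the same input.
aleatoric, epistemic, total = decompose_uncertainty(
    means=[1.0, 1.2, 0.8], variances=[0.30, 0.25, 0.35]
)
```

More data should shrink the epistemic term as the members come to agree, while the aleatoric term stays put.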

2 DUQ networks

3 Bayes

Bayesian methods have a notion of uncertainty built in. You can get some way with, e.g., Gaussian process regression or probabilistic neural networks.
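To see what "baked in" means, here is a minimal sketch of conjugate Bayesian linear regression with a single slope parameter, which is equivalent to Gaussian process regression with a linear kernel. It assumes a zero-mean Gaussian prior on the slope and a known noise variance; the function names, the prior, and the data are all illustrative choices, not anything from a particular library.

```python
import math

def posterior_slope(xs, ys, noise_var=0.25, prior_var=10.0):
    """Posterior N(mean, var) over the slope w in y = w * x + noise.

    Assumes a N(0, prior_var) prior on w and known Gaussian noise
    variance; both default values are illustrative.
    """
    precision = 1.0 / prior_var + sum(x * x for x in xs) / noise_var
    mean = sum(x * y for x, y in zip(xs, ys)) / noise_var / precision
    return mean, 1.0 / precision

def predict(x_new, w_mean, w_var, noise_var=0.25):
    """Predictive mean and standard deviation at x_new."""
    var = x_new * x_new * w_var + noise_var  # parameter + noise variance
    return x_new * w_mean, math.sqrt(var)

# Hypothetical training data.
xs = [0.5, 1.0, 1.5, 2.0]
ys = [1.1, 1.9, 3.2, 3.9]
w_mean, w_var = posterior_slope(xs, ys)
near = predict(1.0, w_mean, w_var)   # inside the training range
far = predict(10.0, w_mean, w_var)   # far outside it
```

The predictive standard deviation grows as `x_new` moves away from the data, with no extra machinery: it falls straight out of the posterior.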

4 Physical model calibration

PEST, PEST++, and pyemu are integrated systems for uncertainty quantification that use some weird terminology, such as FOSM (first-order second-moment) models. I think these are best considered as inverse problem solvers, with the uncertainty quantification arising as a side effect of the inversion.
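As far as I can tell, the generic idea behind the FOSM name is linearised error propagation: take the first-order Taylor expansion of the model about the input mean and push the second moments of the inputs through it. A minimal sketch, assuming independent inputs and a finite-difference gradient, follows. The `fosm` function and the toy `model` are hypothetical, and none of this is the PEST or pyemu API.

```python
def fosm(f, mean, variances, eps=1e-6):
    """First-order second-moment estimate of the mean and variance of f(x).

    Assumes independent inputs (diagonal covariance). The gradient is
    taken by central finite differences at the input mean.
    """
    grad = []
    for i in range(len(mean)):
        hi = list(mean)
        lo = list(mean)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2 * eps))
    # Linearised variance: sum of squared sensitivities times input variances.
    out_var = sum(g * g * v for g, v in zip(grad, variances))
    return f(mean), out_var

# Hypothetical forward model with two uncertain parameters.
def model(p):
    return p[0] * p[1] + 3.0 * p[0]

out_mean, out_var = fosm(model, mean=[2.0, 1.0], variances=[0.04, 0.09])
```

The approximation is only as good as the linearisation, so strongly nonlinear models or large input variances will break it.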

5 Conformal prediction

See conformal prediction.
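For a taste, here is a rough sketch of split conformal prediction for regression: hold out a calibration set, collect absolute residuals of an already-fitted model on it, and use a finite-sample-corrected quantile of those residuals as the interval half-width. The function name and the residual values are invented for illustration, and the coverage guarantee relies on the calibration and test points being exchangeable.

```python
import math

def split_conformal_radius(residuals, alpha=0.1):
    """Half-width of a (1 - alpha) prediction interval.

    residuals: absolute errors |y - prediction| on a held-out
    calibration set that was not used to fit the model.
    """
    n = len(residuals)
    k = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(residuals)[k - 1]

# Hypothetical calibration residuals from some already-fitted regressor.
calibration_residuals = [0.1, 0.4, 0.2, 0.9, 0.3, 0.5, 0.7, 0.6, 0.8]
radius = split_conformal_radius(calibration_residuals, alpha=0.25)
prediction = 3.0  # hypothetical point prediction for a new input
interval = (prediction - radius, prediction + radius)
```

Note that the interval has the same width everywhere; adaptive variants change the score function rather than this basic recipe.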

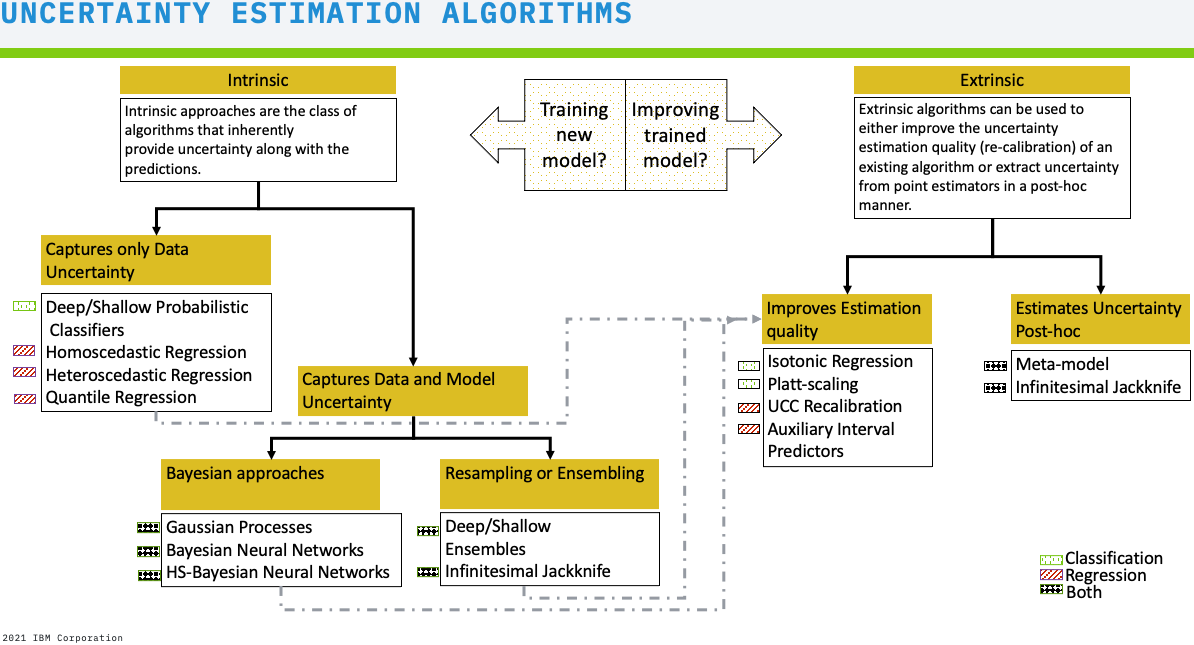

6 Uncertainty Quantification 360

IBM’s Uncertainty Quantification 360 toolkit provides a summary of popular generic methods:

- Auxiliary Interval Predictor: Use an auxiliary model to improve the calibration of UQ generated by the original model.

- Blackbox Metamodel Classification: Extract confidence scores from trained black-box classification models using a meta-model.

- Blackbox Metamodel Regression: Extract prediction intervals from trained black-box regression models using a meta-model.

- Classification Calibration: Post-hoc calibration of classification models using Isotonic Regression and Platt Scaling.

- Heteroscedastic Regression

- Homoscedastic Gaussian Process Regression

- Horseshoe BNN classification

- Horseshoe BNN regression

- Infinitesimal Jackknife

- Quantile Regression

- UCC Recalibration
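To give one of the list entries some substance: quantile regression produces intervals by minimising the pinball (quantile) loss at a lower and an upper level. The sketch below is not the UQ360 API; it is a stdlib-only illustration in which a constant prediction stands in for a model, and the data and candidate grid are made up.

```python
def pinball_loss(y_true, y_pred, q):
    """Average pinball (quantile) loss of predictions at level q."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        # Under-prediction costs q per unit, over-prediction costs (1 - q).
        total += q * diff if diff >= 0 else (q - 1) * diff
    return total / len(y_true)

# Hypothetical targets; a constant prediction stands in for a model.
ys = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
candidates = [c / 2 for c in range(0, 25)]  # 0.0, 0.5, ..., 12.0

def best_constant(q):
    """Grid-search the constant that minimises the pinball loss at level q."""
    return min(candidates, key=lambda c: pinball_loss(ys, [c] * len(ys), q))

lower = best_constant(0.1)
upper = best_constant(0.9)
```

The pair `(lower, upper)` lands near the empirical 10% and 90% quantiles of `ys`, which is the sense in which minimising this loss yields an approximate 80% interval.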

They provide guidance on method selection in the manual: