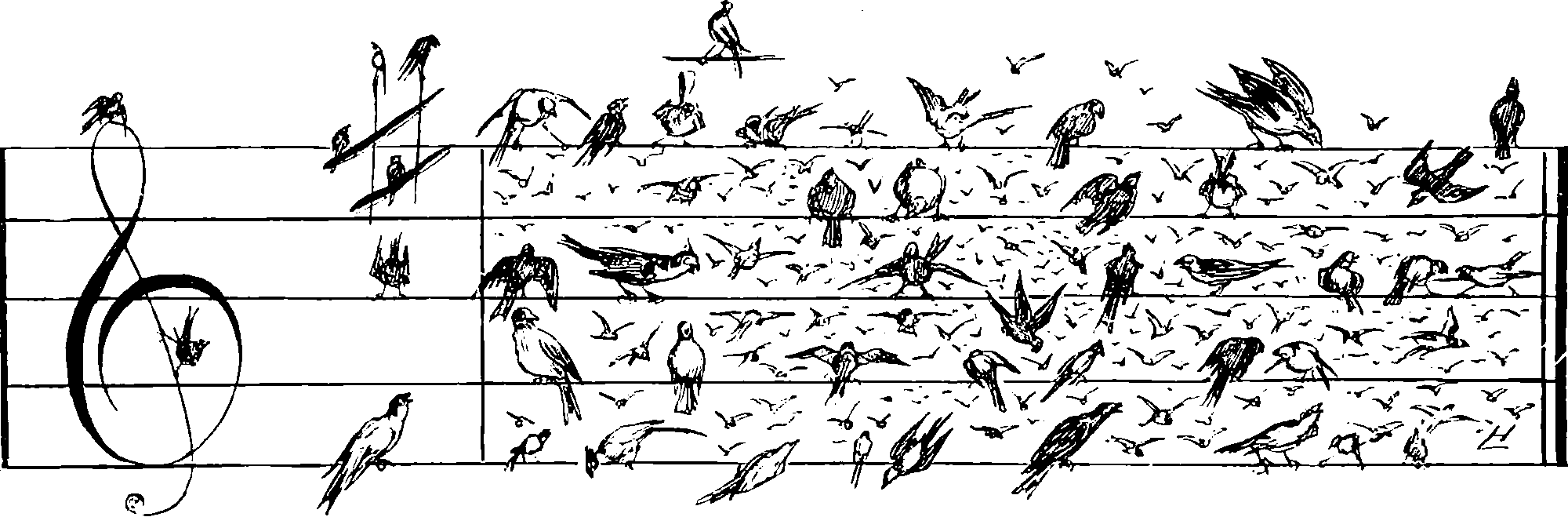

Audio source separation

November 4, 2019 — November 26, 2019


Decomposing audio into discrete sources, especially commercial tracks into stems. This is in large part a problem of data acquisition since artists do not usually release unmixed versions of their tracks.

The taxonomy here comes from Jordi Pons’ tutorial Waveform-based music processing with deep learning.

1 Neural approaches

In the time domain, Facebook’s Demucs gets startlingly good performance on MusDB. Startlingly good in that, if I have understood correctly, they train only on MusDB, which is a small data set compared to what the big players such as Spotify have access to, so they must have done well with good priors.

In the spectral domain, Deezer has released Spleeter (Hennequin et al. 2019):

Spleeter is the Deezer source separation library with pretrained models written in Python and uses Tensorflow. It makes it easy to train source separation models (assuming you have a dataset of isolated sources), and provides already trained state-of-the-art models for performing various flavours of separation:

- Vocals (singing voice) / accompaniment separation (2 stems)

- Vocals / drums / bass / other separation (4 stems)

- Vocals / drums / bass / piano / other separation (5 stems)

They are competing with Open-Unmix (Stöter et al. 2019), which also looks neat and has a live demo.

Wave-U-Net architectures seem popular if one wants to DIY.

GANs seem natural here, or better still, a probabilistic method, although most existing methods are supervised.

1.1 Non-negative matrix factorisation approaches

Factorise the spectrogram! Authors such as T. Virtanen (2007), Bertin, Badeau, and Vincent (2010), Vincent, Bertin, and Badeau (2008), Févotte, Bertin, and Durrieu (2008) and Smaragdis (2004) popularised using non-negative matrix factorisations to identify the “activations” of power spectrograms for music analysis. It didn’t take long for this to be used in resynthesis tasks, by e.g. Aarabi and Peeters (2018), Buch, Quinton, and Sturm (2017) (source, site), Driedger and Prätzlich (2015) (site), and Hoffman, Blei, and Cook (2010). Of course, since the factorisation only sees magnitudes, these methods leave you with a phase retrieval problem. They work really well in resynthesis, where you do not care so much about audio bleed.
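To make “factorise the spectrogram” concrete, here is a minimal sketch of NMF with multiplicative updates for the generalised Kullback-Leibler divergence, run on a toy matrix standing in for a magnitude spectrogram. The function names `nmf_kl` and `soft_masks`, the toy data, and the choice of divergence are my assumptions for illustration; none of the cited papers is being reproduced here, and a real pipeline would still need an STFT in front and a phase estimate behind.

```python
import numpy as np


def nmf_kl(V, n_components, n_iter=200, seed=0, eps=1e-10):
    """Approximate non-negative V (freq x time) as W @ H.

    Uses multiplicative updates for the generalised KL divergence.
    W holds spectral templates, H holds their activations over time.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_time = V.shape
    W = rng.random((n_freq, n_components)) + eps
    H = rng.random((n_components, n_time)) + eps
    for _ in range(n_iter):
        WH = W @ H + eps
        # Update activations; the denominator is the column sums of W.
        H *= (W.T @ (V / WH)) / (W.sum(axis=0)[:, None] + eps)
        WH = W @ H + eps
        # Update templates; the denominator is the row sums of H.
        W *= ((V / WH) @ H.T) / (H.sum(axis=1)[None, :] + eps)
    return W, H


def soft_masks(W, H, eps=1e-10):
    """Wiener-like soft masks, one per component, each of shape freq x time."""
    parts = np.stack([np.outer(W[:, k], H[k]) for k in range(W.shape[1])])
    return parts / (parts.sum(axis=0) + eps)


# Toy "spectrogram": two spectral templates that are never active together.
templates = np.array([[1.0, 0.0], [0.5, 0.0], [0.0, 1.0], [0.0, 0.8]])
activations = np.array([[1, 1, 0, 0, 1, 0], [0, 0, 1, 1, 0, 1]], dtype=float)
V = templates @ activations

W, H = nmf_kl(V, n_components=2)
masks = soft_masks(W, H)
rel_err = np.linalg.norm(W @ H - V) / np.linalg.norm(V)
```

Multiplying each mask by the complex mixture STFT and inverting is the usual cheap way to dodge explicit phase retrieval, at the cost of the bleed mentioned above.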

UNMIXER (Smith, Kawasaki, and Goto 2019) is a nifty online interface to loop decomposition in this framework.

1.2 Harmonic-percussive source separation

Harmonic-percussive separation needs explanation. 🚧TODO🚧 clarify (Hideyuki Tachibana, Ono, and Sagayama 2014; Driedger, Müller, and Ewert 2014; FitzGerald et al. 2013; Lakatos 2000; N. Ono et al. 2008; Fitzgerald 2010; H. Tachibana et al. 2012; Driedger, Müller, and Disch 2014; Nobutaka Ono et al. 2008; Schlüter and Böck 2014; Laroche et al. 2017; Elowsson and Friberg 2017; Driedger and Müller 2016)
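As a placeholder for that explanation, here is a rough sketch of the median-filtering idea as I understand it from Fitzgerald (2010): harmonic content looks like horizontal ridges in a magnitude spectrogram and percussive content like vertical ones, so a median filter along time keeps the former and a median filter along frequency keeps the latter. The helper names, the filter length and the mask exponent are my assumptions, and the toy input is a hand-built matrix rather than a real STFT.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter_1d(S, size, axis):
    """Running median of odd length `size` along `axis`, with edge padding."""
    pad = [(0, 0)] * S.ndim
    pad[axis] = (size // 2, size // 2)
    padded = np.pad(S, pad, mode="edge")
    # The window dimension is appended as the last axis.
    windows = sliding_window_view(padded, size, axis=axis)
    return np.median(windows, axis=-1)


def hpss_masks(S, size=5, power=2.0, eps=1e-10):
    """Soft harmonic and percussive masks for a spectrogram S (freq x time)."""
    harm = median_filter_1d(S, size, axis=1)  # smooth along time
    perc = median_filter_1d(S, size, axis=0)  # smooth along frequency
    denom = harm**power + perc**power + eps
    return harm**power / denom, perc**power / denom


# Toy spectrogram: one sustained tone (row 4) and one click (column 8).
S = np.zeros((16, 16))
S[4, :] = 1.0
S[:, 8] = 1.0

mask_h, mask_p = hpss_masks(S)
harmonic_part = mask_h * S
percussive_part = mask_p * S
```

On the toy input the tone lands in `harmonic_part`, the click in `percussive_part`, and the single bin where they cross is split evenly between the two.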

2 Noise+sinusoids

A first step might be to find some model which can approximately capture the cyclic and disordered components of the signal. Indeed Metamorph and smstools, based on a “sinusoids+noise” model, do this kind of decomposition, but they mostly use it for resynthesis in limited ways, not for simulating realisations from the inferred model of an underlying stochastic process. There is an implementation in Csound called ATS which looks interesting?
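For intuition only, here is a crude single-frame sketch of a sinusoids+noise split: keep the strongest FFT bins as the “sinusoidal” part and call whatever is left the residual. This is my own toy, not how Metamorph, smstools or ATS work (as far as I know they pick and track peaks frame by frame and then model the residual stochastically), and the function name, the test signal and the bin-aligned frequencies are all assumptions chosen to keep the example clean.

```python
import numpy as np


def sines_plus_residual(x, n_peaks):
    """Split x into its n_peaks strongest FFT bins and the remainder."""
    X = np.fft.rfft(x)
    keep = np.argsort(np.abs(X))[-n_peaks:]  # indices of the largest bins
    X_sin = np.zeros_like(X)
    X_sin[keep] = X[keep]
    sines = np.fft.irfft(X_sin, n=len(x))
    return sines, x - sines


rng = np.random.default_rng(0)
sr, n = 8000, 2048
t = np.arange(n) / sr
# Frequencies that fall exactly on FFT bins, so there is no spectral leakage.
f1, f2 = 100 * sr / n, 250 * sr / n
clean = np.sin(2 * np.pi * f1 * t) + 0.5 * np.sin(2 * np.pi * f2 * t)
x = clean + 0.05 * rng.standard_normal(n)

sines, residual = sines_plus_residual(x, n_peaks=2)
rms_error = np.sqrt(np.mean((sines - clean) ** 2))
```

The interesting modelling question raised above starts where this stops: the residual here is just a leftover waveform, not a fitted stochastic process one could sample from.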

Some non-parametric conditional wavelet density sounds more fun to me, maybe as a Markov random field, although what exact generative model I would fit is still opaque to me. The sequence probably possesses structure at multiple scales, and there is evidence that music might have a recursive grammatical structure which would be hard to learn even if we had a perfect decomposition.

3 Incoming

Live examples: