Probability divergences

Metrics, contrasts and divergences and other ways of quantifying how similar are two randomnesses

November 25, 2014 — January 5, 2023


Quantifying the difference between probability measures. Measuring the distribution itself, for, e.g. badness of approximation of a statistical fit. The theory of binary experiments. You probably care about these because you want to work with empirical observations of data drawn from a given distribution, to test for independence or do hypothesis testing or model selection, or density estimation, or to model convergence for some random variable, or probability inequalities, or to model the distinguishability of the distributions from some process and a generative model of it, as seen in generative adversarial learning. That kind of thing. Frequently the distance here is between a measure and an empirical estimate thereof, but this is no requirement. Distances between two empirical distributions and between two analytic distributions are all useful in practice.

This notebook is elderly now and I wish I’d written it differently. In particular, I wish I had started from the idea that we care about discrepancies between random variates and also differences between the distributions of random variates and that these are slightly different objects. Maybe I will get a chance to come back and explain that at some point.

I should discuss these objects in terms of which ones are conveniently estimated from empirical samples of a function, which ends up being important in my daily life.

A good choice of probability metric might give us a convenient distribution of a test statistic, an efficient loss function to target, simple convergence behaviour for some class of estimator, or simply a warm fuzzy glow.

Terminology note: “Distance” and “metric” both often imply symmetric functions obeying the triangle inequality, but on this page our church is broad, and includes pre-metrics, metric-like functions which still “go to zero when two things get similar”, without including the other axioms of distances. These are also called divergences. This is still useful for the aforementioned convergence results. I’ll use “true metric” or “true distance” to make it clear when needed. “Contrast” is probably better here, but is less common.

🏗 talk about triangle inequalities.

🏗 talk about Portmanteau theorems.

1 Overview

tl;dr Don’t read my summary, read the summaries I summarize. One interesting one, although it pre-dated the renewed mania for Wasserstein metrics, is the Reid and Williamson epic, (Reid and Williamson 2011), which, in the quiet solitude of my own skull, I refer to as One regret to rule them all and in divergence bound them.

There is also a useful omnibus of classic relations in Gibbs and Su:

Relationships among probability metrics. A directed arrow from A to B annotated by a function \(h(x)\) means that \(d_A \leq h(d_B)\). The symbol diam Ω denotes the diameter of the probability space Ω; bounds involving it are only useful if \(\Omega\) is bounded. For Ω finite, \(d_{\text{min}} = \inf_{x,y\in\Omega} d(x,y).\) The probability metrics take arguments μ,ν; “ν dom μ” indicates that the given bound only holds if ν dominates μ. […]

Yuling Yao gives us an intuition by considering point mass approximations

- The mean of posterior density minimizes the L2 risk. The mode of the posterior density minimizes the KL divergence to it. … Put it in another way, the MAP is always the spiky variational inference approximation to the exact posterior density.

- …The posterior median minimizes the Wasserstein metric for order 1 and the posterior mean minimizes the Wasserstein metric for order 2.
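The Wasserstein part of that claim is easy to check numerically, because the only coupling between a distribution and a point mass at \(c\) is the trivial one, so \(W_1 = E|X-c|\) and \(W_2^2 = E(X-c)^2\). A minimal sketch, assuming a made-up skewed sample as a stand-in for posterior draws (the gamma distribution and the grid search are my choices for illustration, not Yao's):

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in "posterior" draws from a skewed distribution (an assumption for illustration).
samples = rng.gamma(shape=2.0, scale=1.0, size=2001)

def w1_to_point(c, x):
    """W_1 between the empirical law of x and a point mass at c, i.e. E|X - c|."""
    return np.mean(np.abs(x - c))

def w2_to_point(c, x):
    """W_2 between the empirical law of x and a point mass at c, i.e. sqrt(E(X - c)^2)."""
    return np.sqrt(np.mean((x - c) ** 2))

# Brute-force search over candidate point-mass locations.
grid = np.linspace(samples.min(), samples.max(), 4001)
best_w1 = grid[np.argmin([w1_to_point(c, samples) for c in grid])]
best_w2 = grid[np.argmin([w2_to_point(c, samples) for c in grid])]

print("W1 minimiser:", best_w1, "median:", np.median(samples))
print("W2 minimiser:", best_w2, "mean:  ", np.mean(samples))
```

Up to the grid resolution, the two minimisers should land on the sample median and the sample mean respectively.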

2 Norms of density with respect to Lebesgue measure on the state space

Well now, this is a fancy name that I use to keep things straight in my head. Probably the most familiar type of metric to many, as it’s a vanilla functional norm-induced metric applied to probability densities on the state space of the random variable.

The “usual” norms can be applied to densities. Most famously, the \(L_p\) norms, which I will call \(L_k\) norms because I am already using \(p\) for a density.

When written like this, the norm is taken between density functions, i.e. Radon-Nikodym derivatives, not distributions. (Although see the Kolmogorov metric for an application of the \(k=\infty\) norm to cumulative distribution functions.)

A little more generally, consider some RV \(X\sim P\) taking values on \(\mathbb{R}\), where \(P\) is absolutely continuous with respect to the Lebesgue measure \(\lambda\), so that it has a Radon-Nikodym derivative (a.k.a. density) \(p=dP/d\lambda\); likewise \(q=dQ/d\lambda\) for \(Q\).

\[\begin{aligned} L_k(P,Q)&:= \left\|\frac{dP-dQ}{d\lambda}\right\|_k\\ &=\left[\int \left|\frac{dP-dQ}{d\lambda}\right|^k d\lambda\right]^{1/k}\\ &=\left[\int \left|p(x)-q(x)\right|^k dx\right]^{1/k} \end{aligned}\]
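To make the definition concrete, here is a minimal sketch that approximates the \(L_k\) distance between two densities with a Riemann sum on a uniform grid. The two Gaussians, the grid limits and the helper names are assumptions chosen for illustration.

```python
import numpy as np

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))

def lk_distance(p_vals, q_vals, dx, k):
    """Riemann-sum approximation of (int |p - q|^k dx)^(1/k) on a uniform grid."""
    return (np.sum(np.abs(p_vals - q_vals) ** k) * dx) ** (1.0 / k)

x = np.linspace(-12.0, 12.0, 24001)
dx = x[1] - x[0]
p = normal_pdf(x, 0.0, 1.0)
q = normal_pdf(x, 1.0, 1.0)

l1 = lk_distance(p, q, dx, 1)
l2 = lk_distance(p, q, dx, 2)
print("L1:", l1, "L2:", l2)
```

For two unit-variance Gaussians one unit apart, the squared \(L_2\) distance has the closed form \((1-e^{-1/4})/\sqrt{\pi}\), which gives a quick sanity check on the quadrature.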

\(L_2\) norms are classics for kernel density estimates, because they allow you to use lots of tasty machinery of spectral function approximation.

\(L_k, k\geq 1\) norms do observe the triangle inequality, and \(L_2\) norms have lots of additional features, such as Wiener filtering formulations, and Parseval’s identity, and you get a convenient Hilbert space for free.

There are the standard facts about \(L_k,\,k\geq 1\) spaces (i.e. expectation of arbitrary measurable functions), e.g. domination

\[1\leq k<j \Rightarrow \|f\|_k\leq\|f\|_j\]

Hölder’s inequality for probabilities

\[1/k + 1/j \leq 1 \Rightarrow \|fg\|_1\leq \|f\|_k\|g\|_j\]

and the Minkowski (i.e. triangle) inequality

\[\|x+y\|_k \leq \|x\|_k+\|y\|_k\]

However, it’s an awkward choice for a distance on a probability space, the \(L_k\) space on densities.

If you transform the random variable by anything other than a linear transform, then your distances transform in an arbitrary way. And we haven’t exploited the non-negativity of probability densities so it might feel as if we are wasting some information — If our estimated density \(q(x)<0,\;\forall x\in A\) for some non-empty interval \(A\) then we know it’s plain wrong, since probability is never negative.

Also, such norms are not necessarily convenient. Exercise: Given \(N\) i.i.d samples drawn from \(X\sim P= \text{Norm}(\mu,\sigma)\), find a closed form expression for estimates \((\hat{\mu}_N, \hat{\sigma}_N)\) such that the distance \(E_P\|(p-\hat{p})\|_2\) is minimised.

Doing this directly is hard, but doing it indirectly can work: if we directly minimise a different distance, such as the KL divergence, we can squeeze the \(L_2\) distance. 🏗 come back to this point.

Finally, these feel like setting up an inappropriate problem to solve statistically, since an error is penalised equally everywhere in the state-space; Why are errors penalised just as much for where \(p\simeq 0\) as for \(p\gg 0\)? Surely there are cases where we care more, or less, about such areas? That leads to, for example…

3 Relative distributions

Why characterise a difference in distributions by a summary statistic? Just have an object which is a relative distribution.

4 \(\phi\)-divergences

Why not call \(P\) close to \(Q\) if closeness depends on the probability weighting of that place? Specifically, some divergence \(R\) like this, using scalar function \(\psi\) and pointwise loss \(\ell\)

\[R(P,Q):=\psi(E_Q(\ell(p(x), q(x))))\]

If we are going to measure divergence here, we also want the properties that \(R(P,Q)\geq 0\) and \(P=Q\Rightarrow R(P,Q)=0\), so that \(R(P,Q)> 0 \Rightarrow P\neq Q\). We can get this if we choose some increasing \(\psi\) with \(\psi(0)=0\) and \(\ell(s,t)\) such that

\[ \begin{aligned} \begin{array}{rl} \ell(s,t) \geq 0 &\text{ for } s\neq t\\ \ell(s,t)=0 &\text{ for } s=t\\ \end{array} \end{aligned} \]

Let \(\psi\) be the identity function for now, and concentrate on the fiddly bit, \(\ell\). We try a form of function that exploits the non-negativity of densities and penalises the derivative of one distribution with respect to the other (resp. the ratio of densities):

\[\ell(s,t) := \phi(s/t)\]

If \(p(x)=q(x)\) then \(p(x)/q(x)=1\). So to get the right sort of penalty, we choose \(\phi\) to have a minimum where the argument is 1, with \(\phi(1)=0\) and \(\phi(t)\geq 0, \forall t\).

It turns out that it’s also wise to take \(\phi\) to be convex. (Exercise: why?) And, note that for these not to explode we now require that \(P\) be dominated by \(Q\), i.e. \(Q(A)=0\Rightarrow P(A)=0,\, \forall A \in\text{Borel}(\mathbb{R})\).

Putting this all together, we have a family of divergences

\[D_\phi(P,Q) := E_Q\phi\left(\frac{dP}{dQ}\right)\]

And BAM! These are the \(\phi\)-divergences. You get a different one for each choice of \(\phi\).

a.k.a. Csiszár-divergences, \(f\)-divergences or Ali-Silvey distances, after the people who noticed them. (I. Csiszár 1972; Ali and Silvey 1966)

These are in general mere pre-metrics. And note they are no longer in general symmetric. We should not necessarily expect

\[D_\phi(Q,P) = E_P\phi\left(\frac{dQ}{dP}\right)\]

to be equal to

\[D_\phi(P,Q) = E_Q\phi\left(\frac{dP}{dQ}\right)\]

Anyway, back to concreteness, and recall our well-behaved continuous random variables; we can write, in this case,

\[D_\phi(P,Q) = \int_\mathbb{R}\phi\left(\frac{p(x)}{q(x)}\right)q(x)dx\]
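For discrete distributions the integral becomes a sum, \(D_\phi(P,Q)=\sum_i q_i\,\phi(p_i/q_i)\), and the whole family fits in one function. A minimal sketch, with hypothetical helper names and an arbitrary pair of three-point distributions, assuming \(q_i>0\) everywhere so that \(Q\) dominates \(P\):

```python
import numpy as np

def phi_divergence(p, q, phi):
    """D_phi(P, Q) = sum_i q_i * phi(p_i / q_i) for discrete P, Q with q_i > 0."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    if np.any(q <= 0):
        raise ValueError("this sketch assumes q_i > 0 everywhere, so that Q dominates P")
    return float(np.sum(q * phi(p / q)))

def phi_kl(t):
    # t * ln(t), using the convention 0 * ln(0) = 0
    return np.where(t > 0, t * np.log(np.where(t > 0, t, 1.0)), 0.0)

def phi_tv(t):
    return np.abs(t - 1.0)

def phi_hellinger(t):
    return (np.sqrt(t) - 1.0) ** 2

def phi_chi2(t):
    return (t - 1.0) ** 2

p = np.array([0.5, 0.3, 0.2])
q = np.array([0.25, 0.25, 0.5])

for name, phi in [("KL", phi_kl), ("TV", phi_tv),
                  ("Hellinger^2", phi_hellinger), ("chi^2", phi_chi2)]:
    # Print both argument orders to see which choices of phi are symmetric.
    print(name, phi_divergence(p, q, phi), phi_divergence(q, p, phi))
```

Printing both argument orders shows which choices of \(\phi\) happen to give a symmetric divergence and which do not.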

Let’s explore some \(\phi\)s.

4.1 Kullback-Leibler divergence

We take \(\phi(t)=t \ln t\), and write the corresponding divergence, \(D_\text{KL}=\operatorname{KL}\),

\[\begin{aligned} \operatorname{KL}(P,Q) &= E_Q\phi\left(\frac{p(x)}{q(x)}\right) \\ &= \int_\mathbb{R}\phi\left(\frac{p(x)}{q(x)}\right)q(x)dx \\ &= \int_\mathbb{R}\left(\frac{p(x)}{q(x)}\right)\ln \left(\frac{p(x)}{q(x)}\right) q(x)dx \\ &= \int_\mathbb{R} \ln \left(\frac{p(x)}{q(x)}\right) p(x)dx \end{aligned}\]

Indeed, if \(P\) is absolutely continuous wrt \(Q\),

\[\operatorname{KL}(P,Q) = E_P\log \left(\frac{dP}{dQ}\right)\]

This is one of many possible derivations of the Kullback-Leibler divergence a.k.a. KL divergence, or relative entropy; It pops up because of, e.g., information-theoretic significance.

🏗 revisit in maximum likelihood and variational inference settings, where we have good algorithms exploiting its nice properties.

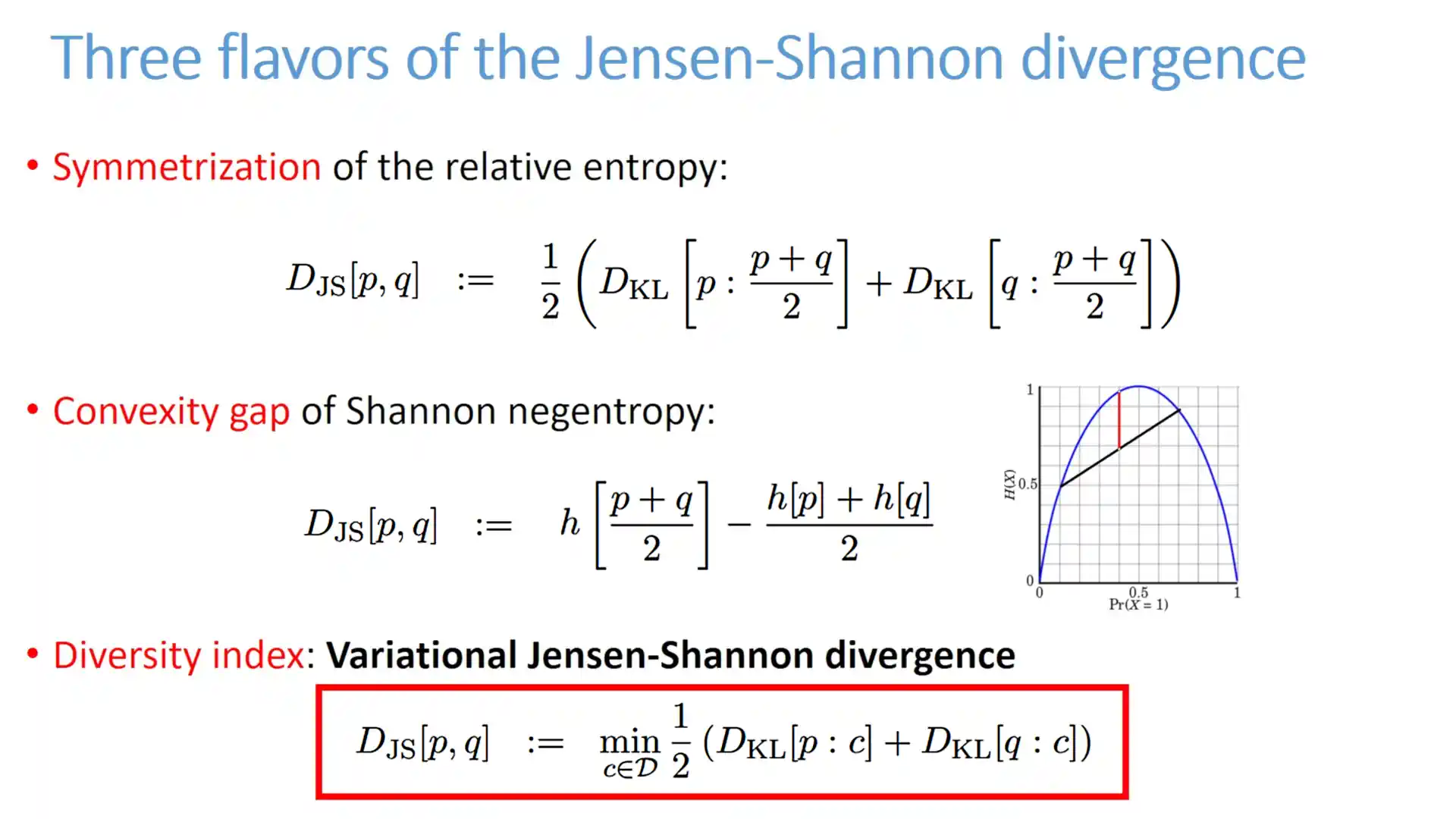

4.2 Jensen-Shannon divergence

A symmetrized, bounded version of the KL divergence, obtained by comparing both distributions to their equal-weight mixture.
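The standard definition is \(\operatorname{JS}(P,Q)=\tfrac{1}{2}\operatorname{KL}(P,M)+\tfrac{1}{2}\operatorname{KL}(Q,M)\) with \(M=\tfrac{1}{2}(P+Q)\). A minimal discrete sketch (the helper names are mine); because \(M\) dominates both \(P\) and \(Q\), it stays finite even when the supports are disjoint, where it hits its maximum of \(\log 2\):

```python
import numpy as np

def kl(p, q):
    """KL(P, Q) = sum_i p_i * log(p_i / q_i), with 0 * log(0) treated as 0."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def js(p, q):
    """Jensen-Shannon divergence: average KL of P and Q to their mixture M."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    m = 0.5 * (p + q)
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

p = np.array([0.5, 0.3, 0.2])
q = np.array([0.25, 0.25, 0.5])
print("JS(p, q):", js(p, q), "JS(q, p):", js(q, p))
print("disjoint supports:", js([1.0, 0.0], [0.0, 1.0]), "log 2:", np.log(2))
```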

4.3 Total variation distance

Take \(\phi(t)=|t-1|\). We write \(\delta(P,Q)\) for the divergence. I will use the set \(A:=\left\{x:\frac{dP}{dQ}\geq 1\right\}=\{x:dP\geq dQ\}.\)

\[\begin{aligned} \delta(P,Q) &= E_Q\left|\frac{dP}{dQ}-1\right| \\ &= \int_A \left(\frac{dP}{dQ}-1 \right)dQ - \int_{A^C} \left(\frac{dP}{dQ}-1 \right)dQ\\ &= \int_A \frac{dP}{dQ} dQ - \int_A 1 dQ - \int_{A^C} \frac{dP}{dQ}dQ + \int_{A^C} 1 dQ\\ &= \int_A dP - \int_A dQ - \int_{A^C} dP + \int_{A^C} dQ\\ &= P(A) - Q(A) - P(A^C) + Q(A^C)\\ &= 2[P(A) - Q(A)] \\ &= 2[Q(A^C) - P(A^C)] \\ \text{ i.e. } &= 2\left[P(\{dP\geq dQ\})-Q(\{dP\geq dQ\})\right] \end{aligned}\]

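Here I used the standard fact that for any probability measure \(P\) and \(P\)-measurable set \(A\), it holds that \(P(A)=1-P(A^C)\).

Equivalently, up to a factor of 2,

\[\tfrac{1}{2}\delta(P,Q) =\sup_{B \in \sigma(Q)} \left\{ |P(B) - Q(B)| \right\}\]

Many authors take this supremum, which lies in \([0,1]\), as the definition of total variation distance (equivalently \(\phi(t)=\tfrac{1}{2}|t-1|\)), so check the normalisation before combining bounds from different sources.

To see that \(A\) attains that supremum, note that for any set \(B\supseteq A,\, B:=A\cup Z\) for some \(Z\) disjoint from \(A\), it follows that \(|P(B) - Q(B)|\leq |P(A) - Q(A)|\) since, on \(Z,\, dP/dQ\leq 1\), by construction, and hence \(P(Z)\leq Q(Z)\). A similar argument handles \(B\subseteq A\), since \(dP/dQ\geq 1\) on the part removed.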

It should be clear that this is symmetric.

Supposedly, (Khosravifard, Fooladivanda, and Gulliver 2007) show that this is the only possible f-divergence which is also a true distance, but I can’t access that paper to see how.

🏗 Prove that for myself — Is the representation of divergences as “simple” divergences helpful? See (Reid and Williamson 2009) (credited to Österreicher and Wajda.)

Interestingly, as Djalil Chafaï points out, the supremum form has a coupling interpretation,

\[ \sup_{B}|P(B)-Q(B)| =\inf_{X \sim P,Y \sim Q}\mathbb{P}(X\neq Y) \]
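The different faces of total variation can be compared directly on a small discrete example. This is a sketch with an arbitrary pair of three-point distributions. Note the two normalisations in play: the \(\phi(t)=|t-1|\) form \(\sum_i|p_i-q_i|\) takes values in \([0,2]\), while the supremum and coupling forms take values in \([0,1]\).

```python
from itertools import product

import numpy as np

p = np.array([0.5, 0.3, 0.2])
q = np.array([0.25, 0.25, 0.5])

# phi-divergence form with phi(t) = |t - 1|: values in [0, 2].
delta_phi = np.sum(np.abs(p - q))

# Supremum form, attained on A = {i : p_i >= q_i}: values in [0, 1].
A = p >= q
delta_sup = p[A].sum() - q[A].sum()

# Brute-force check of the supremum over all 2^3 subsets of the state space.
brute = max(
    abs(p[np.array(mask)].sum() - q[np.array(mask)].sum())
    for mask in product([False, True], repeat=p.size)
)

# Maximal coupling: X and Y can be made equal with probability sum_i min(p_i, q_i).
prob_not_equal = 1.0 - np.minimum(p, q).sum()

print(delta_phi, delta_sup, brute, prob_not_equal)
```

The last three numbers should coincide, and each should be exactly half of the first.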

4.4 Hellinger divergence

For this one, we write \(H^2(P,Q)\), and take \(\phi(t):=(\sqrt{t}-1)^2\). Step-by-step, that becomes

\[\begin{aligned} H^2(P,Q) &:=E_Q \left(\sqrt{\frac{dP}{dQ}}-1\right)^2 \\ &= \int \left(\sqrt{\frac{dP}{dQ}}-1\right)^2 dQ\\ &= \int \frac{dP}{dQ} dQ -2\int \sqrt{\frac{dP}{dQ}} dQ +\int dQ\\ &= \int dP -2\int \sqrt{\frac{dP}{dQ}} dQ +\int dQ\\ &= \int \sqrt{dP}^2 -2\int \sqrt{dP}\sqrt{dQ} +\int \sqrt{dQ}^2\\ &=\int (\sqrt{dP}-\sqrt{dQ})^2 \end{aligned}\]

It turns out to be another symmetrical \(\phi\)-divergence. The square root of the Hellinger divergence \(H=\sqrt{H^2}\) is the Hellinger distance on the space of probability measures which is a true distance. (Exercise: prove.)

It doesn’t look intuitive, but has convenient properties for proving inequalities (simple relationships with other norms, triangle inequality) and magically good estimation properties (Beran 1977), e.g. in robust statistics.

🏗 make some of these “convenient properties” explicit.

For now, see Djalil who defines both Hellinger distance

\[\mathrm{H}(\mu,\nu) ={\Vert\sqrt{f}-\sqrt{g}\Vert}_{\mathrm{L}^2(\lambda)} =\Bigr(\int(\sqrt{f}-\sqrt{g})^2\mathrm{d}\lambda\Bigr)^{1/2}.\]

and Hellinger affinity

\[\mathrm{A}(\mu,\nu) =\int\sqrt{fg}\mathrm{d}\lambda, \quad \mathrm{H}(\mu,\nu)^2 =2-2A(\mu,\nu).\]
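A quick discrete check of the identity \(\mathrm{H}^2=2-2\mathrm{A}\), and of the triangle inequality for \(\mathrm{H}\), using the unnormalised convention above. The three distributions and the function names are arbitrary choices for illustration.

```python
import numpy as np

def hellinger_sq(p, q):
    """Unnormalised squared Hellinger distance: sum_i (sqrt(p_i) - sqrt(q_i))^2."""
    return float(np.sum((np.sqrt(p) - np.sqrt(q)) ** 2))

def hellinger_affinity(p, q):
    """Hellinger affinity (Bhattacharyya coefficient): sum_i sqrt(p_i * q_i)."""
    return float(np.sum(np.sqrt(p * q)))

p = np.array([0.5, 0.3, 0.2])
q = np.array([0.25, 0.25, 0.5])
r = np.array([0.1, 0.1, 0.8])

h2 = hellinger_sq(p, q)
aff = hellinger_affinity(p, q)
print("H^2:", h2, "2 - 2A:", 2 - 2 * aff)

# H itself (not H^2) is the true distance, so it should obey the triangle inequality.
h_pq, h_qr, h_pr = (np.sqrt(hellinger_sq(a, b)) for a, b in [(p, q), (q, r), (p, r)])
print("triangle inequality holds:", h_pr <= h_pq + h_qr)
```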

4.5 \(\alpha\)-divergence

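A subfamily of the f-divergences with a particular parameterisation, closely related to (but not quite the same as) the Rényi divergences, which are monotone transformations of them. Includes KL and reverse-KL as limiting cases (\(\alpha\to\pm 1\)) and Hellinger, up to a constant factor, at \(\alpha=0\).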

We take \(\phi(t):=\frac{4}{1-\alpha^2} \left(1-t^{(1+\alpha )/2}\right).\)

This gets fiddly to write out in full generality, with various undefined or infinite integrals needing definitions in terms of limits and is supposed to be constructed in terms of “Hellinger integral”…? I will ignore that for now and write out a simple enough version. See (Liese and Vajda 2006; van Erven and Harremoës 2014) for gory details.

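The Rényi divergence of order \(\alpha\), in the parameterisation of van Erven and Harremoës (which is not the same \(\alpha\) as in \(\phi\) above), is

\[D_\alpha(P,Q):=\frac{1}{\alpha-1}\log\int \left(\frac{p}{q}\right)^{\alpha-1}dP\]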
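A minimal discrete sketch of the Rényi divergence, assuming the van Erven and Harremoës convention \(D_\alpha(P,Q)=\frac{1}{\alpha-1}\log\sum_i p_i^\alpha q_i^{1-\alpha}\) and strictly positive probabilities. It illustrates three standard special cases: the limit \(\alpha\to 1\) recovers KL, order \(1/2\) is \(-2\log\) of the Hellinger affinity, and order 2 is \(\log(1+\chi^2)\).

```python
import numpy as np

def renyi(p, q, alpha):
    """Renyi divergence of order alpha (alpha > 0, alpha != 1), for strictly positive p, q."""
    return float(np.log(np.sum(p ** alpha * q ** (1.0 - alpha))) / (alpha - 1.0))

def kl(p, q):
    """KL(P, Q) for strictly positive discrete p, q."""
    return float(np.sum(p * np.log(p / q)))

p = np.array([0.5, 0.3, 0.2])
q = np.array([0.25, 0.25, 0.5])

affinity = np.sum(np.sqrt(p * q))       # Hellinger affinity
chi2 = np.sum((p - q) ** 2 / q)         # chi-squared divergence

print("alpha -> 1:", renyi(p, q, 1 + 1e-6), "KL:", kl(p, q))
print("alpha = 1/2:", renyi(p, q, 0.5), "-2 log A:", -2 * np.log(affinity))
print("alpha = 2:", renyi(p, q, 2.0), "log(1 + chi^2):", np.log1p(chi2))
```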

4.6 \(\chi^2\) divergence

As made famous by count data significance tests.

For this one, we write \(\chi^2\), and take \(\phi(t):=(t-1)^2\). Then, by the same old process…

\[\begin{aligned} \chi^2(P,Q) &:=E_Q \left(\frac{dP}{dQ}-1\right)^2 \\ &= \int \left(\frac{dP}{dQ}-1\right)^2 dQ\\ &= \int \left(\frac{dP}{dQ}\right)^2 dQ - 2 \int \frac{dP}{dQ} dQ + \int dQ\\ &= \int \frac{dP}{dQ} dP - 1 \end{aligned}\]

Normally you see this for discrete data indexed by \(i\), in which case we may write

\[\begin{aligned} \chi^2(P,Q) &= \left(\sum_i \frac{p_i}{q_i} p_i\right) - 1\\ &= \sum_i\left( \frac{p_i^2}{q_i} - q_i\right)\\ &= \sum_i \frac{p_i^2-q_i^2}{q_i}\\ \end{aligned}\]

If you have constructed these discrete probability mass functions from \(N\) samples, say, \(p_i:=\frac{n^P_i}{N}\) and \(q_i:=\frac{n^Q_i}{N}\), this becomes

\[\chi^2(P,Q) = \sum_i \frac{(n^P_i)^2-(n^Q_i)^2}{Nn^Q_i}\]
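If that form does not look familiar, it may help to see that it agrees with the textbook Pearson statistic \(\sum_i (O_i-E_i)^2/E_i\) divided by \(N\), treating the \(Q\) counts as "expected". A small sketch with made-up counts (both samples must have the same total \(N\)):

```python
import numpy as np

n_p = np.array([18, 30, 52])   # hypothetical observed counts for P
n_q = np.array([25, 25, 50])   # hypothetical counts for Q, same total
N = n_p.sum()

# The form derived above.
chi2_notebook = np.sum((n_p**2 - n_q**2) / (N * n_q))

# Pearson's statistic sum (O - E)^2 / E, rescaled by 1 / N.
chi2_pearson_over_N = np.sum((n_p - n_q) ** 2 / n_q) / N

print(chi2_notebook, chi2_pearson_over_N)
```

The two agree because \(\sum_i n^P_i=\sum_i n^Q_i=N\), so the cross term in the expanded square collapses.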

This is probably familiar from some primordial statistics class.

The main use of this one is its ancient pedigree, (used by Pearson in 1900, according to Wikipedia) and its non-controversiality, so you include it in lists wherein you wish to mention you have a hipper alternative.

🏗 Reverse Pinsker inequalities (e.g. (Berend, Harremoës, and Kontorovich 2012)), and covering numbers and other such horrors.

4.7 Hellinger inequalities

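With respect to the total variation distance, normalised here as \(\delta(P,Q)=\sup_B|P(B)-Q(B)|\in[0,1]\), and with the Hellinger divergence normalised as \(H^2(P,Q)=\tfrac{1}{2}\int(\sqrt{dP}-\sqrt{dQ})^2\in[0,1]\),

\[H^2(P,Q) \leq \delta(P,Q) \leq \sqrt 2 H(P,Q)\,.\]

With respect to the KL divergence,

\[H^2(P,Q) \leq \operatorname{KL}(P,Q)\]

Additionally,

\[0\leq H^2(P,Q) \leq H(P,Q) \leq 1\]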
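These inequalities are sensitive to normalisation, so a numerical spot check is reassuring. The sketch below assumes both quantities are scaled to \([0,1]\), i.e. \(\delta=\tfrac{1}{2}\sum_i|p_i-q_i|\) and \(H^2=\tfrac{1}{2}\sum_i(\sqrt{p_i}-\sqrt{q_i})^2\), and tests random discrete distributions drawn from a flat Dirichlet (an arbitrary choice).

```python
import numpy as np

rng = np.random.default_rng(1)

def tv(p, q):
    """Total variation, normalised to [0, 1]."""
    return 0.5 * np.sum(np.abs(p - q))

def hellinger_sq_normalised(p, q):
    """Squared Hellinger distance, normalised to [0, 1]."""
    return 0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)

def kl(p, q):
    return np.sum(p * np.log(p / q))

tol = 1e-12  # slack for floating-point rounding
violations = 0
for _ in range(1000):
    p = rng.dirichlet(np.ones(5))
    q = rng.dirichlet(np.ones(5))
    h2 = hellinger_sq_normalised(p, q)
    d = tv(p, q)
    ok = (
        h2 <= d + tol
        and d <= np.sqrt(2 * h2) + tol
        and h2 <= kl(p, q) + tol
        and 0 <= h2 <= np.sqrt(h2) <= 1
    )
    violations += not ok

print("violations:", violations)
```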

4.8 Pinsker inequalities

(Berend, Harremoës, and Kontorovich 2012) attribute this to Csiszár (a 1967 article I could not find) and Kullback (Kullback 1970, 1967) instead of (Pinsker 1980) (which is in any case in Russian and I haven’t read it). With \(\delta\) the supremum form of total variation, taking values in \([0,1]\),

\[\delta(P,Q) \leq \sqrt{\frac{1}{2} D_{K L}(P\|Q)}\]
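A minimal numerical illustration of Pinsker's inequality on pairs of Bernoulli distributions, where everything has a closed form: the \([0,1]\)-normalised total variation is \(|a-b|\) and KL is computed in nats. The parameter grid is an arbitrary choice.

```python
import numpy as np

a = np.linspace(0.01, 0.99, 99)
A, B = np.meshgrid(a, a, indexing="ij")   # all pairs Bernoulli(A) vs Bernoulli(B)

tv = np.abs(A - B)
kl = A * np.log(A / B) + (1 - A) * np.log((1 - A) / (1 - B))
bound = np.sqrt(0.5 * np.maximum(kl, 0.0))  # guard against tiny negative rounding

gap = bound - tv
print("smallest slack in the bound:", gap.min())
print("largest slack in the bound: ", gap.max())
```

The slack is near zero for nearby parameters and grows quickly when one distribution is close to degenerate, which is one reason the sharper generalised Pinsker bounds exist.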

(Reid and Williamson 2009) derive the best-possible generalised Pinsker inequalities, in a certain sense of “best” and “generalised”, i.e. they are tight bounds, but not necessarily convenient.

Here are the most useful 3 of their inequalities, with \(P,Q\) arguments omitted and \(\delta\in[0,2]\) in the \(\phi(t)=|t-1|\) normalisation:

\[\begin{aligned} H^2 &\geq 2-\sqrt{4-\delta^2} \\ \chi^2 &\geq \mathbb{I}\{\delta< 1\}\delta^2+\mathbb{I}\{\delta\geq 1\}\frac{\delta}{2-\delta}\\ \operatorname{KL} &\geq \min_{\beta\in [\delta-2,2-\delta]}\left(\frac{\delta+2-\beta}{4}\right) \log\left(\frac{\beta-2-\delta}{\beta-2+\delta}\right) + \left(\frac{\beta+2-\delta}{4}\right) \log\left(\frac{\beta+2-\delta}{\beta+2+\delta}\right) \end{aligned}\]

5 Stein discrepancies

Especially the kernelized kind. A discrepancy arising from Stein’s method, famous for its use in Stein Variational Gradient Descent. Xu and Matsuda (2021) summarise:

Let \(q\) be a smooth probability density on \(\mathbb{R}^{d} .\) For a smooth function \(\mathbf{f}=\) \(\left(f_{1}, \ldots, f_{d}\right): \mathbb{R}^{d} \rightarrow \mathbb{R}^{d}\), the Stein operator \(\mathcal{T}_{q}\) is defined by \[ \mathcal{T}_{q} \mathbf{f}(x)=\sum_{i=1}^{d}\left(f_{i}(x) \frac{\partial}{\partial x^{i}} \log q(x)+\frac{\partial}{\partial x^{i}} f_{i}(x)\right) \]

…Let \(\mathcal{H}\) be a reproducing kernel Hilbert space \((\mathrm{RKHS})\) on \(\mathbb{R}^{d}\) and \(\mathcal{H}^{d}\) be its product. By using Stein operator, kernel Stein discrepancy (KSD) (Gorham and Mackey 2015; Ley, Reinert, and Swan 2017) between two densities \(p\) and \(q\) is defined as \[ \operatorname{KSD}(p \| q)=\sup _{\|\mathbf{f}\|_{\mathcal{H}} \leq 1} \mathbb{E}_{p}\left[\mathcal{T}_{q} \mathbf{f}\right] \] It is shown that \(\operatorname{KSD}(p \| q) \geq 0\) and \(\mathrm{KSD}(p \| q)=0\) if and only if \(p=q\) under mild regularity conditions (Chwialkowski, Strathmann, and Gretton 2016). Thus, KSD is a proper discrepancy measure between densities. After some calculation, \(\operatorname{KSD}(p \| q)\) is rewritten as \[ \operatorname{KSD}^{2}(p \| q)=\mathbb{E}_{x, \tilde{x} \sim p}\left[h_{q}(x, \tilde{x})\right] \] where \(h_{q}\) does not involve \(p\).
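The final expression suggests a plug-in estimator: average \(h_q\) over all pairs of samples from \(p\). Below is a minimal one-dimensional sketch, assuming an RBF kernel with unit bandwidth and a standard normal target \(q\), whose score is \(\partial_x \log q(x) = -x\). The function name, the bandwidth and the V-statistic form (which includes the diagonal pairs) are my choices, not something taken from the cited papers. For this kernel, \(h_q(x,y) = s(x)s(y)k + s(x)\partial_y k + s(y)\partial_x k + \partial_x\partial_y k\), where \(s\) is the score of \(q\).

```python
import numpy as np

def ksd_squared_vstat(x, score, bandwidth=1.0):
    """V-statistic estimate of KSD^2(p || q) from 1-D samples x ~ p, given the score of q."""
    x = np.asarray(x, dtype=float)
    d = x[:, None] - x[None, :]                    # pairwise differences x_i - x_j
    k = np.exp(-d**2 / (2 * bandwidth**2))         # RBF kernel k(x_i, x_j)
    dk_dx = -d / bandwidth**2 * k                  # derivative in the first argument
    dk_dy = d / bandwidth**2 * k                   # derivative in the second argument
    d2k = (1.0 / bandwidth**2 - d**2 / bandwidth**4) * k   # mixed second derivative
    s = score(x)
    h = (s[:, None] * s[None, :] * k
         + s[:, None] * dk_dy
         + s[None, :] * dk_dx
         + d2k)
    return float(h.mean())

def standard_normal_score(x):
    """Score of q = N(0, 1); no normalising constant is needed."""
    return -x

rng = np.random.default_rng(0)
x_good = rng.normal(size=500)            # samples that really come from q
x_bad = rng.normal(loc=1.5, size=500)    # samples from a shifted distribution

ksd_good = ksd_squared_vstat(x_good, standard_normal_score)
ksd_bad = ksd_squared_vstat(x_bad, standard_normal_score)
print("KSD^2, matching samples:", ksd_good)
print("KSD^2, shifted samples: ", ksd_bad)
```

Only samples from \(p\) and the score of \(q\) are needed, which is why this discrepancy is attractive when \(q\) is known only up to a normalising constant.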

See more under Stein Variational Gradient Descent.

6 Integral probability metrics

\[ D_{\mathcal{H}}(P, Q)=\sup _{g \in \mathcal{H}}\left|\mathbf{E}_{X \sim P} g(X)-\mathbf{E}_{Y \sim Q} g(Y)\right| \]

Included:

- Total Variation

- Optimal transport metrics

- Maximum mean discrepancy.

- others based on the function class
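As one concrete instance, taking \(\mathcal{H}\) to be the unit ball of a reproducing kernel Hilbert space gives the maximum mean discrepancy, whose square can be estimated from samples using kernel evaluations alone. A minimal sketch with an RBF kernel and the biased (V-statistic) estimator; the bandwidth, sample sizes and function names are arbitrary assumptions.

```python
import numpy as np

def rbf_kernel(x, y, bandwidth=1.0):
    """RBF kernel matrix between 1-D sample arrays x and y."""
    d2 = (x[:, None] - y[None, :]) ** 2
    return np.exp(-d2 / (2 * bandwidth**2))

def mmd_squared_biased(x, y, bandwidth=1.0):
    """Biased estimate of MMD^2: mean k(x, x') + mean k(y, y') - 2 mean k(x, y)."""
    return float(
        rbf_kernel(x, x, bandwidth).mean()
        + rbf_kernel(y, y, bandwidth).mean()
        - 2 * rbf_kernel(x, y, bandwidth).mean()
    )

rng = np.random.default_rng(0)
x = rng.normal(size=400)
y_same = rng.normal(size=400)            # same distribution as x
y_shift = rng.normal(loc=2.0, size=400)  # shifted distribution

mmd_same = mmd_squared_biased(x, y_same)
mmd_shift = mmd_squared_biased(x, y_shift)
print("same distribution:   ", mmd_same)
print("shifted distribution:", mmd_shift)
```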

7 Wasserstein distances

8 Bounded Lipschitz distance

This monster metrizes convergence in distribution. a.k.a. I think, Lévy metric. Everyone cites Dudley (2002) for this. Looks like maybe this is just a special Wasserstein distance, but I need to check.

TODO: harmonize notation.

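Writing \(P(x)\) and \(Q(x)\) for the cumulative distribution functions, the Lévy metric is

\[D_L(P,Q) := \inf\{\epsilon >0: P(x-\epsilon)-\epsilon \leq Q(x)\leq P(x+\epsilon)+\epsilon \;\;\forall x\} \]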

Piccoli and Rossi (2016) summarise:

Recall that the flat metric or bounded Lipschitz distance is defined as follows: \[ d(\mu, \nu):=\sup \left\{\int_{\mathbb{R}^{d}} f d(\mu-\nu) \mid\|f\|_{C^{0}} \leq 1,\|f\|_{L i p} \leq 1\right\} \] We first show that the generalized Wasserstein distance \(W_{1}^{1,1}\) coincides with the flat metric. This provides the following duality formula: \[ d(\mu, \nu)=W_{1}^{1,1}(\mu, \nu)=\inf _{\tilde{\mu}, \tilde{\nu} \in \mathcal{M},|\tilde{\mu}|=|\tilde{\nu}|}|\mu-\tilde{\mu}|+|\nu-\tilde{\nu}|+W_{1}(\tilde{\mu}, \tilde{\nu}) \] This result can be seen as a generalization of the Kantorovich-Rubinstein theorem, which provides the duality: \[ W_{1}(\mu, \nu)=\sup \left\{\int_{\mathbb{R}^{d}} f d(\mu-\nu) \mid\|f\|_{L i p} \leq 1\right\}. \]

Ummmm. 🏗️.

9 Fisher distances

Specifically \((p,\nu)\)-Fisher distances, in the terminology of (J. H. Huggins et al. 2018). They use these distances as a computationally tractable proxy for Wasserstein distance.

For a Borel measure \(\nu\), let \(L_p(\nu)\) denote the space of functions that are \(p\)-integrable with respect to \(\nu\): \[ \phi \in L_p(\nu) \Leftrightarrow \|\phi\|_{L_p(\nu)} = \left(\int|\phi(\theta)|^p\nu(d\theta)\right)^{1/p} < \infty. \] Let \(U = - \log d\eta/d\theta\) and \(\hat{U} = - \log d\hat{\eta}/d\theta\) denote the potential energy functions associated with, respectively, \(\eta\) and \(\hat{\eta}\).

Then the \((p, \nu)\)-Fisher distance is given by \[\begin{aligned} d_{p,\nu}(\eta,\hat{\eta}) &=\big\|\|\nabla U-\nabla \hat{U}\|_2\big\|_{L_p(\nu)}\\ &= \left(\int\|\nabla U(\theta)-\nabla \hat{U}(\theta)\|_2^p\nu(d\theta)\right)^{1/p}. \end{aligned}\]
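A minimal one-dimensional Monte Carlo sketch of this distance for two Gaussians, with \(\nu\) taken to be a standard normal. That choice of \(\nu\), the Gaussian example and the function names are assumptions for illustration. For \(\eta=\mathcal{N}(\mu,\sigma^2)\) the potential gradient is \(\nabla U(\theta)=(\theta-\mu)/\sigma^2\), so with equal variances the gradient difference is constant and the distance is exactly \(|\mu-\hat{\mu}|/\sigma^2\).

```python
import numpy as np

def grad_potential_gaussian(theta, mu, sigma):
    """Gradient of U(theta) = -log N(theta; mu, sigma^2), i.e. (theta - mu) / sigma^2."""
    return (theta - mu) / sigma**2

def fisher_distance_1d(grad_u, grad_u_hat, nu_samples, p=2):
    """Monte Carlo estimate of (int |grad U - grad U_hat|^p d nu)^(1/p) in one dimension."""
    diff = np.abs(grad_u(nu_samples) - grad_u_hat(nu_samples))
    return float(np.mean(diff**p) ** (1.0 / p))

rng = np.random.default_rng(0)
nu_samples = rng.normal(size=10_000)   # nu = N(0, 1), an arbitrary choice

d = fisher_distance_1d(
    lambda t: grad_potential_gaussian(t, 0.0, 1.0),
    lambda t: grad_potential_gaussian(t, 0.3, 1.0),
    nu_samples,
)
print("Fisher distance:", d)
```

Only gradients of log densities appear, so any additive constant in \(U\) (the log normaliser) drops out.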

This avoids an inconvenient posterior normalising calculation in Bayes.

10 Others

- “P-divergence”: Metrizes convergence in probability. Note this is defined upon random variables with an arbitrary joint distribution, not upon two distributions per se.

- Kolmogorov metric: the \(L_\infty\) metric between the cumulative distributions (i.e. not between densities)

  \[D_K(P,Q):= \sup_x \left\{ |P(x) - Q(x)| \right\}\]

  Nonetheless it does look similar to Total Variation, doesn’t it?

- Skorokhod: Hmmm.

What even are the Kuiper and Prokhorov metrics?
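Of the metrics in this list, the Kolmogorov metric is the easiest to estimate from samples: it is the largest gap between two empirical CDFs, and for step functions that gap is attained at a data point. A minimal sketch (the function name is mine):

```python
import numpy as np

def kolmogorov_distance(x, y):
    """sup_t |F_x(t) - F_y(t)| between the empirical CDFs of samples x and y."""
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    grid = np.concatenate([x, y])   # the supremum is attained at a sample point
    cdf_x = np.searchsorted(x, grid, side="right") / x.size
    cdf_y = np.searchsorted(y, grid, side="right") / y.size
    return float(np.max(np.abs(cdf_x - cdf_y)))

rng = np.random.default_rng(0)
a = rng.normal(size=1000)
b = rng.normal(loc=0.1, size=1000)
print("Kolmogorov distance:", kolmogorov_distance(a, b))
```

This also shows how it differs from total variation: two empirical distributions of continuous data almost surely share no atoms, so their total variation is maximal, while their Kolmogorov distance can be small.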

11 Induced topologies

There is a synthesis of the importance of the topologies induced by each of these metrics, which I read in (Arjovsky, Chintala, and Bottou 2017), and which they credit to (Billingsley 2013; Villani 2009).

In this paper, we direct our attention on the various ways to measure how close the model distribution and the real distribution are, or equivalently, on the various ways to define a distance or divergence \(\rho(P_{\theta},P_{r})\). The most fundamental difference between such distances is their impact on the convergence of sequences of probability distributions. A sequence of distributions \((P_{t}) _{t\in \mathbb{N}}\) converges if and only if there is a distribution \(P_{\infty}\) such that \(\rho(P_{t}, P_{\infty} )\) tends to zero, something that depends on how exactly the distance \(\rho\) is defined. Informally, a distance \(\rho\) induces a weaker topology when it makes it easier for a sequence of distribution to converge. […]

In order to optimize the parameter \({\theta}\), it is of course desirable to define our model distribution \(P_{\theta}\) in a manner that makes the mapping \({\theta} \mapsto P_{\theta}\) continuous. Continuity means that when a sequence of parameters \(\theta_t\) converges to \({\theta}\), the distributions \(P_{\theta_t}\) also converge to \(P_{\theta}.\) […] If \(\rho\) is our notion of distance between two distributions, we would like to have a loss function \(\theta \mapsto\rho(P_{\theta},P_r)\) that is continuous […]

12 To read

- This guy’s study blog

- Anand Sarwate: C.R. Rao and information geometry

- (Berend, Harremoës, and Kontorovich 2012) on reverse Pinsker inequalities.

The GeomLoss library provides efficient GPU implementations for:

- Kernel norms (also known as Maximum Mean Discrepancies).

- Hausdorff divergences, which are positive definite generalizations of the Chamfer-ICP loss and are analogous to log-likelihoods of Gaussian Mixture Models.

- Debiased Sinkhorn divergences, which are affordable yet positive and definite approximations of Optimal Transport (Wasserstein) distances.

It is hosted on GitHub and distributed under the permissive MIT license.

GeomLoss functions are available through the custom PyTorch layers SamplesLoss, ImagesLoss and VolumesLoss which allow you to work with weighted point clouds (of any dimension), density maps and volumetric segmentation masks.