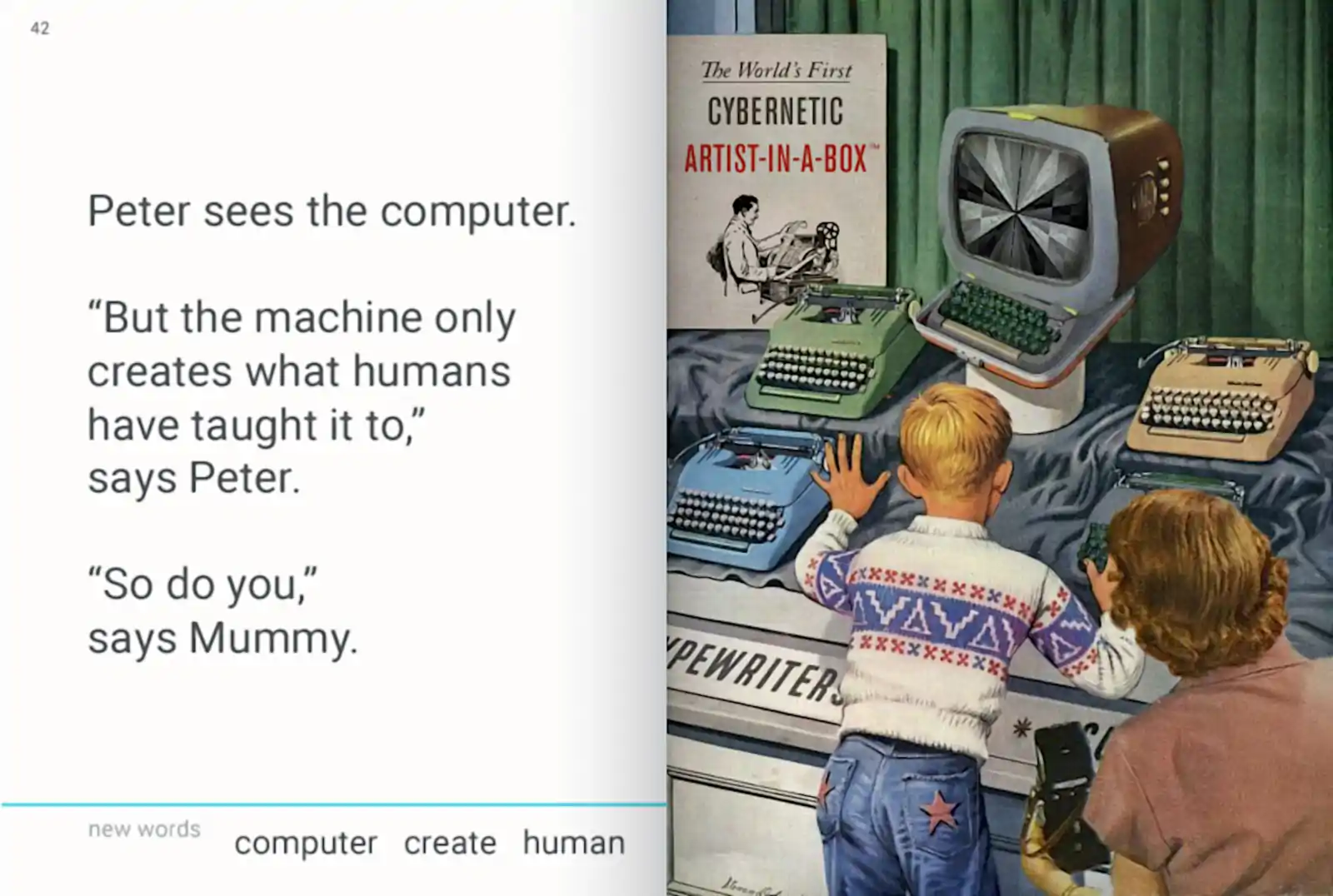

Here’s how I would do art with machine learning if I had to

June 6, 2016 — February 1, 2022


I’ve a weakness for ideas that give me plausible deniability for making generative art while doing my maths homework.

NB: I have recently tidied this page up, but the content is not fresh; there is too much happening in the field to document.

This page is more chaotic than the already-chaotic median, sorry. Good luck making sense of it. The problem is that this notebook is in the anti-sweet spot of “stuff I know too much about to need notes but not working on enough to promote”.

Some neural networks are generative, in the sense that if you train ’em to classify things, they can also generate new members of the class. e.g. run the model forwards, it recognises melodies; run it “backwards”, it composes melodies. Or rather, you may have trained them to generate examples in the course of training them to detect examples. There are many definitional and practical wrinkles, and this ability is not unique to artificial neural networks, but it is a great convenience, and the gods of machine learning have blessed us with much infrastructure to exploit this feature, because it is close to actual profitable algorithms. Upshot: there is now a lot of computation and grad-student labour directed at producing neural networks which, as a byproduct, can produce faces, chairs, film dialogue, symphonies and so on.
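Here is a minimal sketch of the “run it backwards” trick, assuming the simplest differentiable classifier I can think of: logistic regression with fixed, made-up weights `w` and `b` standing in for a trained model. We freeze the weights and do gradient ascent on the input instead (activation maximisation), with a small L2 penalty so the input does not run off to infinity. For a deep network you would get the gradient from autodiff rather than the hand-derived `(1 - p) * w`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def invert_classifier(w, b, x0, steps=100, lr=0.1, l2=0.01):
    """Gradient ascent on the input x of a frozen logistic classifier
    p(class | x) = sigmoid(w @ x + b), penalised by l2 * ||x||^2."""
    x = x0.astype(float).copy()
    for _ in range(steps):
        p = sigmoid(w @ x + b)
        # d/dx log p = (1 - p) * w; the penalty contributes -2 * l2 * x
        grad = (1.0 - p) * w - 2.0 * l2 * x
        x += lr * grad
    return x

rng = np.random.default_rng(0)
w = rng.normal(size=16)  # hypothetical "trained" weights
b = -1.0
x0 = np.zeros(16)        # start from a blank input
x = invert_classifier(w, b, x0)
print(sigmoid(w @ x0 + b), "->", sigmoid(w @ x + b))
```

The output `x` is the input this toy model finds most class-like; with a real image classifier the same loop produces the familiar hallucinatory pictures.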

Perhaps other people will be more across this?

Oh, and also Google’s AMI (Artists and Machine Intelligence) channel, and ml4artists, which publishes sweet machine-learning-for-artists topic guides.

There are NeurIPS streams about this now.

1 Visual synthesis

There is a lot going on, which I should triage. Important example: Maybe I should do generative art with neural diffusion networks.
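Since diffusion models come up here: the forward (noising) half of a denoising diffusion model is short enough to sketch. This assumes a linear β schedule from 1e-4 to 0.02 over T = 1000 steps, which is a common choice but only one of several; the hard part, a network trained to undo the noise step by step, is not shown.

```python
import numpy as np

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t and their cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, T)
    alpha_bars = np.cumprod(1.0 - betas)
    return betas, alpha_bars

def q_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I)."""
    eps = rng.standard_normal(x0.shape)
    a = alpha_bars[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps, eps

rng = np.random.default_rng(0)
betas, alpha_bars = linear_beta_schedule()
image = rng.uniform(-1, 1, size=(32, 32, 3))  # stand-in for an image scaled to [-1, 1]
x_early, _ = q_sample(image, 10, alpha_bars, rng)   # barely perturbed
x_late, _ = q_sample(image, 999, alpha_bars, rng)   # essentially pure noise
```

The generative model is then trained to predict `eps` from `x_t` and `t`; sampling runs that prediction backwards from noise.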

1.1 AI image editors

1.2 Style transfer and deep dreaming

You can do style transfer in a number of ways, including NN inversion and GANs.
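The NN-inversion flavour of style transfer usually optimises an image so that statistics of a pretrained network’s features match those of the style image. A minimal sketch of the most common such statistic, the Gram matrix of one layer’s channel activations, assuming you already have activations shaped (channels, height, width) from somewhere such as VGG; random arrays stand in for them here, and normalising by H·W is one convention among several.

```python
import numpy as np

def gram_matrix(features):
    """features: (C, H, W) activations from one layer. Returns the (C, C)
    matrix of channel co-activations, averaged over spatial positions."""
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (h * w)

def style_loss(gen_layers, style_layers, weights=None):
    """Weighted sum over layers of squared Frobenius distance between Gram matrices."""
    weights = weights or [1.0] * len(gen_layers)
    return sum(
        wt * np.sum((gram_matrix(g) - gram_matrix(s)) ** 2)
        for wt, g, s in zip(weights, gen_layers, style_layers)
    )

def shuffle_positions(f, rng):
    """Randomly permute spatial positions, keeping each position's channel vector intact."""
    c, h, w = f.shape
    perm = rng.permutation(h * w)
    return f.reshape(c, h * w)[:, perm].reshape(c, h, w)

rng = np.random.default_rng(0)
style_feats = [rng.random((8, 16, 16)), rng.random((16, 8, 8))]  # stand-ins for VGG activations
shuffled = [shuffle_positions(f, rng) for f in style_feats]
other = [rng.random(f.shape) for f in style_feats]
print(style_loss(shuffled, style_feats), style_loss(other, style_feats))
```

Shuffling spatial positions leaves the Gram matrix unchanged (up to rounding), which is why this loss captures texture rather than layout; a separate content loss on raw activations usually supplies the layout.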

See those classic images from Google’s tripped-out image recognition systems. Here’s a good explanation of what is going on.


Our mission is to provide a novel artistic painting tool that allows everyone to create and share artistic pictures with just a few clicks. All you need to do is upload a photo and choose your favourite style. Our servers will then render your artwork for you.

For example, OPEN_NSFW was my favourite (NSFW).

Differentiable Image Parameterizations looks at style transfer with respect to different decompositions of the image surface. (There is stuff to follow up about checkerboard artefacts in NNs which I suspect is generally important.)
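As I understand it, one of the parameterizations explored in Differentiable Image Parameterizations optimises the image’s Fourier coefficients rather than its pixels, scaled so that low frequencies dominate. A rough sketch of that mapping, under my own assumptions (1/f^decay scaling, a floor at the lowest nonzero frequency, no colour decorrelation); `fourier_image` is a hypothetical helper, not the article’s code.

```python
import numpy as np

def fourier_image(params, h, w, decay=1.0):
    """Map real parameters of shape (C, h, w // 2 + 1, 2) to a real (C, h, w) image.
    Each frequency's coefficient is scaled by 1 / f**decay, so equal-sized
    optimiser steps on params move low frequencies more than high ones."""
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.rfftfreq(w)[None, :]
    freqs = np.sqrt(fx ** 2 + fy ** 2)
    scale = 1.0 / (np.maximum(freqs, 1.0 / max(h, w)) ** decay)
    spectrum = (params[..., 0] + 1j * params[..., 1]) * scale
    return np.fft.irfft2(spectrum, s=(h, w))

rng = np.random.default_rng(0)
h, w = 64, 64
params = 0.01 * rng.standard_normal((3, h, w // 2 + 1, 2))
img = fourier_image(params, h, w)  # smooth-ish coloured noise, shape (3, 64, 64)
```

In practice `params` would be the thing an optimiser updates, with the style or feature-visualisation loss computed on `img`.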

Self-Organising Textures stitches neural cellular automata and style transfer together, using features from a pretrained VGG network as the loss function for training textures.

Deep Dream Generator does the classic deep-dreaming-style perturbations.
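The core of deep dreaming is gradient ascent on the input image to amplify whatever some layer already responds to. A toy sketch, assuming a single random ReLU layer `W` standing in for a layer of a trained convnet, and a flat vector standing in for an image; real implementations take the gradient by autodiff through the network and usually add octaves (multiple scales) and random jitter.

```python
import numpy as np

def objective(x, W):
    """0.5 * squared norm of the layer's ReLU activations."""
    return 0.5 * np.sum(np.maximum(W @ x, 0.0) ** 2)

def dream_step(x, W, lr=0.05):
    """One deep-dream-style update on x for a toy one-layer network."""
    h = np.maximum(W @ x, 0.0)
    grad = W.T @ h  # ReLU derivative folded in: h is zero wherever W @ x <= 0
    grad /= np.abs(grad).mean() + 1e-8  # normalise step size by mean |gradient|
    return x + lr * grad

rng = np.random.default_rng(0)
W = rng.standard_normal((32, 64))  # hypothetical stand-in for a trained layer
x = rng.standard_normal(64) * 0.1  # stand-in for an image
before = objective(x, W)
for _ in range(20):
    x = dream_step(x, W)
after = objective(x, W)
```

Choosing a deeper layer, or a single channel instead of the whole layer, changes what gets amplified; that is where the dog faces and pagodas come from.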

1.3 GANs

Clever uses of GANs that are not style transfer.

- Adversarial generation is a cool hack if you hate boring stuff like labelling data sets, e.g. chair generation.

- big-sleep: A simple command line tool for text to image generation, using OpenAI’s CLIP and a BigGAN (HT Ben Leighton for pointing this out)

- iGAN: Interactive Image Generation via Generative Adversarial Networks

- interpolating style transfer.

- progressive_growing_of_gans is neat, generating infinite celebrities at high resolution (Karras et al. 2017).
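Interpolating in latent space is a common way to make those morphing-celebrity (or morphing-style) videos: pick two latent vectors, walk between them, and feed each step to the generator. A minimal sketch, assuming Gaussian latents for a hypothetical 512-dimensional generator that is not shown. Spherical interpolation (slerp) is often preferred over straight lines because high-dimensional Gaussian samples concentrate near a shell, and the linear midpoint of two such samples falls well inside it.

```python
import math
import random

def slerp(z0, z1, t):
    """Spherical linear interpolation between latent vectors z0 and z1, t in [0, 1].
    Falls back to linear interpolation when the vectors are nearly parallel."""
    dot = sum(a * b for a, b in zip(z0, z1))
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    cos_omega = max(-1.0, min(1.0, dot / (n0 * n1)))
    omega = math.acos(cos_omega)
    if abs(math.sin(omega)) < 1e-6:
        return [(1 - t) * a + t * b for a, b in zip(z0, z1)]
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(z0, z1)]

random.seed(0)
z0 = [random.gauss(0, 1) for _ in range(512)]
z1 = [random.gauss(0, 1) for _ in range(512)]
path = [slerp(z0, z1, i / 9) for i in range(10)]  # 10 latents to feed a generator
```

Each element of `path` would go through the generator to give one frame.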

1.4 Incoming

- Neural networks do a passable undergraduate Monet.

- messing with copyright lawyers’ minds by compressing films to vectors (more technical version)

- hardmaru explains compositional pattern-producing networks (Stanley 2007). These are apparently a classic type of implicit-representation NN, and probably one of the things that Jonathan McCabe has been producing for years.

- neurogram is compact, semi-untrained neural-network image synthesis in the browser.

- hardmaru introduces artists to sophisticated neural networks in the browser.

- Growing Neural Cellular Automata looks at differentiable automata on a grid for prettiness.

- Finding Mona Lisa in the Game of Life

- artbreeder is quixotically old-school.

- Alex Graves, Generating Sequences With Recurrent Neural Networks, generates handwriting.

- Relatedly, sketch-rnn is reaaaally cute.

- Autoencoding beyond pixels using a learned similarity metric (Larsen et al. 2015), with code.
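Of the items above, CPPNs are the easiest to sketch from scratch: an image is a function from pixel coordinates to intensity, and that function is a small neural network. A minimal version, assuming random untrained weights and tanh activations throughout (classic CPPNs evolve their weights and mix activation functions such as sines and Gaussians); `cppn_image` and its parameters are my own hypothetical names.

```python
import numpy as np

def cppn_image(size=128, hidden=16, layers=4, seed=0, z_scale=1.0):
    """Render a greyscale image from a random, untrained CPPN.
    Each pixel's value is a small MLP applied to that pixel's (x, y, r)
    coordinates plus a latent vector z shared by all pixels."""
    rng = np.random.default_rng(seed)
    coords = np.linspace(-1.0, 1.0, size)
    x, y = np.meshgrid(coords, coords)
    r = np.sqrt(x ** 2 + y ** 2)
    z = rng.standard_normal(2) * z_scale
    # One row per pixel: (x, y, r, z0, z1)
    inputs = np.stack([x.ravel(), y.ravel(), r.ravel(),
                       np.full(x.size, z[0]), np.full(x.size, z[1])], axis=1)
    h = inputs
    for _ in range(layers):
        W = rng.standard_normal((h.shape[1], hidden))
        h = np.tanh(h @ W)
    W_out = rng.standard_normal((hidden, 1))
    out = 1.0 / (1.0 + np.exp(-(h @ W_out)))  # sigmoid squashes to [0, 1]
    return out.reshape(size, size)

img = cppn_image(size=256, seed=3)
```

Because the picture is a function of coordinates, the same `seed` at a larger `size` renders the same image at higher resolution, while a different `seed` or `z_scale` gives a different one. To look at it, something like matplotlib’s `plt.imsave("cppn.png", img, cmap="gray")` should do.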

2 Text synthesis

At one point, Ross Goodwin’s Adventures in Narrated Reality was state-of-the-art text generation using RNNs. He even helped make a film from a script generated that way. But now, massive transformer models have left it behind technologically, if not humorously.
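Much of the charm (and the humour) of RNN text generation comes from the sampling step, where a temperature parameter trades coherence against weirdness. A minimal sketch, assuming a character-level model; to keep it self-contained, the RNN is swapped for a bigram count table that plays the same role of “give me scores for the next character”, so this is really a Markov chain, not an RNN.

```python
import math
import random
from collections import Counter, defaultdict

def softmax_with_temperature(logits, temperature=1.0):
    """Lower temperature sharpens the distribution; higher flattens it."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def train_bigrams(text):
    """Stand-in for a trained RNN: counts of which character follows which."""
    counts = defaultdict(Counter)
    for a, b in zip(text, text[1:]):
        counts[a][b] += 1
    return counts

def generate(counts, start, length=80, temperature=0.8, seed=0):
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        successors = counts.get(out[-1])
        if not successors:
            break
        chars = list(successors)
        logits = [math.log(successors[c]) for c in chars]  # log-counts as "scores"
        probs = softmax_with_temperature(logits, temperature)
        out.append(rng.choices(chars, weights=probs)[0])
    return "".join(out)

corpus = "the cat sat on the mat. the dog sat on the log. the cat saw the dog."
model = train_bigrams(corpus)
print(generate(model, "t"))
```

With log-counts as scores, temperature 1 reproduces the raw bigram frequencies; pushing it towards 0 makes the output repetitive, and raising it above 1 makes it increasingly unhinged.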

3 Music

- GuitarML/NeuralPi: Raspberry Pi guitar pedal using neural networks to emulate real amps and pedals.

- Neural Networks Emulate Any Guitar Pedal For $120

3.1 Symbolic composition via scores/MIDI/etc

See ML for composition.