Distilling Neural Nets

December 14, 2020 — January 29, 2025

Apparently, you can train a model to emulate a fancier model, and this is useful?

AFAICT the core idea here is that once you have a model, it can produce new synthetic training data, and in particular, exactly the training data we want. The result can be a surprisingly large improvement in how efficiently we use compute.

This technique aims to transfer knowledge from a large, complex model (the “teacher”) to a smaller, more efficient model (the “student”).

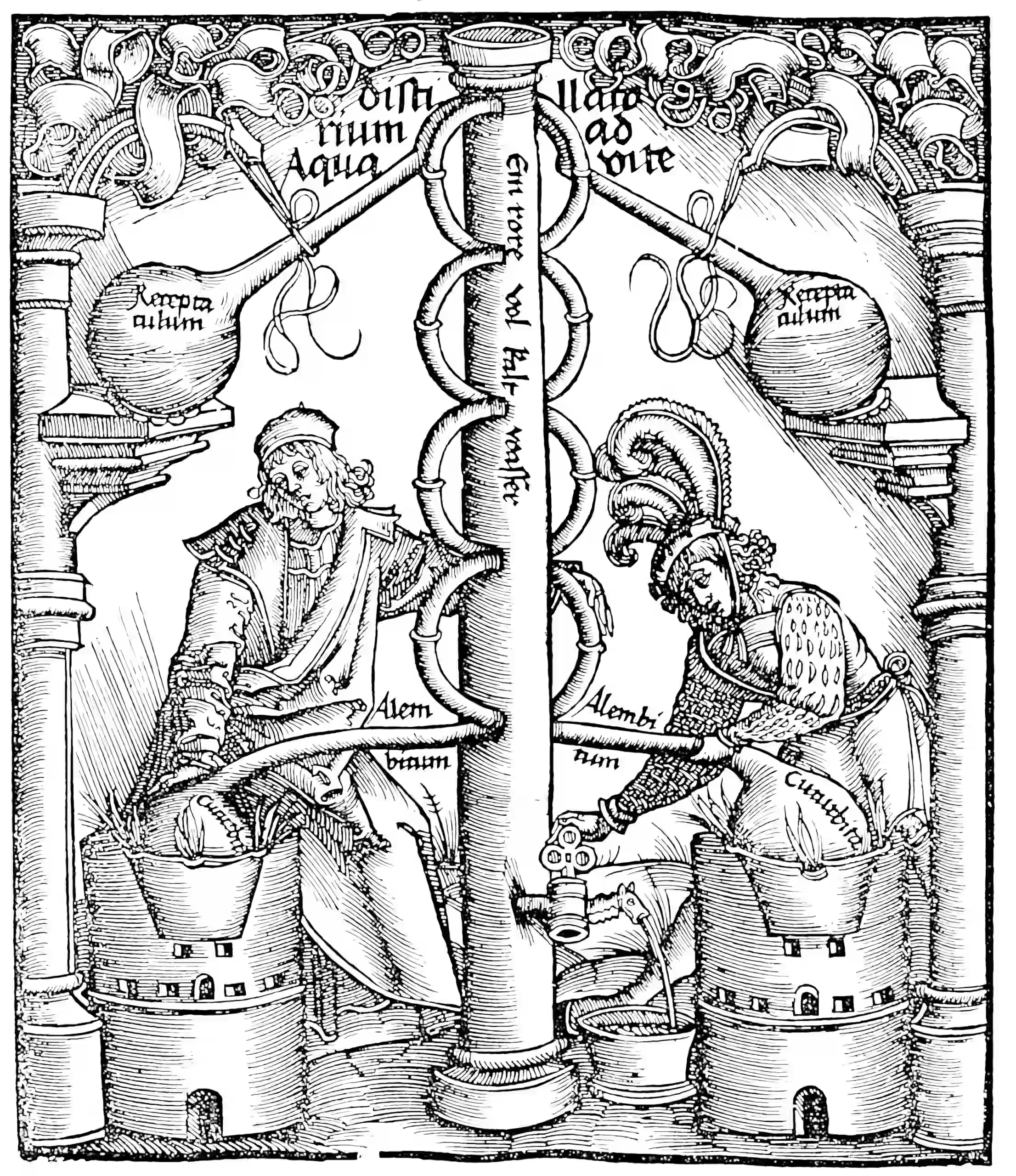

Great terminology here; Hinton, Vinyals, and Dean (2015) refer to the distilling of “dark knowledge”.

See Bubeck on this: Three mysteries in deep learning: Ensemble, knowledge distillation, and self-distillation.

Quick AI summary for me from:

1 How Model Distillation Works

The general process of model distillation involves several key steps:

Teacher Model Selection: A large, pre-trained model (e.g., GPT-4) is chosen as the teacher.

Dataset Generation: The teacher model is used to generate outputs for a wide range of inputs, creating a dataset that captures its behaviour and decision-making patterns.

Student Model Training: A smaller model is trained to mimic the teacher’s responses using the generated dataset.

Knowledge Transfer: Various techniques are employed to transfer knowledge from the teacher to the student, including:

- Supervised Fine-Tuning (SFT): The student learns to replicate the teacher’s output behaviour directly.

- Divergence and Similarity Methods: These aim to minimize the difference between the probability distributions of the teacher and student model outputs.
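To make the difference between those two knowledge-transfer flavours concrete, here is a minimal sketch in plain Python. It assumes a toy 3-class problem with made-up probabilities; `cross_entropy_hard` and `forward_kl` are illustrative names of my own, and taking the teacher's most likely class is only a stand-in for how SFT uses the teacher's generated outputs as labels.

```python
import math

def cross_entropy_hard(teacher_probs, student_probs):
    """SFT-style loss: treat the teacher's most likely output as the label."""
    label = max(range(len(teacher_probs)), key=lambda i: teacher_probs[i])
    return -math.log(student_probs[label])

def forward_kl(teacher_probs, student_probs):
    """Divergence-style loss: KL(teacher || student) over the full distribution."""
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )

# Made-up distributions over three classes (assumption, for illustration only).
teacher = [0.70, 0.25, 0.05]
student_a = [0.70, 0.25, 0.05]  # matches the teacher everywhere
student_b = [0.70, 0.05, 0.25]  # same top choice, runner-up classes swapped

for name, student in [("a", student_a), ("b", student_b)]:
    print(name, cross_entropy_hard(teacher, student), forward_kl(teacher, student))
```

Both students get the same hard-label loss, because they agree with the teacher on the top class. Only the divergence loss notices that student b has scrambled the teacher's ranking of the remaining classes.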

2 In LLMs

So hot right now. The process is especially valuable for LLMs, as it allows for the creation of more manageable and deployable models while retaining much of the performance of larger counterparts.

Weird stuff in LLMs that I would like to understand, extracted from LLM distillation demystified: a complete guide.

Rationale Extraction: Some approaches, like “distilling step-by-step,” extract not just the final output but also the reasoning process (rationale) from the teacher LLM, providing richer supervision for the student model.

Multi-task Framework: Distillation for LLMs often involves a multi-task framework, where the student model learns to perform multiple related tasks simultaneously, enhancing its overall capabilities.
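As far as I can tell from Hsieh et al. (2023), rationale extraction and the multi-task framing fit together: each teacher output becomes two training examples, distinguished by a task prefix, and the two losses are added with a weight. A rough sketch of that data layout follows, assuming `[label]` and `[rationale]` prefixes; the function names, the dictionary keys, and the example question are hypothetical.

```python
def build_multitask_examples(question, teacher_rationale, teacher_label):
    """Turn one teacher output into two student training examples.

    The task prefix tells the student which target to produce.
    """
    return [
        {"input": f"[label] {question}", "target": teacher_label},
        {"input": f"[rationale] {question}", "target": teacher_rationale},
    ]

def multitask_loss(label_loss, rationale_loss, rationale_weight=1.0):
    """Weighted sum of the label-prediction and rationale-generation losses."""
    return label_loss + rationale_weight * rationale_loss

# Hypothetical teacher output for a toy question.
examples = build_multitask_examples(
    question="Is 17 a prime number?",
    teacher_rationale="17 has no divisors other than 1 and itself.",
    teacher_label="yes",
)
for example in examples:
    print(example)
```

At inference time the student would only be asked for the `[label]` task, so the rationale acts as extra supervision during training rather than extra cost at deployment.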

Reverse KL Divergence: For generative language models, using reverse Kullback-Leibler divergence as the objective function can be more effective than the standard forward KL divergence, preventing the student model from overestimating low-probability regions of the teacher’s distribution.
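A tiny numerical sketch helps me see why the direction of the KL matters. Assume a teacher with two strong modes and a low-probability valley between them, and a student that can only pick between two candidate distributions. All the numbers below are made up for illustration.

```python
import math

def kl(p, q):
    """KL(p || q) for discrete distributions on the same support."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Teacher: two modes with a low-probability valley in the middle.
teacher = [0.495, 0.01, 0.495]

# Two candidate students (assumed, illustrative).
students = {
    "spread": [0.34, 0.32, 0.34],  # covers everything, including the valley
    "mode": [0.98, 0.01, 0.01],    # commits to a single teacher mode
}

# Forward KL is KL(teacher || student); reverse KL is KL(student || teacher).
forward = {name: kl(teacher, q) for name, q in students.items()}
reverse = {name: kl(q, teacher) for name, q in students.items()}

print("forward KL prefers:", min(forward, key=forward.get))
print("reverse KL prefers:", min(reverse, key=reverse.get))
```

Forward KL favours the spread-out student, even though it puts about a third of its mass on an outcome the teacher almost never produces. Reverse KL favours the student that commits to one mode and leaves the valley alone, which is the behaviour the paragraph above describes.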

Soft Targets: LLM distillation often uses soft targets (probability distributions) rather than hard labels, providing more nuanced information about the teacher’s decision-making process.
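For intuition about what soft targets carry that hard labels do not, here is a small sketch using the temperature-scaled softmax from Hinton, Vinyals, and Dean (2015). The logits are invented and the temperature values are arbitrary choices.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature flattens the output."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; zero for a one-hot distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

teacher_logits = [4.0, 2.5, -1.0]  # made-up logits for three classes

# Hard label: one-hot on the teacher's top class.
hard_label = [1.0 if z == max(teacher_logits) else 0.0 for z in teacher_logits]
soft_t1 = softmax(teacher_logits, temperature=1.0)
soft_t4 = softmax(teacher_logits, temperature=4.0)

for name, target in [("hard", hard_label), ("T=1", soft_t1), ("T=4", soft_t4)]:
    print(name, [round(p, 3) for p in target], "entropy:", round(entropy(target), 3))
```

The hard label says only "class 0". The soft targets also say that class 1 is a plausible runner-up and class 2 is not, and raising the temperature makes that secondary information more visible to the student.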

Feature-based and Relation-based Distillation: Advanced techniques for LLMs include learning from the teacher’s internal features or the relationships between inputs and outputs, rather than just mimicking final outputs.
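One common form of the feature-based variant, as I understand it, is to penalize the distance between the teacher's hidden activations and a projection of the student's. The sketch below assumes a mean-squared-error penalty and a linear projection from a 2-dimensional student to a 4-dimensional teacher. The vectors, the projection matrix, and the function names are all invented; in practice the projection would be learned along with the student.

```python
def project(matrix, vector):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def feature_distillation_loss(teacher_hidden, student_hidden, projection):
    """Mean squared error between teacher features and projected student features."""
    projected = project(projection, student_hidden)
    squared_errors = [(t - s) ** 2 for t, s in zip(teacher_hidden, projected)]
    return sum(squared_errors) / len(squared_errors)

teacher_hidden = [0.5, -1.0, 2.0, 0.0]  # made-up 4-dim teacher activations
student_hidden = [1.0, -0.5]            # made-up 2-dim student activations

# Hand-picked projection that happens to map this student vector onto the teacher's.
projection = [
    [0.5, 0.0],
    [0.0, 2.0],
    [2.0, 0.0],
    [0.0, 0.0],
]

print(feature_distillation_loss(teacher_hidden, student_hidden, projection))
print(feature_distillation_loss(teacher_hidden, [0.0, 0.0], projection))
```

The first call gives zero loss because the projected student features coincide with the teacher's. The second shows the penalty growing once the student's internal representation drifts away, regardless of what either model outputs.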

Online and Self-distillation: Some approaches involve concurrent training of teacher and student models or even having a model distill knowledge to itself.

Scalability: Distillation techniques for LLMs have been shown to work across a wide range of model sizes, from 120M to 13B parameters.

By leveraging these techniques, researchers and practitioners can create smaller, more efficient LLMs that maintain much of the performance of their larger counterparts. This is particularly valuable for deploying AI in resource-constrained environments or applications requiring lower latency and cost.

See also (Gu et al. 2023; Hsieh et al. 2023).

3 Incoming

- Sky-T1: Train your own O1 preview model within $450

- LLM distillation demystified: a complete guide | Snorkel AI

- Distilling step-by-step: Outperforming larger language models with less training

- Knowledge Distillation for Large Language Models: A Deep Dive - Zilliz Learn

- Model Distillation

- Model Distillation for Large Language Models | Niklas Heidloff

- A pragmatic introduction to model distillation for AI developers

- What is Model Distillation?

- LLM distillation techniques to explode in importance in 2024

- Model distillation - OpenAI API