Gaussian processes on lattices

October 30, 2019 — February 27, 2022

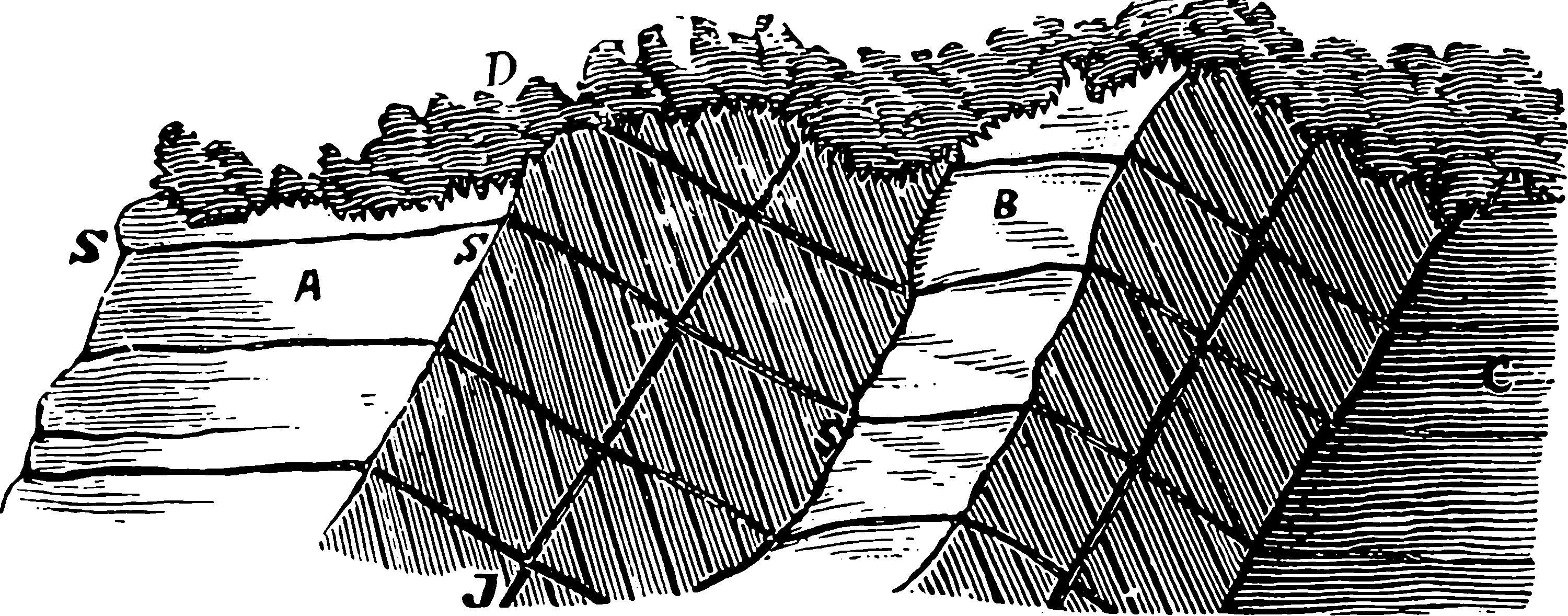

Gaussian processes with a stationary kernel are faster if you are working on a grid of points. I have not used this trick myself, but I understand it involves various simplifications arising from the structure of the Gram matrices. For a kernel that factorises across input dimensions, these end up being Kronecker products of Toeplitz matrices under lexicographic ordering of the input points. That is a lot of long words for a fairly simple thing. The keyword for this in the ML literature is Kronecker inference (e.g. Saatçi 2012; Flaxman et al. 2015; A. G. Wilson and Nickisch 2015). Another keyword is circulant embedding: embedding a Toeplitz matrix in a larger circulant matrix (or, relatedly, approximating it by one). This apparently lets one use fast Fourier transforms to calculate some quantities of interest, and brings some other nice linear algebra properties besides. That would suggest that this method has its origins in Whittle likelihoods (P. Whittle 1952, 1953a), if I am not mistaken.
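To make the Kronecker/Toeplitz claim concrete, here is a minimal NumPy sketch. It assumes a product (separable) squared-exponential kernel on a regular 2-D grid; the helpers `se_gram` and `kron_mvm` are hypothetical names, not from any library. It checks three things: the Gram matrix over lexicographically ordered grid points equals the Kronecker product of the per-axis Gram matrices, each factor is Toeplitz, and a matrix-vector product can be computed from the factors alone.

```python
import numpy as np

def se_gram(x, lengthscale):
    """Squared-exponential Gram matrix for 1-D inputs x (hypothetical helper)."""
    d = x[:, None] - x[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def kron_mvm(A, B, v):
    """Compute (A kron B) @ v without forming A kron B.

    Uses (A kron B) vec(V) = vec(A V B^T), where vec stacks rows (NumPy's C order).
    """
    V = v.reshape(A.shape[1], B.shape[1])
    return (A @ V @ B.T).reshape(-1)

# A regular 2-D grid; the sizes and lengthscales are arbitrary illustrative choices.
x1, l1 = np.linspace(0.0, 1.0, 5), 0.4
x2, l2 = np.linspace(0.0, 2.0, 4), 0.7
K1, K2 = se_gram(x1, l1), se_gram(x2, l2)

# Lexicographic ordering: point i * len(x2) + j is (x1[i], x2[j]).
g1, g2 = np.meshgrid(x1, x2, indexing="ij")
X = np.stack([g1.ravel(), g2.ravel()], axis=1)
D = X[:, None, :] - X[None, :, :]
K_full = np.exp(-0.5 * ((D[..., 0] / l1) ** 2 + (D[..., 1] / l2) ** 2))

# Each factor is Toeplitz: entries are constant along every diagonal.
is_toeplitz = all(np.allclose(K[1:, 1:], K[:-1, :-1]) for K in (K1, K2))
v = np.random.default_rng(0).standard_normal(K_full.shape[0])
print(np.allclose(K_full, np.kron(K1, K2)), is_toeplitz,
      np.allclose(kron_mvm(K1, K2, v), K_full @ v))  # True True True
```

For a grid with n points on each of d axes, the factors hold d n^2 numbers, against n^{2d} for the full Gram matrix.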

Lattice GP methods perhaps complement the trick of filtering Gaussian processes, which can also exploit structured lattice inputs, although the two setups differ.

TBC.

1 Simulating lattice Gaussian fields with the desired covariance structure
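The circulant embedding mentioned above gives exact (not approximate) samples on a regular grid whenever the embedded circulant matrix is nonnegative definite. Below is a minimal 1-D NumPy sketch. It assumes an exponential (Matérn-1/2) covariance, a case where, as far as I know, the minimal 1-D embedding is always nonnegative definite; `exp_cov` and `circulant_embedding_sample` are hypothetical names. The Toeplitz covariance is extended symmetrically to a circulant of size 2(n-1), diagonalised by one FFT, and a complex Gaussian vector is coloured by the square-rooted eigenvalues. The real and imaginary parts of the result are two independent draws.

```python
import numpy as np

def exp_cov(r, lengthscale=0.3):
    """Exponential (Matern-1/2) covariance as a function of lag r (assumed kernel)."""
    return np.exp(-np.abs(r) / lengthscale)

def circulant_embedding_sample(cov_fn, n, h, n_samples, rng):
    """Draw 2 * n_samples exact samples of a zero-mean stationary GP on the
    1-D grid {0, h, ..., (n - 1) h} by circulant embedding (requires n >= 2)."""
    c = cov_fn(h * np.arange(n))               # first row of the Toeplitz covariance
    c_circ = np.concatenate([c, c[-2:0:-1]])   # symmetric circulant row, length 2(n - 1)
    m = c_circ.size
    lam = np.fft.fft(c_circ).real              # circulant eigenvalues via one FFT
    if lam.min() < -1e-10 * lam.max():
        raise ValueError("embedding is not nonnegative definite; pad it further")
    lam = np.clip(lam, 0.0, None)              # discard tiny negative round-off
    z = rng.standard_normal((n_samples, m)) + 1j * rng.standard_normal((n_samples, m))
    y = np.fft.fft(np.sqrt(lam / m) * z, axis=-1)
    # Real and imaginary parts are independent N(0, C_circ) draws; their first
    # n entries are therefore N(0, Toeplitz covariance) draws on the grid.
    return y.real[:, :n], y.imag[:, :n]

rng = np.random.default_rng(0)
n, h = 8, 0.1
re, im = circulant_embedding_sample(exp_cov, n, h, 20000, rng)
samples = np.vstack([re, im])                  # 40000 draws of length n
grid = h * np.arange(n)
target = exp_cov(grid[:, None] - grid[None, :])
empirical = samples.T @ samples / samples.shape[0]
print(np.abs(empirical - target).max())        # Monte Carlo error; shrinks with more samples
```

Each draw costs one FFT of length 2(n-1) rather than a Cholesky factorisation of the n-by-n covariance. For kernels such as the squared exponential with long lengthscales the minimal embedding can fail the nonnegativity check, in which case the usual remedy is to pad the embedding further.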

2 In regression

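OK, so what does this allow us to do with posterior inference in GPs? Apparently quite a lot. The KISS-GP method (A. G. Wilson and Nickisch 2015) exploits the same structure by interpolating arbitrary inputs onto a regular grid of inducing points, so the data themselves need not lie on a lattice. Saatçi (2012) and Flaxman et al. (2015) exploit it directly for gridded inputs.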
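For a Gaussian likelihood on a grid, the Kronecker trick reduces the exact GP solve to eigendecompositions of the small per-axis factors. Here is a minimal NumPy sketch of that idea, assuming a product squared-exponential kernel and i.i.d. Gaussian noise with known variance; the function names are hypothetical and this is not code from any of the cited papers. It relies on K1 ⊗ K2 = (Q1 ⊗ Q2)(Λ1 ⊗ Λ2)(Q1 ⊗ Q2)^T, so adding σ²I only shifts the eigenvalues, which yields both the linear solve and the log-determinant cheaply.

```python
import numpy as np

def se_gram(x, lengthscale):
    """Squared-exponential Gram matrix for 1-D inputs x (hypothetical helper)."""
    d = x[:, None] - x[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def kron_mvm(A, B, v):
    """(A kron B) @ v for a row-major stacked vector v, without forming A kron B."""
    return (A @ v.reshape(A.shape[1], B.shape[1]) @ B.T).reshape(-1)

def kron_gp_solve(K1, K2, y, noise_var):
    """Return alpha = (K1 kron K2 + noise_var I)^{-1} y and log det(K1 kron K2 + noise_var I)."""
    lam1, Q1 = np.linalg.eigh(K1)
    lam2, Q2 = np.linalg.eigh(K2)
    lam = np.kron(lam1, lam2) + noise_var   # eigenvalues of the noisy Gram matrix
    t = kron_mvm(Q1.T, Q2.T, y)             # rotate y into the joint eigenbasis
    alpha = kron_mvm(Q1, Q2, t / lam)       # rescale, then rotate back
    return alpha, np.sum(np.log(lam))

rng = np.random.default_rng(1)
K1 = se_gram(np.linspace(0.0, 1.0, 6), 0.3)
K2 = se_gram(np.linspace(0.0, 1.0, 5), 0.5)
y = rng.standard_normal(K1.shape[0] * K2.shape[0])
noise_var = 0.1

alpha, logdet = kron_gp_solve(K1, K2, y, noise_var)
post_mean = kron_mvm(K1, K2, alpha)         # posterior mean at the training grid points

# Dense check, feasible only because this grid is tiny.
K_dense = np.kron(K1, K2) + noise_var * np.eye(y.size)
print(np.allclose(alpha, np.linalg.solve(K_dense, y)),
      np.isclose(logdet, np.linalg.slogdet(K_dense)[1]))  # True True
```

The cost is dominated by the per-axis eigendecompositions, O(n1^3 + n2^3), plus Kronecker matrix-vector products, instead of O((n1 n2)^3) for the dense solve.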

Flaxman et al. (2015) extend the lattice structure trick to non-Gaussian likelihoods using a Laplace approximation.
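Here is a minimal sketch of how Kronecker structure can survive a non-Gaussian likelihood. It assumes a Poisson likelihood with log link, a zero prior mean and a product squared-exponential kernel. It runs the standard numerically stable Newton iteration for the Laplace mode and solves the inner system with B = I + W^{1/2} K W^{1/2} by conjugate gradients, so K is only ever touched through Kronecker matrix-vector products. This is in the spirit of Flaxman et al. (2015) rather than a reproduction of their algorithm; all function names are hypothetical.

```python
import numpy as np

def se_gram(x, lengthscale):
    """Squared-exponential Gram matrix for 1-D inputs x (hypothetical helper)."""
    d = x[:, None] - x[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def kron_mvm(A, B, v):
    """(A kron B) @ v for a row-major stacked vector v, without forming A kron B."""
    return (A @ v.reshape(A.shape[1], B.shape[1]) @ B.T).reshape(-1)

def cg(matvec, b, tol=1e-12, maxiter=500):
    """Plain conjugate gradients for a symmetric positive definite operator."""
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rs = r @ r
    b_norm = np.linalg.norm(b)
    for _ in range(maxiter):
        if np.sqrt(rs) <= tol * b_norm:
            break
        Ap = matvec(p)
        step = rs / (p @ Ap)
        x += step * p
        r -= step * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

def laplace_mode_poisson(K1, K2, y, n_iter=30):
    """Newton iterations for the mode of log p(y | f) + log N(f | 0, K1 kron K2),
    with y_i ~ Poisson(exp(f_i))."""
    K = lambda v: kron_mvm(K1, K2, v)
    f = np.zeros(y.shape, dtype=float)
    for _ in range(n_iter):
        W = np.exp(f)                        # negative Hessian of the log-likelihood
        grad = y - W                         # gradient of sum(y * f - exp(f))
        sW = np.sqrt(W)
        b = W * f + grad
        # Solve (I + W^{1/2} K W^{1/2}) z = W^{1/2} K b using only Kronecker MVMs.
        z = cg(lambda v: v + sW * K(sW * v), sW * K(b))
        a = b - sW * z
        f = K(a)                             # Newton update: f = K a
    return f

rng = np.random.default_rng(2)
K1 = se_gram(np.linspace(0.0, 1.0, 8), 0.3)
K2 = se_gram(np.linspace(0.0, 1.0, 6), 0.3)
y = rng.poisson(2.0, size=K1.shape[0] * K2.shape[0]).astype(float)
f_hat = laplace_mode_poisson(K1, K2, y)

# At the mode, f_hat = K grad log p(y | f_hat); the residual should be near zero.
residual = f_hat - kron_mvm(K1, K2, y - np.exp(f_hat))
print(np.abs(residual).max())
```

A full treatment also needs the approximate log marginal likelihood, whose log-determinant term no longer factorises neatly once W is not a multiple of the identity; this sketch does not address it.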

TBC

3 Incoming

- Scaling multi-output Gaussian process models with exact inference

- Gaussian Process Latent Variable Model

- Cox Processes (w/ Pyro/GPyTorch Low-Level Interface)

- Latent Function Inference with Pyro + GPyTorch (Low-Level Interface)

- Exact DKL (Deep Kernel Learning) Regression w/ KISS-GP

- Exact GPs with Scalable (GPU) Inference — GPyTorch

- GP Regression with LOVE for Fast Predictive Variances and Sampling