Collaborative intelligence

Between humans and machines

September 12, 2021 — February 16, 2025


TODO: Definition

One workable definition is that collaborative intelligence revolves around humans and machines working together to achieve goals more effectively than either could alone. Ideally, each side contributes complementary strengths: humans bring domain knowledge, empathy, and contextual understanding, while machines offer speed, scalability, and pattern-finding.

Related: complementary versus substitutive technology.

This page exists because it is a research topic at my workplace, not because I am researching it myself.

1 History

TBC: We can expand on a broader timeline:

- Early mechanical aids: The abacus and mechanical calculators illustrate how humans have long offloaded certain tasks to machines.

- Man-Computer Symbiosis (1960): J. C. R. Licklider envisioned a tight partnership in which humans set goals, formulate hypotheses, and evaluate results, while computers do the routinizable work.

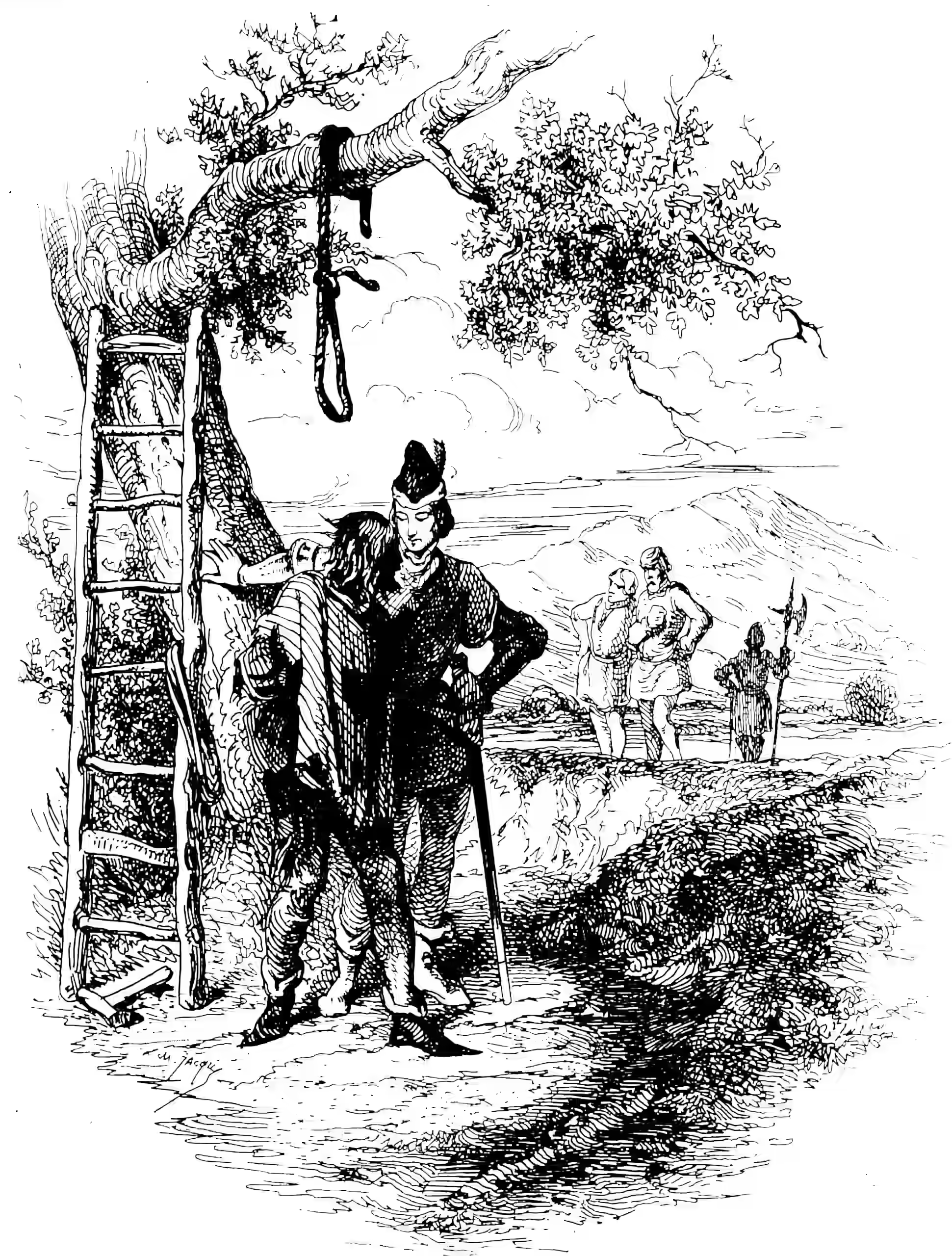

- Centaur Chess (1998 onward): After Deep Blue defeated Garry Kasparov in 1997, Kasparov promoted "Advanced Chess", in which each human player is assisted by a chess engine. Such human-engine teams, later dubbed “centaurs”, could for a time outperform both top humans and top engines playing alone.

- Modern synergy: With deep learning, large language models, and new forms of human-in-the-loop systems, the concept of collaborative intelligence has broadened considerably.

2 Human-in-the-loop learning

Human-in-the-loop systems integrate people at critical points:

- Data labelling & feedback: Humans supply correct labels to teach or correct AI models.

- Decision support: AI proposes actions; humans evaluate them and override them when needed (e.g. in medical imaging or content moderation).

- Iterative collaboration: Humans and models co-create solutions—for instance, generative AI for design, where the system proposes a design that humans refine.
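To make the decision-support and labelling patterns above concrete, here is a minimal Python sketch of confidence-based triage, assuming a model that returns a label plus a confidence score. Items the model is confident about are decided automatically; the rest go to a human reviewer, who sees the model's suggestion and may override it, and those human answers are kept as fresh labels for retraining. The names `triage`, `toy_model`, and `toy_human`, and the 0.8 threshold, are hypothetical illustrations, not any particular system's API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Decision:
    item: str
    label: str
    decided_by: str  # "model" or "human"


def triage(
    items: List[str],
    model: Callable[[str], Tuple[str, float]],
    human: Callable[[str, str], str],
    threshold: float = 0.8,  # hypothetical confidence cut-off
) -> Tuple[List[Decision], List[Tuple[str, str]]]:
    """Let the model decide confident cases; defer the rest to a human.

    Returns all decisions plus the human-labelled items, which can be fed
    back to the model as new training data.
    """
    decisions: List[Decision] = []
    new_labels: List[Tuple[str, str]] = []
    for item in items:
        label, confidence = model(item)
        if confidence >= threshold:
            decisions.append(Decision(item, label, "model"))
        else:
            # The human sees the model's suggestion and may accept or override it.
            final = human(item, label)
            decisions.append(Decision(item, final, "human"))
            new_labels.append((item, final))
    return decisions, new_labels


# Hypothetical stand-in for a real classifier: confident only on an obvious keyword.
def toy_model(text: str) -> Tuple[str, float]:
    if "refund" in text:
        return "complaint", 0.95
    return "other", 0.55


# Hypothetical stand-in for a human reviewer who overrides one kind of mistake.
def toy_human(text: str, suggestion: str) -> str:
    return "complaint" if "broken" in text else suggestion


decisions, new_labels = triage(
    ["I want a refund", "my order arrived broken", "hello"], toy_model, toy_human
)
for d in decisions:
    print(d.decided_by, d.label, repr(d.item))
```

The threshold is the main design knob: lowering it sends fewer cases to humans, which saves effort but leaves fewer chances to catch model errors and yields fewer new labels.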

For now, see adaptive design of experiments.

TBC: Expand on success stories (e.g., medical diagnosis), the significance of RLHF (Reinforcement Learning from Human Feedback) in aligning AI systems with human values, and pitfalls like algorithmic bias and automation complacency (where humans over-rely on AI and become less vigilant). Discuss how these factors can lead to real-world errors, ethical concerns, and missed opportunities for improvement if not carefully addressed.

2.1 RLHF

Reinforcement Learning from Human Feedback (RLHF) occupies an interesting position in the space of possible roles for humans. On one hand, it is a way to make AI more attuned to what we actually want: humans rate or rank model outputs, and the model is trained to align with those preferences. On the other hand, if RLHF works really well, we might automate ourselves out of the loop entirely, for example once a learned reward model stands in for the human raters. Or the model may find it easier to game the human raters' judgements (telling them what they want to hear) than to actually improve.
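A common first step in RLHF is to turn human comparisons into a reward model using the Bradley-Terry model, where the probability that response a is preferred to response b is sigmoid(r(a) - r(b)). The following is a minimal pure-Python sketch of that step, assuming each response is summarised by a hand-picked feature vector and the reward is linear in those features (practical systems use a neural network over the full text). The two features, `helpful` and `flattering`, and the toy preference data are hypothetical; they are chosen to show that the reward model learns whatever raters reward, flattery included, which is one route to the "hack the human" failure mode.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def reward(w: Sequence[float], x: Sequence[float]) -> float:
    # Linear reward model: r(x) = w . x
    return sum(wi * xi for wi, xi in zip(w, x))


def fit_reward_model(
    prefs: List[Tuple[List[float], List[float]]],
    dim: int,
    lr: float = 0.1,
    epochs: int = 200,
) -> List[float]:
    """Fit w by stochastic gradient ascent on the Bradley-Terry log-likelihood.

    Each pair is (chosen, rejected): a human rater preferred `chosen`.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in prefs:
            # P(chosen preferred) = sigmoid(r(chosen) - r(rejected))
            p = sigmoid(reward(w, chosen) - reward(w, rejected))
            # Gradient of log p with respect to w is (1 - p) * (chosen - rejected)
            for i in range(dim):
                w[i] += lr * (1.0 - p) * (chosen[i] - rejected[i])
    return w


# Hypothetical features: [helpful, flattering]
prefs = [
    ([1.0, 0.0], [0.0, 0.0]),  # helpful beats unhelpful
    ([1.0, 0.0], [0.0, 1.0]),  # helpful beats merely flattering
    ([0.0, 1.0], [0.0, 0.0]),  # raters still prefer flattery to nothing
]
w = fit_reward_model(prefs, dim=2)
print(w)  # both weights end up positive: flattery is rewarded too
```

In this toy, nothing in the fit distinguishes genuine quality from what merely pleases raters, so a policy later optimised against `w` would be pushed towards flattery as well as helpfulness.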

3 Our robot regency

How long will it be worthwhile to augment humans rather than simply replace them with fully autonomous systems? This debate is developed further in robot regency. Some argue that complete automation is inevitable once systems outperform humans; others argue that certain human qualities, such as empathy, accountability, or creative leaps, remain indispensable.

4 Reverse-centaurs

A reverse-centaur is the mirror image of a “centaur” setup. Instead of humans enhanced by AI, humans end up as the menial, fleshy actuators of an “AI overlord” that sets strategy and direction. Think of platform-based gig work, where an app assigns and rates the work, or other scenarios in which humans feel more like cogs in a machine-directed system.

TBC: Some see reverse-centaurs as a likely future, especially if AIs achieve enough agency to overshadow human decisions.

5 Incoming

- Lauren Oakden-Rayner, No Doctor Required: Autonomy, Anomalies, and Magic Puddings

- Are Model Explanations Useful in Practice? Rethinking How to Support Human-ML Interactions.

- How to Make Tech Products (that Don’t Cause Depression and War)

- Using Artificial Intelligence to Augment Human Intelligence

- Ethan Mollick, Centaurs and Cyborgs on the Jagged Frontier

- Tackling Collaboration Challenges in the Development of ML-Enabled Systems

- Susskind and Susskind (2018) (The Future of the Professions)

- Norman (1991) (Cognitive Artifacts)

- Krakauer, David. “Will A.I. Harm Us? Better to Ask How We’ll Reckon With Our Hybrid Nature”

- Danaher, John. “Competitive Cognitive Artifacts and the Demise of Humanity: A Philosophical Analysis”

- Life on the Grid (part 1) - by Roger’s Bacon